Characteristic point recognition method based on neural network

A neural-network-based recognition technology, applied to neural learning methods, biological neural network models, and input/output processes in data processing. It addresses the problem of low accuracy and efficiency, and achieves higher accuracy, faster calculation, and a simpler positioning process.

Embodiment Construction

[0025] Current virtual reality spatial positioning methods determine projection IDs with low accuracy and efficiency. To address this defect, the present invention provides a neural-network-based feature point recognition method that improves both the accuracy and the efficiency of projection ID determination.

[0026] To give a clearer understanding of the technical features, purposes, and effects of the present invention, specific embodiments are described in detail below with reference to the accompanying drawings.

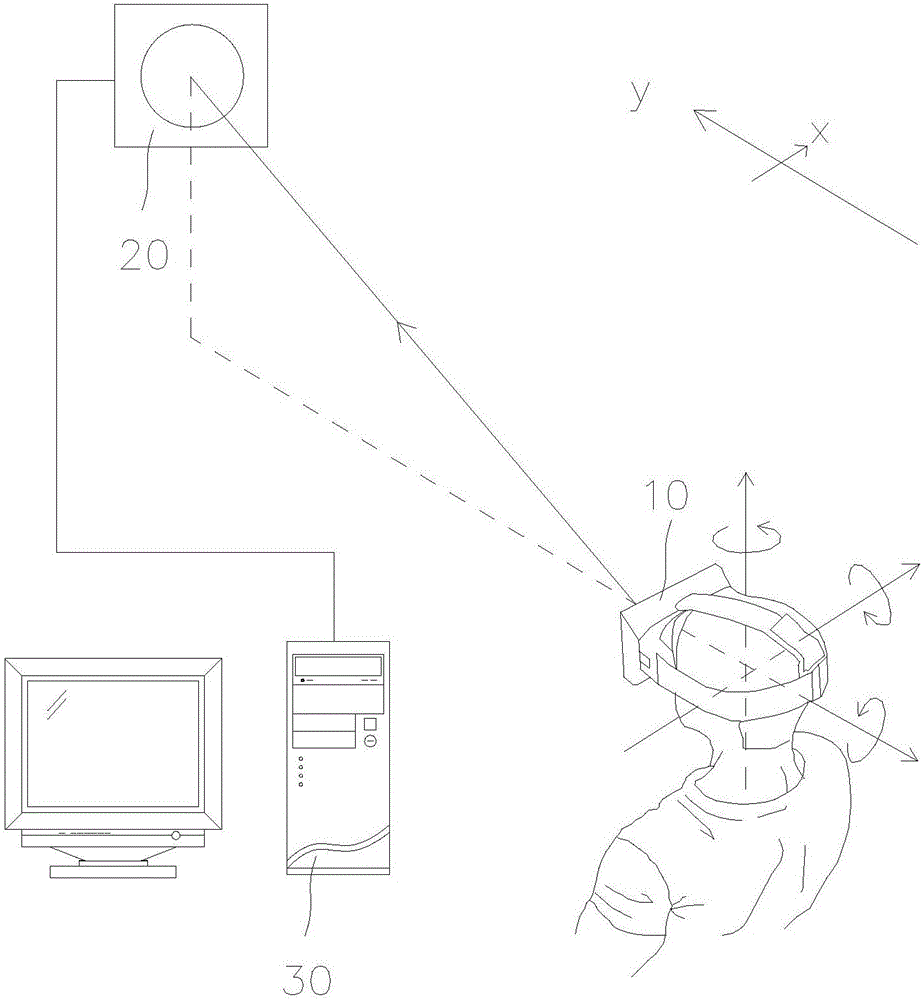

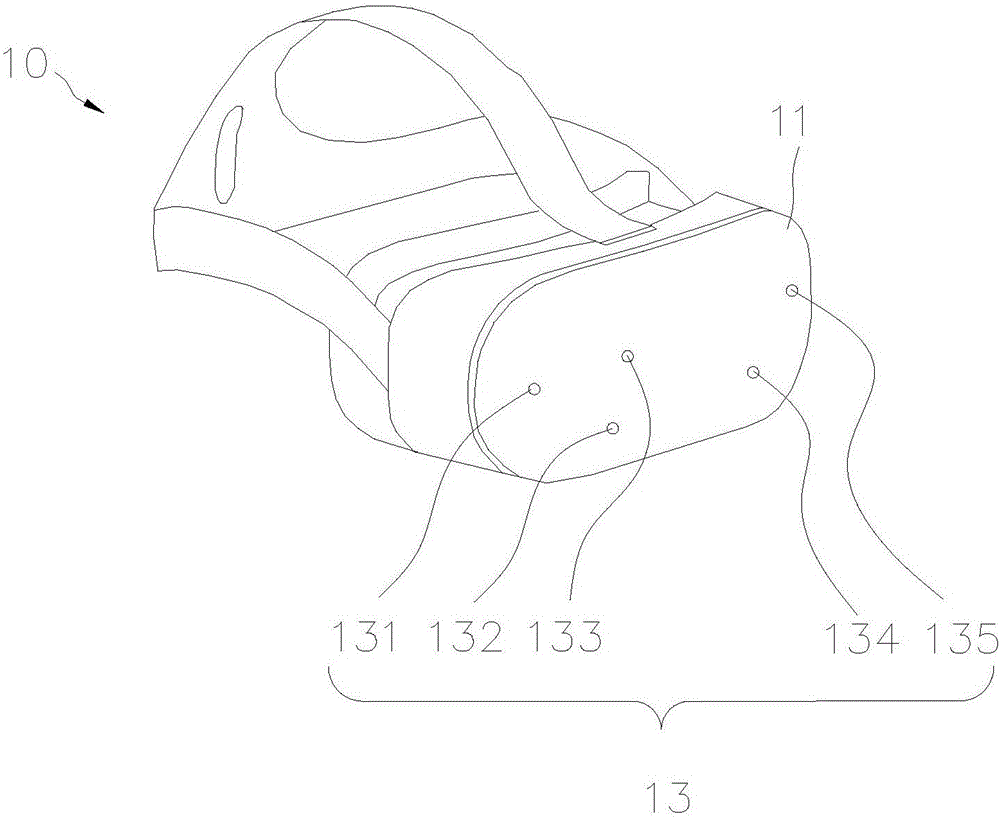

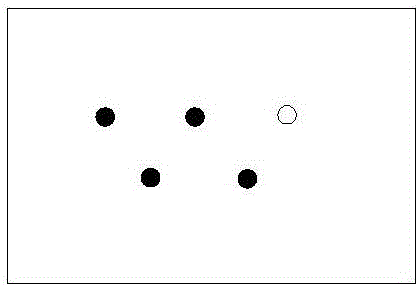

[0027] See Figures 1 and 2. The neural-network-based feature point recognition method of the present invention uses a virtual reality helmet 10, an infrared camera 20, and a processing unit 30; the infrared camera 20 is electrically connected to the processing unit 30. The virtual reality helmet 10 includes a front panel 11, and a plurality of infrared point light sources 13 ar
