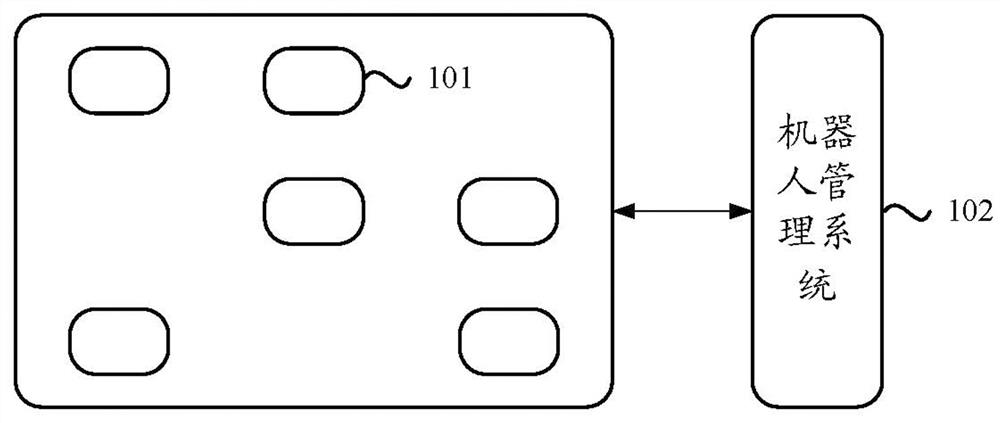

Robot repositioning method, device and equipment

A robot relocation technology, applied in the computer field, that addresses problems such as task interruption, time-consuming and labor-intensive relocation, and interference with the normal execution of robot tasks, and that achieves simple and accurate relocation.

Examples

example 1

[0067]Example 1. The mapping robot can be equipped with a rangefinder that measures the distance between the robot and the feature points of the photographed object, such as the distance to the feature points of a photographed two-dimensional code or to the feature points of the photographed ceiling. Then, based on that distance and the camera's viewing angle, the relative position between the robot and each feature point is determined. Finally, the position of each feature point in the initial map is calculated from the robot's current position during the collection operation (such as its three-dimensional coordinates in a three-dimensional initial map, or its two-dimensional coordinates in a two-dimensional initial map) and that relative position.
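The calculation in Example 1 can be sketched for the two-dimensional case as follows. This is only an illustration under stated assumptions, not the patent's implementation: the robot pose is assumed to include a heading angle in the map frame, and the camera's viewing angle is assumed to have already been reduced to a bearing relative to that heading. The names `Pose2D`, `relative_offset` and `feature_in_map` are hypothetical.

```python
import math
from typing import NamedTuple, Tuple


class Pose2D(NamedTuple):
    """Robot pose in the 2D initial map (hypothetical type for this sketch)."""
    x: float
    y: float
    heading: float  # radians, measured in the map frame


def relative_offset(distance: float, bearing: float) -> Tuple[float, float]:
    """Relative position of a feature point in the robot frame.

    distance: rangefinder reading to the feature point.
    bearing:  angle to the feature point relative to the robot heading
              (assumed to be derived from the camera's viewing angle).
    """
    return distance * math.cos(bearing), distance * math.sin(bearing)


def feature_in_map(pose: Pose2D, distance: float, bearing: float) -> Tuple[float, float]:
    """Position of the feature point in the map frame.

    Rotates the robot-frame offset by the robot heading, then translates
    it by the robot's current position in the map.
    """
    dx, dy = relative_offset(distance, bearing)
    c, s = math.cos(pose.heading), math.sin(pose.heading)
    return pose.x + c * dx - s * dy, pose.y + s * dx + c * dy
```

For instance, a robot at (1, 2) facing along the map's positive y axis that measures a feature point 2 units straight ahead would place that point at approximately (1, 4). A three-dimensional map would need a full rotation and an elevation angle, which this sketch omits.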

[0068]Example 2: Extract the feature points in the environmental image collected in step 404, and parse the location distribution data between the featu

example 2

[0089]Example 2. When the robot needs to be repositioned, a QR code image can be collected first, because relocation can be completed quickly through QR code identification; if there is no QR code nearby, a ceiling image can be collected instead.

[0090]Example 3. When the target environment image is collected, first determine whether it is a two-dimensional code image. If so, trigger the step of identifying the identification information of the two-dimensional code; otherwise, trigger the step of performing feature matching between the first feature point set in the target environment image and the pre-established visual map, together with the subsequent steps.

[0091]In other words, in this example, the two-dimensional code image is preferentially used, and the priority of other types of environmental images is lower than the two-dimensional code image.
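The priority rule described in this example can be sketched as a simple dispatch. This is a minimal illustration, not the patent's implementation: the function `relocate` and its three callable parameters (`is_qr_code`, `locate_by_qr`, `locate_by_features`) are hypothetical placeholders for the two-dimensional code check, the identification-information lookup, and the feature matching against the visual map.

```python
from typing import Any, Callable, Tuple

Position = Tuple[float, float]


def relocate(
    image: Any,
    is_qr_code: Callable[[Any], bool],
    locate_by_qr: Callable[[Any], Position],
    locate_by_features: Callable[[Any], Position],
) -> Tuple[str, Position]:
    """Choose the relocation path for a collected target environment image.

    The two-dimensional code path has priority: feature matching against
    the visual map runs only when the image is not a two-dimensional code.
    Returns the name of the path taken and the resulting position.
    """
    if is_qr_code(image):
        # Preferred path: read the code's identification information.
        return "qr", locate_by_qr(image)
    # Fallback path: match the image's feature points to the visual map.
    return "features", locate_by_features(image)
```

Passing the three steps in as callables keeps the ordering logic separate from whatever detector or matcher is actually used.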

[0092]Example 4. When an image of the target environment is collected, th
