High-capacity cache, fast retrieval method therefor, and construction method thereof

A large-capacity cache, a fast retrieval method for it, and a construction method thereof, applied in the field of large-capacity caching, intended to save memory space and improve memory utilization.


Examples

Embodiment 1

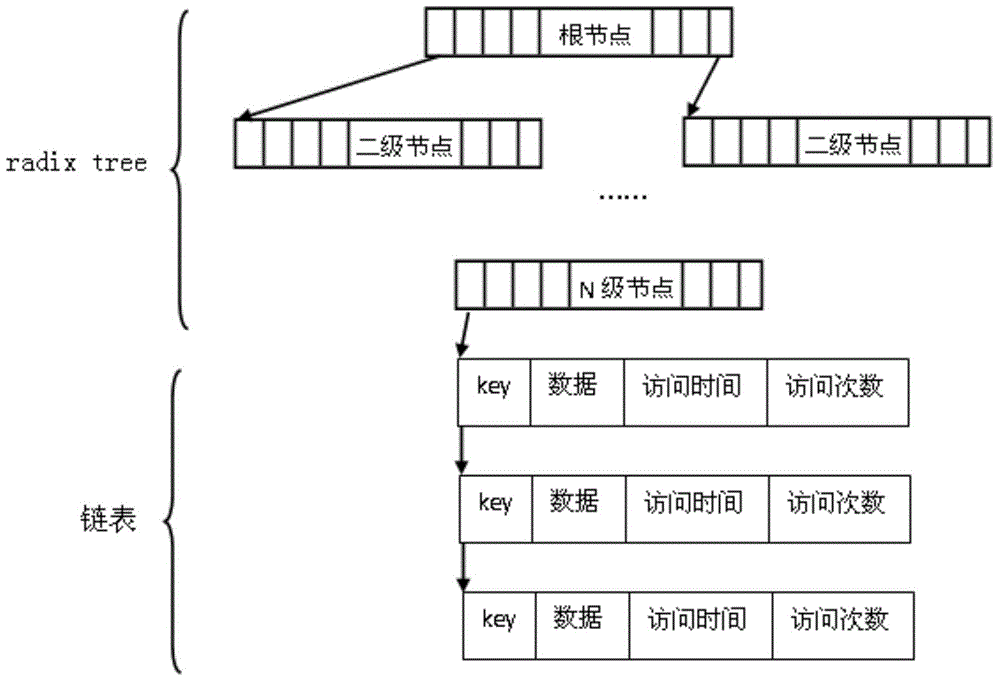

[0038] As shown in Figure 1, an embodiment of the present invention provides a large-capacity cache whose data structure comprises a linked list and a radix tree: data is stored in the linked list, and the linked list is attached to a leaf node of the radix tree. The key comprises a first key and a second key; the first key is used to search the radix tree and locate the linked list corresponding to the key, and the second key is used to search that linked list and find the data.

[0039] A radix tree offers fast access but occupies more memory, whereas a linked list is slower to access but occupies less memory. In this embodiment, data is stored in linked lists and each linked list is attached to a leaf node of the radix tree, so the two structures complement each other: the radix tree quickly locates the relevant list, and the lists hold the data compactly. The combination achieves faster access.
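The structure of Embodiment 1 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the patented implementation: the source does not say how the first key is encoded, so the sketch assumes an integer first key of at most 16 bits, consumed 4 bits per tree level (`RADIX_BITS`, `LEVELS`). The names `RadixTree`, `LinkedList`, `Entry`, and `put` are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Optional

RADIX_BITS = 4                   # hypothetical: bits of the first key consumed per tree level
RADIX = 1 << RADIX_BITS          # 16 child slots per tree node
LEVELS = 4                       # hypothetical: 4 levels x 4 bits = 16-bit first keys
MAX_FIRST_KEY = 1 << (RADIX_BITS * LEVELS)


@dataclass
class Entry:
    """One cached item, stored as a node of a singly linked list."""
    second_key: Any
    value: Any
    next: Optional["Entry"] = None


class LinkedList:
    """Linked list attached to one leaf slot of the radix tree."""

    def __init__(self) -> None:
        self.head: Optional[Entry] = None

    def find(self, second_key: Any) -> Optional[Entry]:
        # Linear scan by second key: slower than an index, but no extra index memory.
        node = self.head
        while node is not None:
            if node.second_key == second_key:
                return node
            node = node.next
        return None

    def push_front(self, second_key: Any, value: Any) -> None:
        self.head = Entry(second_key, value, self.head)


class RadixTree:
    """Fixed-stride radix tree mapping an integer first key to a LinkedList."""

    def __init__(self) -> None:
        self.root: list = [None] * RADIX

    @staticmethod
    def _chunks(first_key: int) -> list:
        if not 0 <= first_key < MAX_FIRST_KEY:
            raise ValueError("first key out of range")
        # One slot index per level, most significant chunk first.
        return [(first_key >> (lvl * RADIX_BITS)) & (RADIX - 1)
                for lvl in reversed(range(LEVELS))]

    def lookup(self, first_key: int, create: bool = False) -> Optional[LinkedList]:
        *interior, last = self._chunks(first_key)
        node = self.root
        for i in interior:                      # walk (or build) the interior levels
            if node[i] is None:
                if not create:
                    return None
                node[i] = [None] * RADIX
            node = node[i]
        if node[last] is None and create:       # the leaf slot holds the linked list
            node[last] = LinkedList()
        return node[last]


def put(tree: RadixTree, first_key: int, second_key: Any, value: Any) -> None:
    """Store value under (first_key, second_key), overwriting an existing entry."""
    bucket = tree.lookup(first_key, create=True)
    entry = bucket.find(second_key)
    if entry is not None:
        entry.value = value
    else:
        bucket.push_front(second_key, value)
```

For example, `put(tree, 0x12AB, "user:42", "alice")` walks slots 1, 2, and A of the interior levels and stores the entry in the list held by slot B of the last level. All entries sharing a first key share one leaf slot, and each entry costs only one list node.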

Embodiment 2

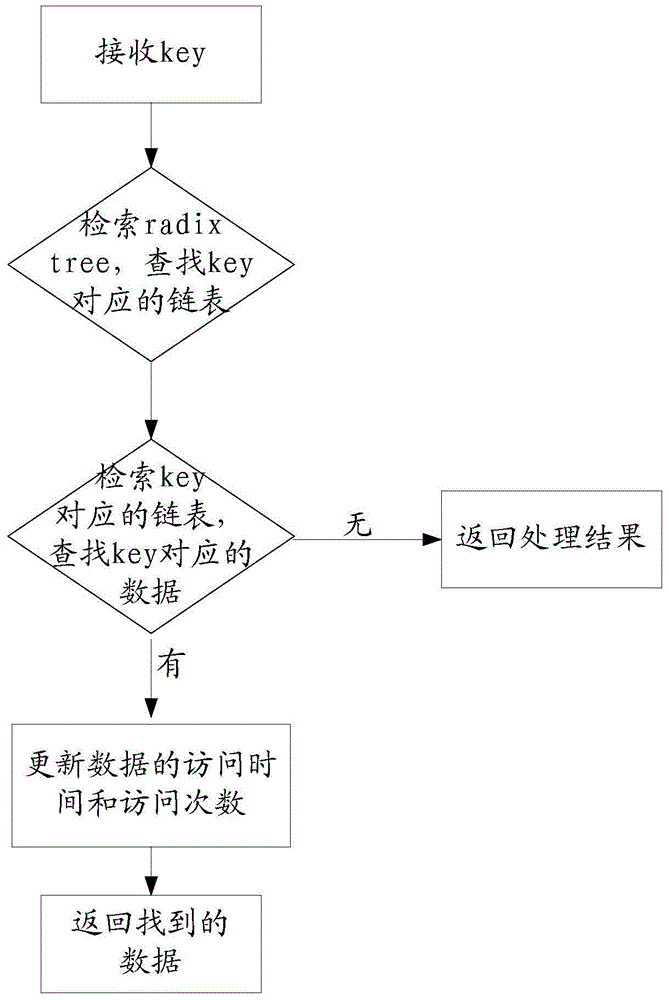

[0044] As shown in Figure 2, an embodiment of the present invention provides a fast retrieval method for the large-capacity cache provided by Embodiment 1. The method comprises the following steps:

[0045] S1. Receive a key;

[0046] S2. Search the radix tree according to the first key to locate the linked list corresponding to the key;

[0047] S3. Search the linked list corresponding to the key according to the second key for the data corresponding to the key; if the data is not found, return the processing result; if the data is found, perform steps S4 and S5;

[0048] S4. After the data is found, update its access time and access count;

[0049] S5. Return the found data.
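Steps S1 to S5 can be sketched as below, again as a minimal, self-contained illustration rather than the patented implementation. Assumptions: the key arrives as a `(first_key, second_key)` pair, the first key is a 16-bit integer split into 4-bit chunks, radix-tree nodes are represented as dictionaries (a sparse variant of fixed-size slot arrays), and a miss is reported by returning `None`. The class `Cache`, its methods, and the `now` parameter are hypothetical.

```python
import time
from dataclasses import dataclass
from typing import Any, Optional

LEVELS, BITS = 4, 4           # hypothetical: 16-bit first key, 4 bits per tree level


@dataclass
class Entry:
    second_key: Any
    value: Any
    access_time: float = 0.0  # updated in S4
    access_count: int = 0     # updated in S4
    next: Optional["Entry"] = None


class Cache:
    def __init__(self) -> None:
        # Radix-tree root: each level maps a 4-bit chunk to the next node,
        # and the last level maps its chunk to the head of a linked list.
        self.root: dict = {}

    @staticmethod
    def _chunks(first_key: int) -> list:
        if not 0 <= first_key < 1 << (BITS * LEVELS):
            raise ValueError("first key out of range")
        mask = (1 << BITS) - 1
        return [(first_key >> (BITS * lvl)) & mask for lvl in reversed(range(LEVELS))]

    def put(self, first_key: int, second_key: Any, value: Any) -> None:
        *interior, last = self._chunks(first_key)
        node = self.root
        for c in interior:
            node = node.setdefault(c, {})
        entry = node.get(last)
        while entry is not None and entry.second_key != second_key:
            entry = entry.next
        if entry is not None:
            entry.value = value                                        # overwrite existing item
        else:
            node[last] = Entry(second_key, value, next=node.get(last))  # push to list front

    def get(self, first_key: int, second_key: Any, now: Optional[float] = None) -> Any:
        # S1: the key arrives as (first_key, second_key).
        # S2: walk the radix tree with the first key to reach the linked list.
        *interior, last = self._chunks(first_key)
        node = self.root
        for c in interior:
            node = node.get(c)
            if node is None:
                return None                      # miss: no list for this first key
        entry = node.get(last)
        # S3: scan the linked list with the second key.
        while entry is not None and entry.second_key != second_key:
            entry = entry.next
        if entry is None:
            return None                          # miss: report "not found"
        # S4: update the access time and the access count.
        entry.access_time = time.monotonic() if now is None else now
        entry.access_count += 1
        # S5: return the found data.
        return entry.value
```

A miss in S2 (no radix-tree path) and a miss in S3 (path exists but no matching second key) both return `None` here; the source only says that a processing result is returned, so a real implementation might distinguish the two.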

[0050] With the above technical solution, a large amount of data can be retrieved quickly without creating a large number of management nodes to manage the leaf nodes, which greatly saves memory space and improves memory utilization.

Embodiment 3

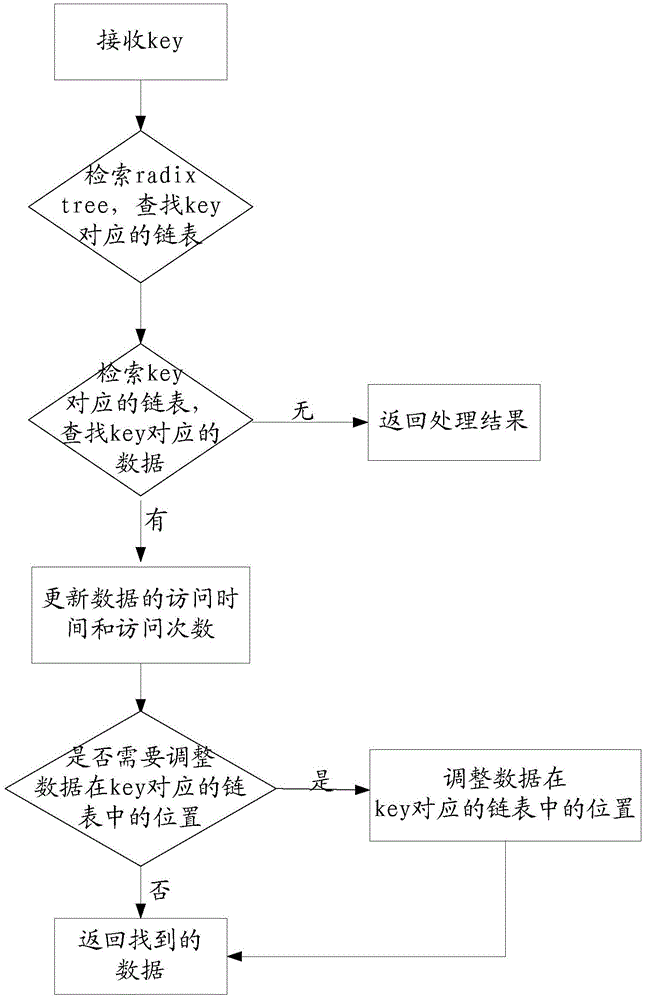

[0052] As shown in Figure 3, this embodiment of the present invention includes the fast retrieval method for a large-capacity cache provided by Embodiment 2, and may further include the following step between steps S4 and S5:

[0053] Based on the access time and access count of the data, determine whether the position of the data in the linked list corresponding to the key needs to be adjusted. If no adjustment is required, step S5 is performed; if adjustment is required, the position of the data in the linked list corresponding to the key is adjusted.

[0054] Specifically, adjusting the position of the data in the linked list corresponding to the key may proceed as follows: if the access priority of the data is higher than that of the comparison data in the linked list, the data is moved in front of the comparison data; if the access priority of the data is lower than the access priority of
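The position adjustment of Embodiment 3 can be sketched on a single linked list. The surviving text does not define the access priority, does not say which node serves as the comparison data, and breaks off before the lower-priority case, so this sketch assumes: priority is the pair (access count, last access time), the comparison data is the node immediately in front of the accessed entry, and the comparison is repeated so the entry moves forward past every consecutive lower-priority node. The names `priority`, `LinkedList.adjust`, and `Entry` are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class Entry:
    second_key: Any
    value: Any
    access_count: int = 0
    access_time: float = 0.0
    next: Optional["Entry"] = None


def priority(e: Entry) -> tuple:
    # Hypothetical ordering: more accesses first, ties broken by more recent access.
    return (e.access_count, e.access_time)


class LinkedList:
    def __init__(self, *entries: Entry) -> None:
        self.head: Optional[Entry] = None
        for e in reversed(entries):              # link entries in the given order
            e.next, self.head = self.head, e

    def keys(self) -> list:
        out, node = [], self.head
        while node is not None:
            out.append(node.second_key)
            node = node.next
        return out

    def adjust(self, target: Entry) -> bool:
        """Move target in front of the consecutive lower-priority nodes ahead of it.

        Returns False when no adjustment is needed (step S5 then follows directly).
        The lower-priority case is not recoverable from the text, so this sketch
        simply leaves such an entry where it is.
        """
        pri = priority(target)
        insert_after: Optional[Entry] = None    # None means "insert at head"
        prev: Optional[Entry] = None
        node = self.head
        while node is not target:
            if node is None:
                raise ValueError("entry is not in this list")
            if priority(node) >= pri:           # cannot overtake an equal or higher node
                insert_after = node
            prev, node = node, node.next
        if insert_after is prev:
            return False                        # already directly behind its nearest >= node
        prev.next = target.next                 # unlink target (prev is not None here)
        if insert_after is None:
            target.next, self.head = self.head, target
        else:
            target.next, insert_after.next = insert_after.next, target
        return True
```

Because an accessed entry only moves past nodes it outranks, frequently used items drift toward the head of their list, which shortens the linear scan in step S3 for them.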
