Scene text recognition method based on man-machine cooperation

A scene text recognition technology based on human-machine collaboration, applied in the field of end-to-end scene text recognition. It addresses the problem that manual annotation is time-consuming, labor-intensive, and costly, and it achieves high recognition performance while reducing the proportion of samples that must be annotated manually.

Embodiment Construction

[0036] The present invention is described in detail below with reference to specific embodiments. The following examples will help those skilled in the art to further understand the present invention, but do not limit it in any form. It should be noted that those skilled in the art can make several modifications and improvements without departing from the concept of the present invention, and all such modifications and improvements fall within the protection scope of the present invention.

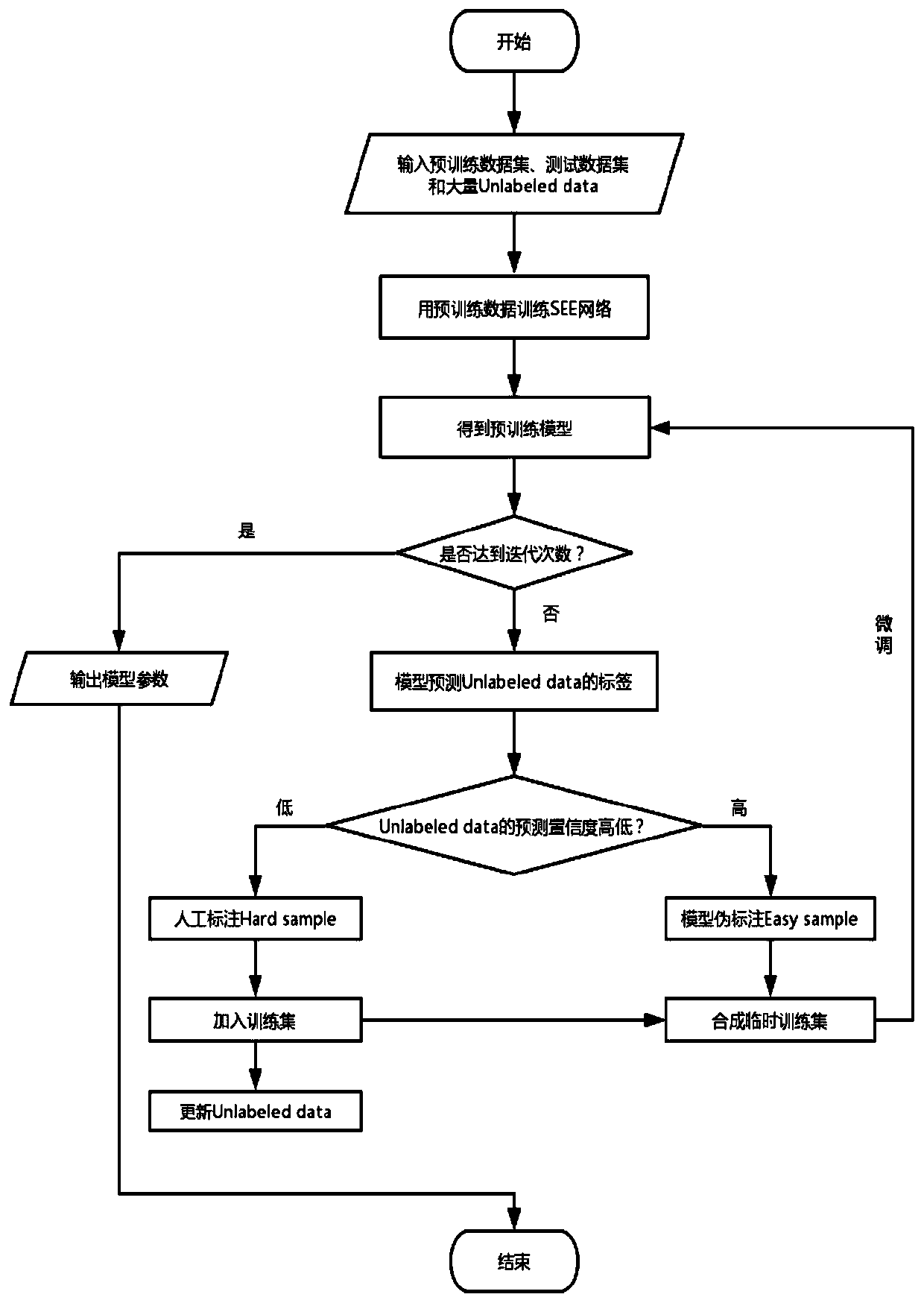

[0037] As shown in Figure 1, an embodiment of the present invention provides a scene text recognition method based on human-machine collaboration, which uses human-machine collaboration to label currently unlabeled samples and comprises the following steps:

[0038] S1. Divide the existing scene text dataset FSNS into labeled data and unlabeled data; select 20% of the labeled data as a test set, and use the remaining 80% of the labeled data as a pre-training
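The data preparation in step S1 can be sketched in Python as follows. This is a minimal sketch under assumptions the source does not state: each FSNS sample is represented as a hypothetical `(image_path, text)` pair whose `text` is `None` when the sample is unlabeled, and the 20%/80% split of the labeled data is done with a seeded random shuffle. The helper names `partition_by_label` and `split_labeled` are illustrative only, not part of the described method.

```python
import random
from typing import List, Optional, Sequence, Tuple

# Hypothetical record format (assumption): (image_path, transcription or None).
Record = Tuple[str, Optional[str]]


def partition_by_label(records: Sequence[Record]) -> Tuple[List[Record], List[Record]]:
    """Separate samples that carry a transcription from those that do not."""
    labeled = [r for r in records if r[1] is not None]
    unlabeled = [r for r in records if r[1] is None]
    return labeled, unlabeled


def split_labeled(
    labeled: Sequence[Record], test_fraction: float = 0.2, seed: int = 0
) -> Tuple[List[Record], List[Record]]:
    """Shuffle the labeled data and return (test_set, pretrain_set).

    test_fraction=0.2 mirrors the 20% test / 80% pre-training split in S1;
    the seeded shuffle is an assumption made for reproducibility.
    """
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be in (0, 1)")
    shuffled = list(labeled)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[:n_test], shuffled[n_test:]


if __name__ == "__main__":
    # Toy data: every fourth sample has no transcription (unlabeled).
    records = [(f"img_{i}.png", None if i % 4 == 0 else "Rue") for i in range(100)]
    labeled, unlabeled = partition_by_label(records)
    test_set, pretrain_set = split_labeled(labeled)
    print(len(labeled), len(unlabeled), len(test_set), len(pretrain_set))
```

With the toy data above, 75 samples are labeled and 25 unlabeled, so the labeled data splits into 15 test samples and 60 pre-training samples. Fixing the seed keeps the test set stable across pre-training runs, which matters when later rounds of human-machine labeling are evaluated against the same held-out data.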

PUM

Login to view more

Login to view more Abstract

Description

Claims

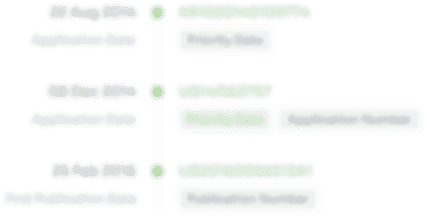

Application Information

Login to view more

Login to view more - R&D Engineer

- R&D Manager

- IP Professional

- Industry Leading Data Capabilities

- Powerful AI technology

- Patent DNA Extraction

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic.

© 2024 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap