Video segmentation from an uncalibrated camera array

A camera-array video segmentation technology in the field of video processing. It addresses three problems of green-screen setups: masked areas spilling color onto foreground objects, unnatural acting environments, and the significant, expensive effort of setting up green screens.


Embodiment Construction

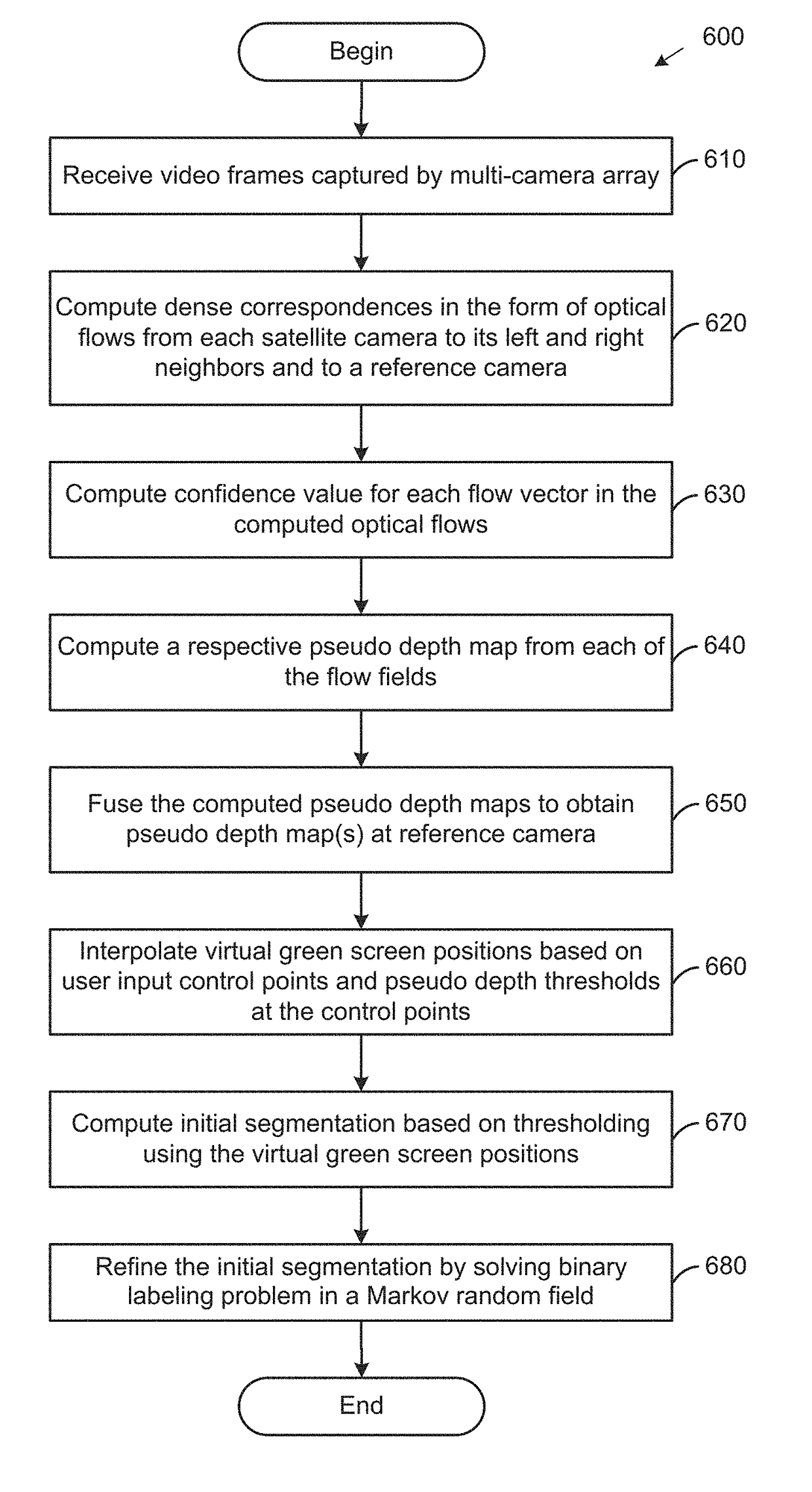

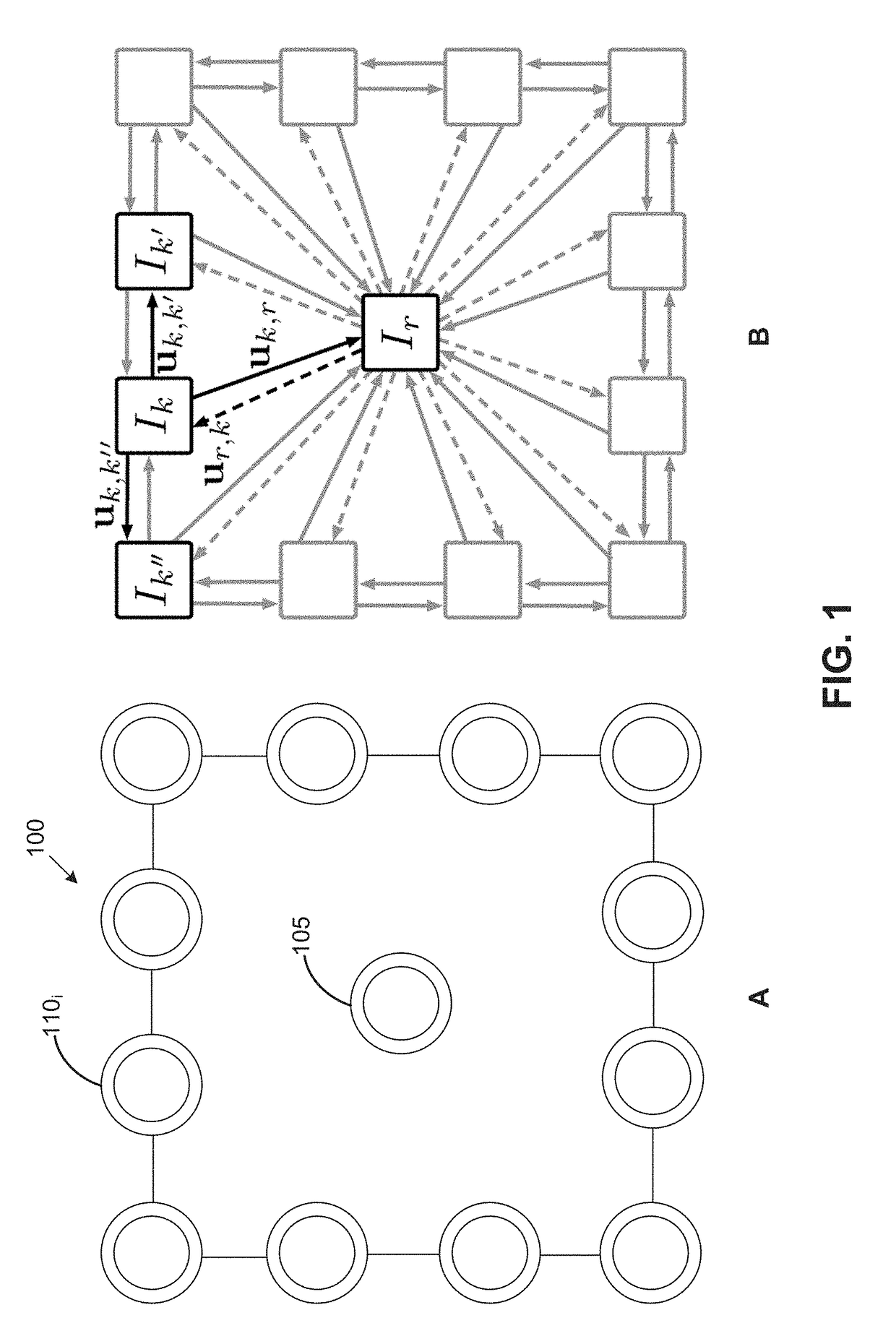

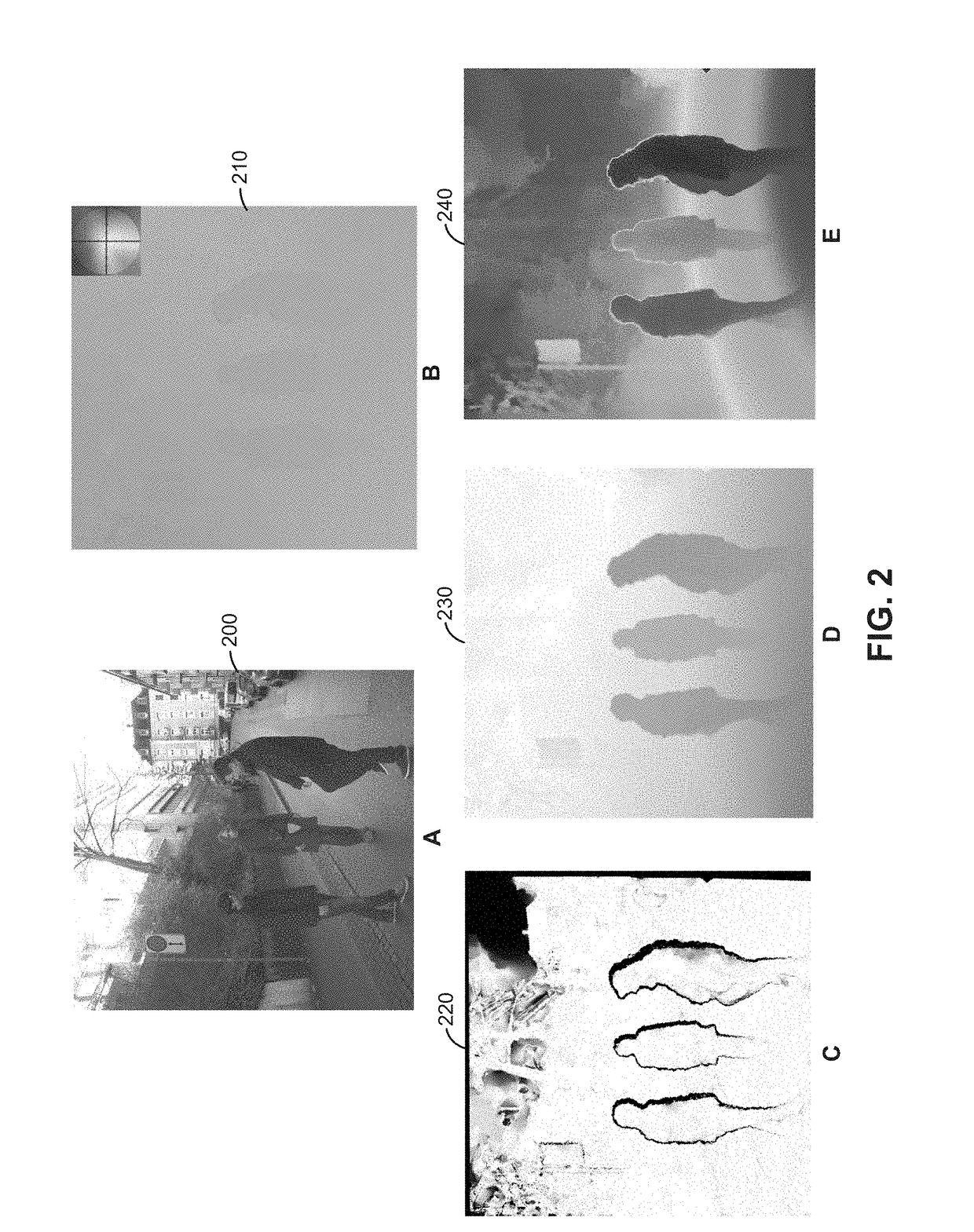

[0015] Aspects presented herein provide an approach for image segmentation from an uncalibrated camera array. In contrast to traditional green screens and chroma keying, a segmentation application obtains depth information for the pixels of an image and performs depth-based segmentation by placing a "virtual" green screen after the image has been captured. In one aspect, the segmentation application computes a pseudo depth map for each frame of a video sequence recorded with a camera array, based on dense correspondences between the cameras in the array. As used herein, a pseudo depth generally refers to a depth ordering of pixels: it indicates the depth of each pixel relative to the other pixels in an image, without reconstructing the actual depths of the pixels. Computing pseudo depths does not require geometric or photometric camera calibration (i.e., the camera array may be uncalibrated), and pseudo depths have no metric meaning, i.e., the pseudo depths do not actually r…
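The idea of a pseudo depth ordering followed by a "virtual" green screen can be illustrated with a small sketch. It rests on assumptions that the excerpt does not state. The dense correspondences are taken as given, for example from an optical-flow method, as per-pixel `(dx, dy)` offsets from a reference camera to each neighboring camera. A larger average offset (parallax) is treated as a nearer point, which is the usual relation for cameras that are displaced mainly by translation. The mapping `1 / (1 + parallax)`, the helper names `pseudo_depth_map` and `virtual_green_screen`, and the `screen` threshold are all hypothetical illustrations and are not the patented method.

```python
import math
from typing import List, Sequence, Tuple

# One dense correspondence field (assumed input): for each pixel (y, x) of the
# reference camera, the (dx, dy) offset to its match in another camera.
Correspondences = Sequence[Sequence[Tuple[float, float]]]


def pseudo_depth_map(fields: Sequence[Correspondences]) -> List[List[float]]:
    """Hypothetical helper: pseudo depth per pixel from dense correspondences.

    Assumption: a larger average parallax (offset magnitude) across the other
    cameras means a nearer scene point. 1 / (1 + parallax) is just one monotone
    choice; the values carry ordering meaning only, with no calibration or
    metric scale involved.
    """
    height, width = len(fields[0]), len(fields[0][0])
    depth = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            parallax = sum(math.hypot(*f[y][x]) for f in fields) / len(fields)
            depth[y][x] = 1.0 / (1.0 + parallax)  # smaller value = nearer
    return depth


def virtual_green_screen(depth: List[List[float]], screen: float) -> List[List[bool]]:
    """Hypothetical helper: place a virtual screen at pseudo depth `screen`.

    Pixels in front of the screen (smaller pseudo depth) are kept as
    foreground (True); everything behind it is masked out (False).
    """
    return [[d < screen for d in row] for row in depth]


if __name__ == "__main__":
    # 2x2 reference image matched against two neighboring cameras.
    cam_a = [[(4.0, 0.0), (1.0, 0.0)], [(4.0, 0.0), (0.0, 0.0)]]
    cam_b = [[(0.0, 4.0), (1.0, 0.0)], [(3.0, 4.0), (0.0, 0.0)]]
    depth = pseudo_depth_map([cam_a, cam_b])
    print(virtual_green_screen(depth, screen=0.3))  # [[True, False], [True, False]]
```

Only the ordering of the pseudo depths matters here, so any monotone transform of parallax would give the same segmentation for a correspondingly transformed threshold. This is what allows the screen to be placed after capture without calibrating the cameras.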
