Deferring and combining write barriers for a garbage-collected heap

I. Deferring and Combining Write Barriers in Compiled Code

A. Deferring Write Barriers in Compiled Code

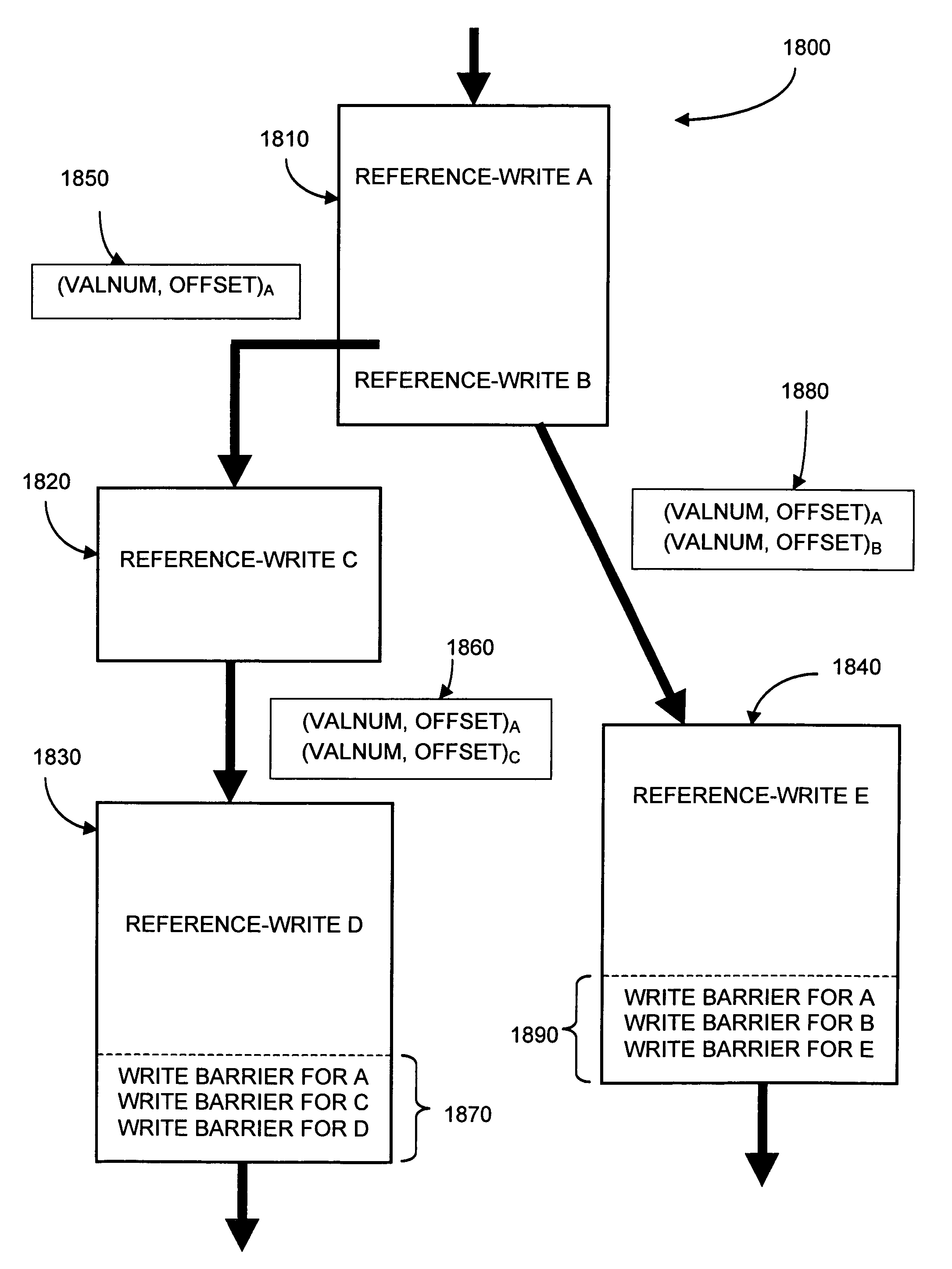

[0093] Conventionally, a compiler emits a write barrier (i.e., write-barrier code, as shown in FIG. 7) at a location in the mutator code immediately following a reference-modifying mutator instruction. In contrast, a compiler embodying the present invention will not always emit a write barrier immediately following its corresponding reference-modifying instruction. Instead, the compiler may defer emitting the write-barrier code until a subsequent location in the mutator code. To that end, the compiler may maintain a list of reference-modifying instructions whose write barriers have been deferred. At some later point in the mutator code, the compiler may emit write barriers based on information stored in the list. Notably, the deferred write-barrier code may be emitted in accordance with various write-barrier implementations, such as sequential store buffers, precise card-marking, imprec
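
The contrast between conventional and deferred emission can be illustrated with a minimal sketch of a compiler pass over one straight-line block of a hypothetical intermediate representation. All names here (`StoreRef`, `Other`, `Barrier`, `may_gc`, `emit_immediate`, `emit_deferred`) are invented for illustration. The barrier is left abstract: it stands for whatever code the chosen scheme (sequential store buffer, card marking, and so on) would emit. The rule deciding when to flush the deferred list is an assumption of this sketch, not something stated in the text. Pending barriers are emitted before any instruction that may start a collection and at the end of the block, so the collector never observes a store whose barrier has not yet run.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass(frozen=True)
class StoreRef:
    """Hypothetical reference-modifying instruction: obj.field = value."""
    obj: str
    field: str
    value: str


@dataclass(frozen=True)
class Other:
    """Any other instruction; may_gc marks points where a collection may start (assumption)."""
    text: str
    may_gc: bool = False


@dataclass(frozen=True)
class Barrier:
    """Abstract write-barrier code for the store to obj.field."""
    obj: str
    field: str


Instr = Union[StoreRef, Other, Barrier]


def emit_immediate(block: List[Instr]) -> List[Instr]:
    """Conventional emission: a barrier directly after each reference-modifying store."""
    out: List[Instr] = []
    for ins in block:
        out.append(ins)
        if isinstance(ins, StoreRef):
            out.append(Barrier(ins.obj, ins.field))
    return out


def emit_deferred(block: List[Instr]) -> List[Instr]:
    """Deferred emission: remember stores in a list and emit their barriers later."""
    out: List[Instr] = []
    pending: List[StoreRef] = []  # stores whose write barriers have been deferred

    def flush() -> None:
        # Emit barrier code for every deferred store, then empty the list.
        for st in pending:
            out.append(Barrier(st.obj, st.field))
        pending.clear()

    for ins in block:
        if isinstance(ins, Other) and ins.may_gc:
            flush()  # assumed rule: barriers must be in place before a possible GC
        out.append(ins)
        if isinstance(ins, StoreRef):
            pending.append(ins)
    flush()  # end of block: emit whatever is still deferred
    return out


# Usage: two stores before a call that may trigger GC, one store after it.
block: List[Instr] = [
    StoreRef("a", "next", "b"),
    Other("i = i + 1"),
    StoreRef("a", "prev", "c"),
    Other("call foo()", may_gc=True),
    StoreRef("b", "next", "a"),
]
for ins in emit_deferred(block):
    print(ins)
```

In this sketch, deferral does not change how many barriers are emitted. It moves them to a common point, after the last store that precedes the call. Gathering the deferred barriers in one place is what would let a later step examine them together rather than one at a time.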