64 patent results for "Parallel computing"

Parallel computing is a type of computation in which many calculations or processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but it has gained broader interest because physical constraints now prevent further frequency scaling. As power consumption (and consequently heat generation) by computers has become a concern in recent years, parallel computing has become the dominant paradigm in computer architecture, mainly in the form of multi-core processors.
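As a rough illustration of dividing a large problem into smaller ones that are solved at the same time, the sketch below applies the same operation to several chunks of a list concurrently (data parallelism). The function names and the choice of four workers are assumptions made for this example only. In CPython, threads do not speed up CPU-bound work because of the global interpreter lock, so a process pool would be the usual choice for real workloads.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(values, n_chunks):
    """Split values into n_chunks contiguous slices of near-equal size."""
    size, extra = divmod(len(values), n_chunks)
    start = 0
    for i in range(n_chunks):
        end = start + size + (1 if i < extra else 0)
        yield values[start:end]
        start = end


def parallel_sum_of_squares(values, n_workers=4):
    """Data parallelism: each worker runs the same operation on its own chunk."""
    def partial(chunk):
        return sum(x * x for x in chunk)

    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        # The partial results are combined once all chunks are done.
        return sum(pool.map(partial, chunked(values, n_workers)))
```

Calling `parallel_sum_of_squares(list(range(10)))` splits the ten values into four chunks, squares and sums each chunk independently, and adds the partial sums.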

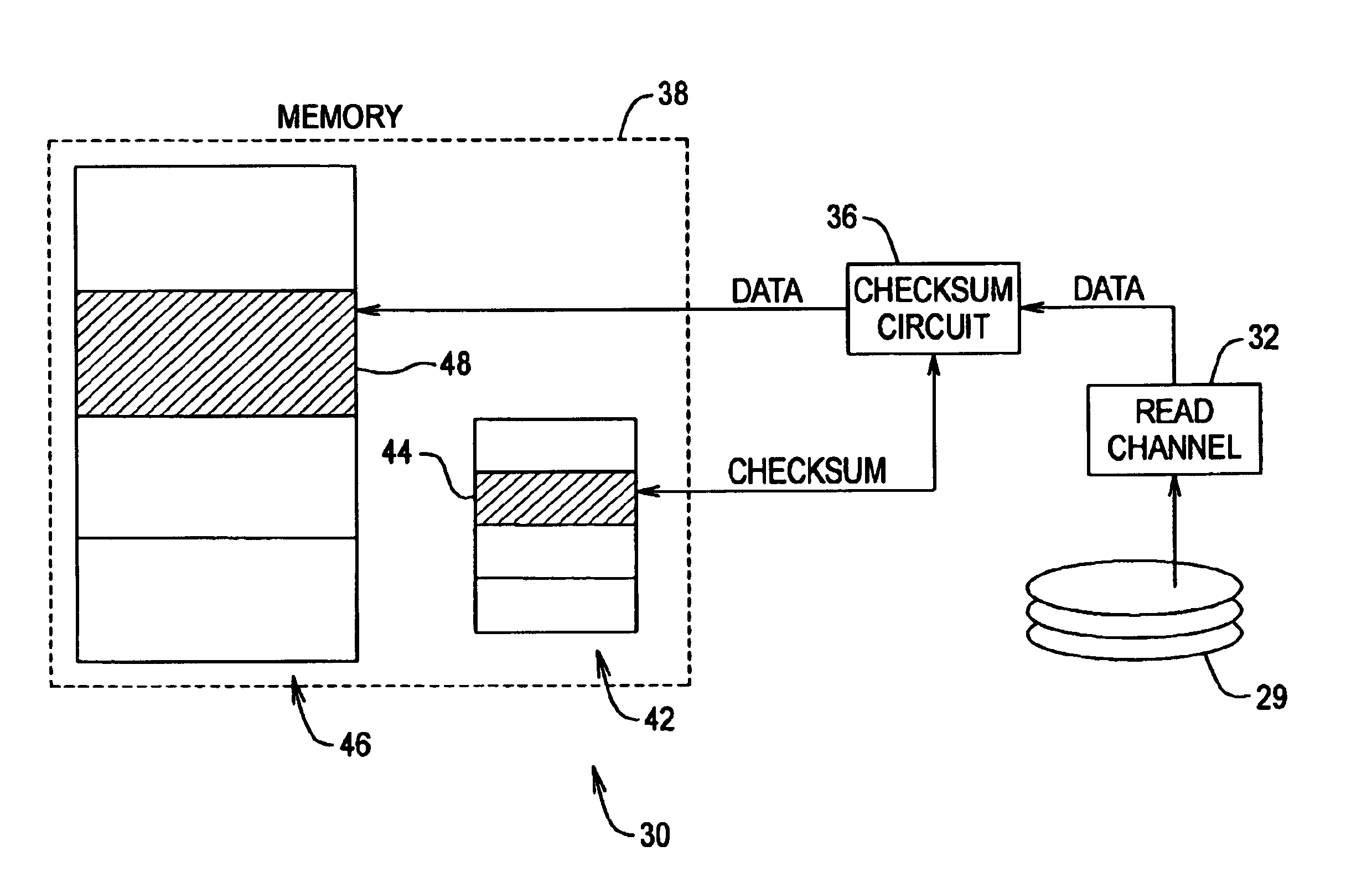

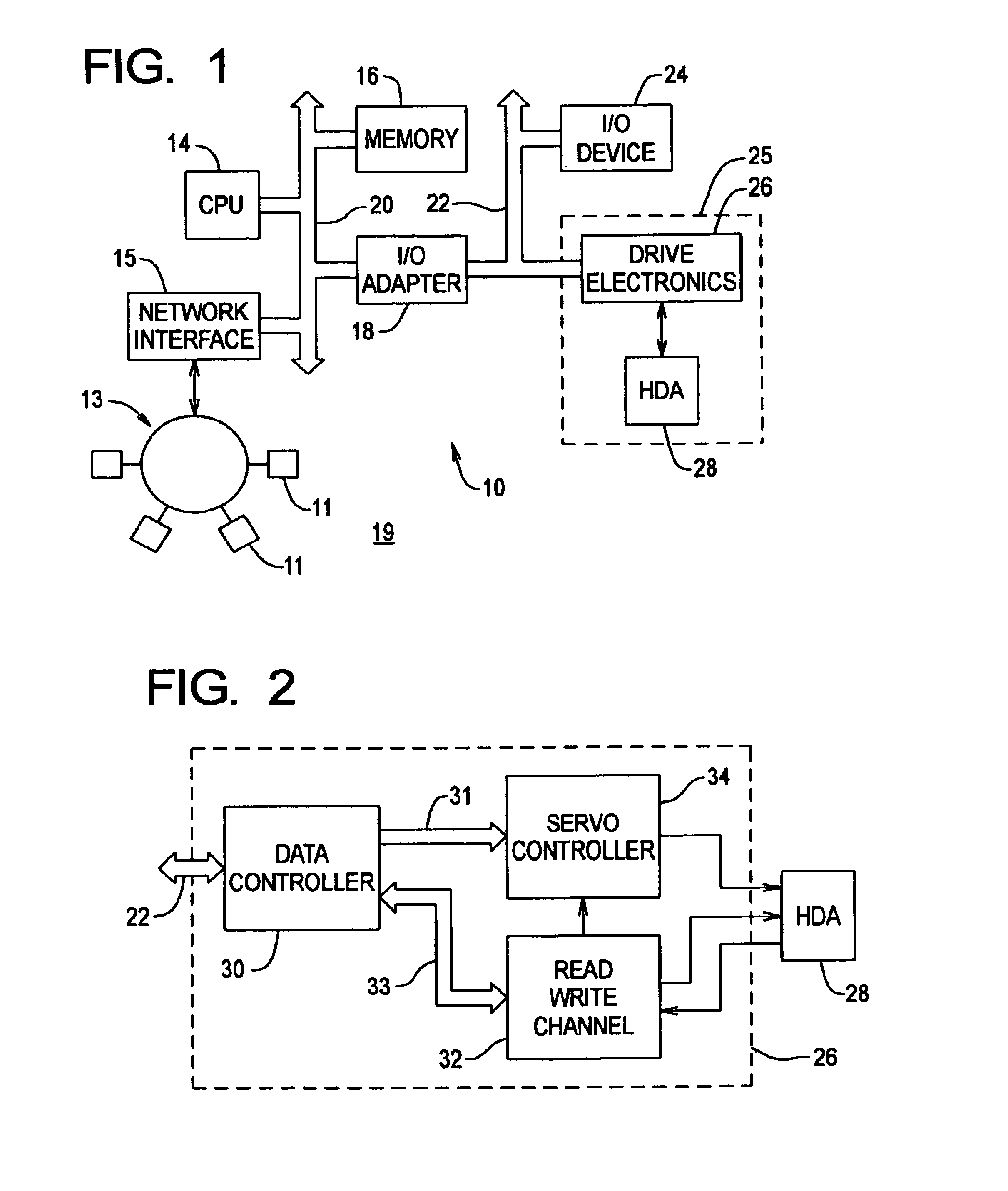

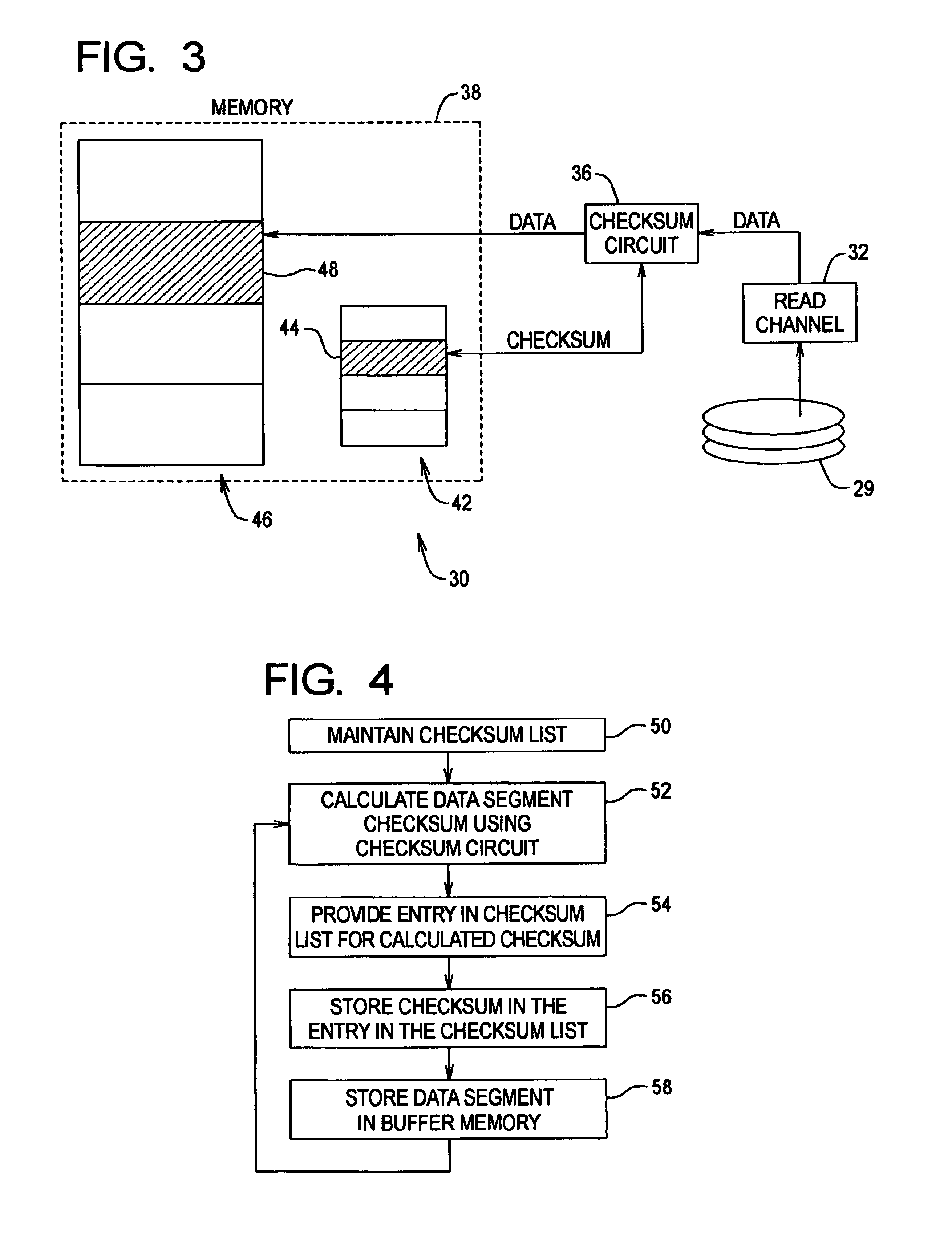

Data checksum method and apparatus

Inactive | US6964008B1 | Reduces memory bandwidth consumed | Reduce data transfer | Code conversion | Coding details | Checksum | Data store

A method and apparatus are provided for generating checksum values for data segments retrieved from a data storage device for transfer into a buffer memory. A checksum list is maintained to contain checksum values, wherein the checksum list includes a plurality of entries corresponding to the data segments stored in the buffer memory, each entry storing a checksum value for a corresponding data segment stored in the buffer memory. For each data segment retrieved from the storage device: a checksum value is calculated for that data segment using a checksum circuit; an entry in the checksum list corresponding to that data segment is selected; the checksum value is stored in the selected entry in the checksum list; and that data segment is stored in the buffer memory. Preferably, the checksum circuit calculates the checksum for each data segment as that data segment is transferred into the buffer memory.
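The bookkeeping described in this abstract can be modelled in software as a buffer with a parallel checksum list, one entry per buffered segment. This is only a sketch: the patent describes a hardware checksum circuit, whereas the example assumes CRC-32 from Python's `zlib` module, and the class name, slot indexing, and `verify` helper are hypothetical additions for illustration.

```python
import zlib


class BufferWithChecksums:
    """Buffer memory plus a checksum list with one entry per segment slot."""

    def __init__(self, n_slots):
        self.buffer = [None] * n_slots     # data segments
        self.checksums = [None] * n_slots  # checksum list, same indexing

    def store(self, slot, segment: bytes):
        # The checksum is computed as the segment is moved into the buffer,
        # so the data does not have to be read back a second time.
        self.checksums[slot] = zlib.crc32(segment)  # assumed checksum function
        self.buffer[slot] = segment

    def verify(self, slot) -> bool:
        """Hypothetical helper: recompute and compare against the list entry."""
        return zlib.crc32(self.buffer[slot]) == self.checksums[slot]
```

Storing the checksum at transfer time is what the abstract's listed benefit, reduced memory bandwidth, appears to rest on: the segment is touched once rather than written and then re-read for checksumming.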

Owner:MAXTOR

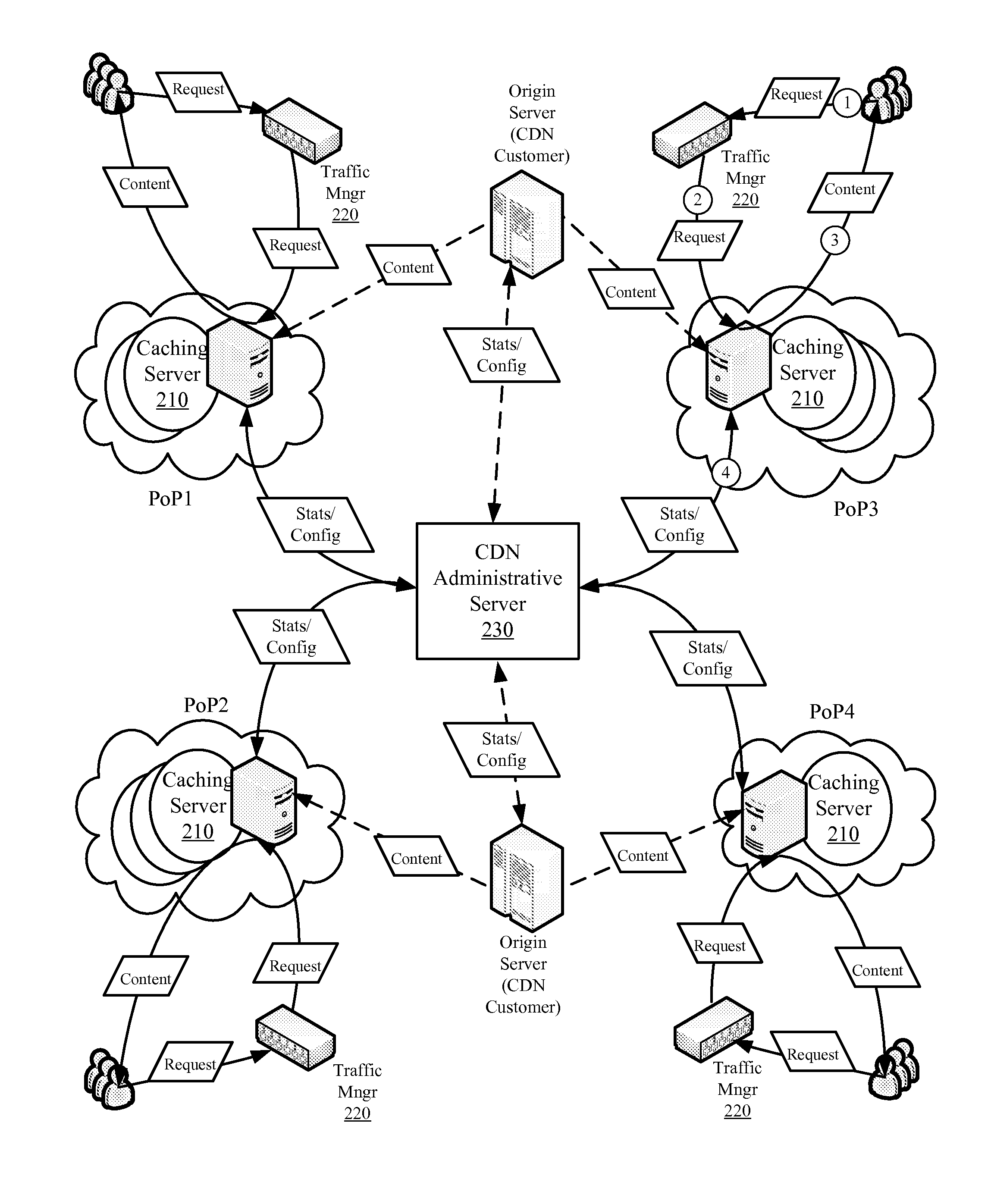

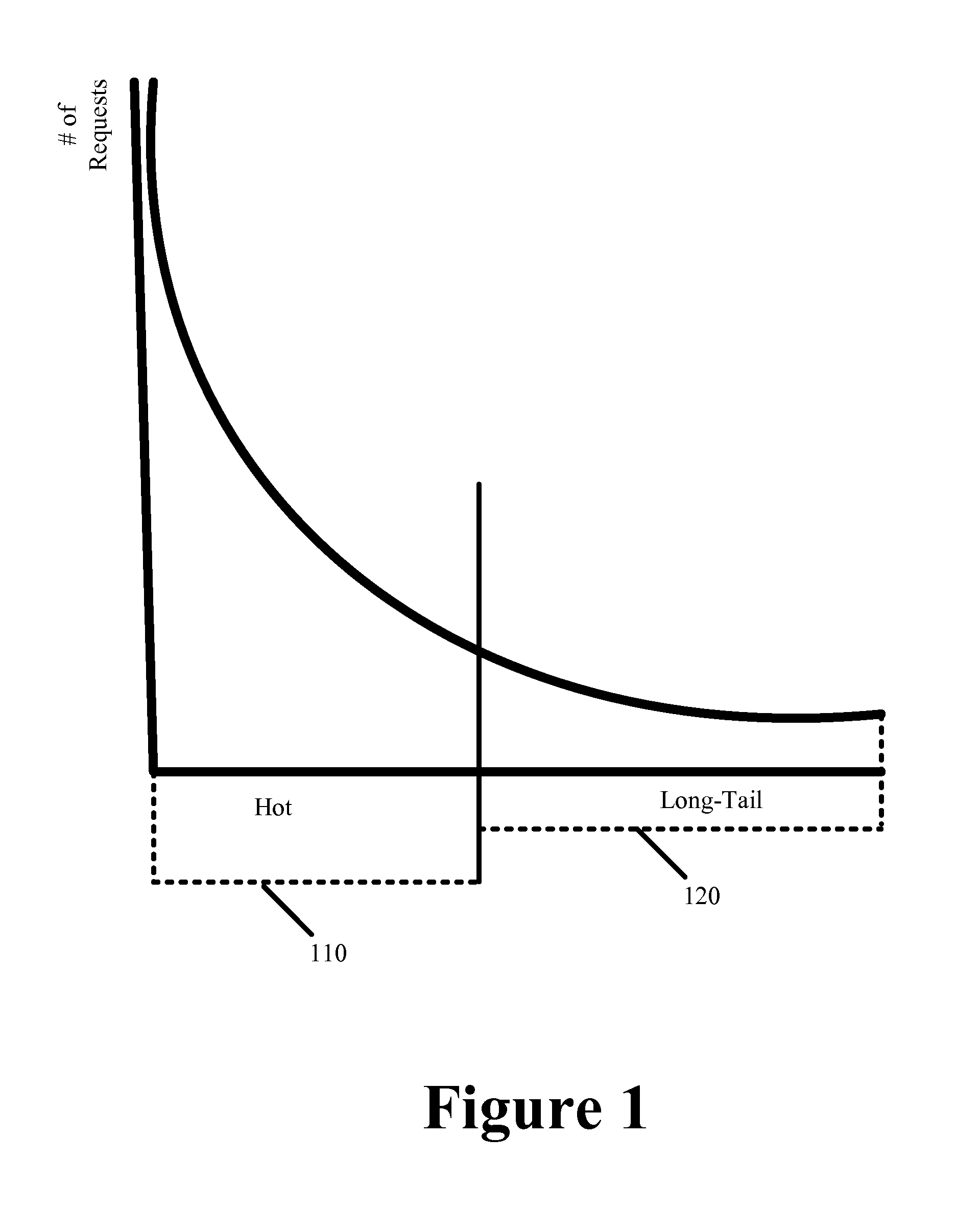

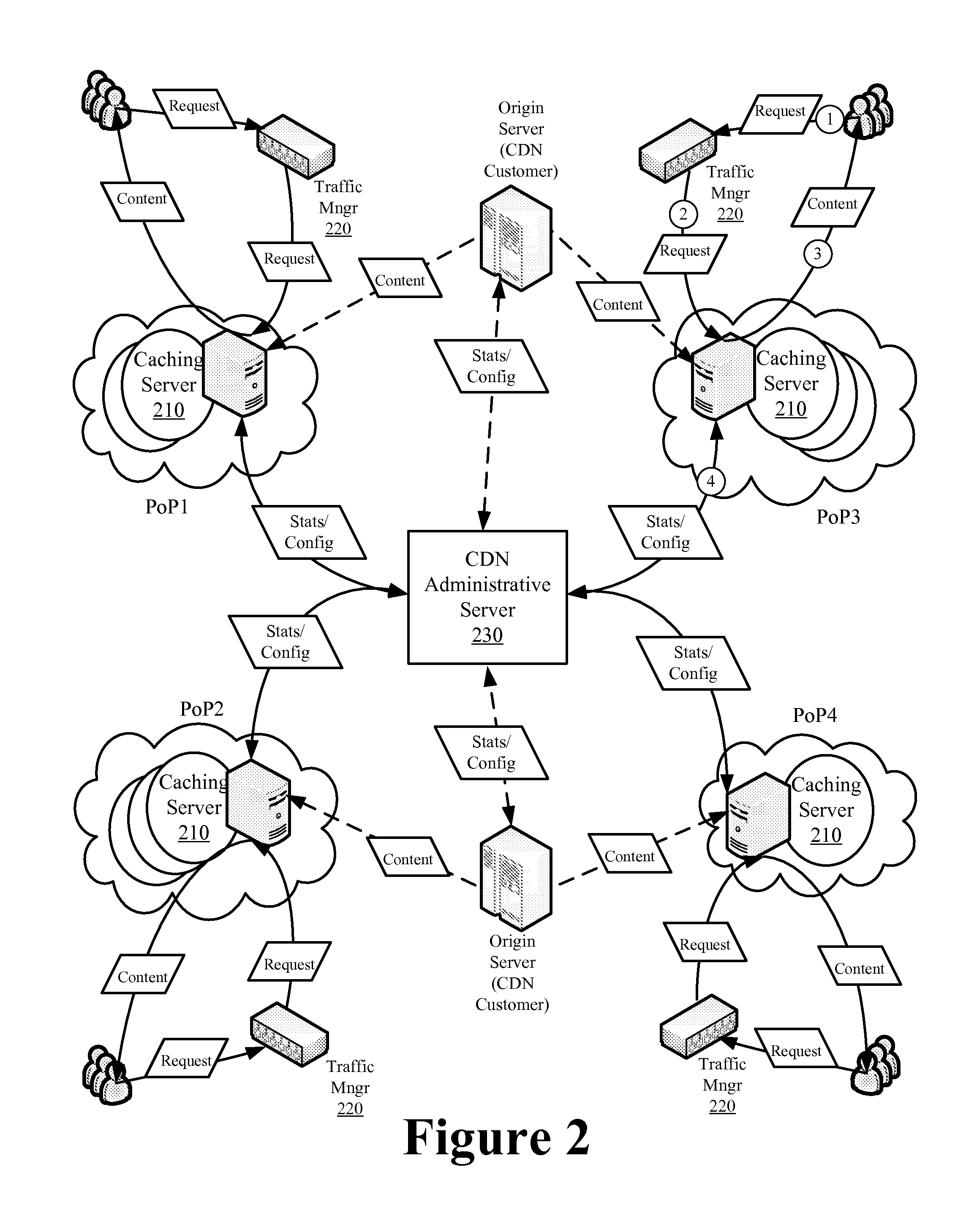

Multi-Layer Multi-Hit Caching for Long Tail Content

Active | US20130227051A1 | Easy to optimize | Minimize impact | Digital computer details | Transmission | Bit array | Parallel computing

Owner:EDGIO INC

Method for planning code word in sector of TD-SCDMA system

Inactive | CN1728622A | Reduce the impact of interference | Improve anti-interference ability | Code division multiplex | Radio transmission for post communication | Parallel computing | Synchronization code division multiple access

The method comprises the following steps: (1) calculate and store the degree of interference between each scrambling code group and every other scrambling code group, where the degree of interference indicates the degree of cross-correlation between two scrambling code groups; (2) find the assignment of scrambling code groups to sectors that gives the minimal total degree of interference in the TD-SCDMA system; (3) using the correspondence between scrambling code groups and downlink pilot codes, determine the downlink pilot code for each sector from its assigned scrambling code group, and select a scrambling code for that sector. By accounting for the interference caused by correlation between composite codes, the invention improves the system's anti-interference capability and reduces the effect of same-frequency inter-code interference on TD-SCDMA system capacity.
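Steps (1) and (2) amount to a minimum-cost assignment over a precomputed interference matrix. The sketch below is a toy version under stated assumptions: the abstract does not say how total interference is summed or searched, so the example sums interference over a hypothetical list of neighbouring sector pairs and uses brute-force search, which is only practical for very small inputs. Step (3), the pilot-code and scrambling-code selection, is omitted.

```python
from itertools import permutations


def plan_code_groups(interference, neighbours, n_sectors):
    """Assign a distinct code group to each sector, minimising total interference.

    interference[g][h] -- precomputed interference between groups g and h (step 1)
    neighbours         -- hypothetical list of (sector_a, sector_b) pairs
    """
    n_groups = len(interference)
    best_cost, best_assignment = None, None
    # Brute force over all assignments (step 2); an assumption, not the patent's search.
    for assignment in permutations(range(n_groups), n_sectors):
        cost = sum(interference[assignment[a]][assignment[b]] for a, b in neighbours)
        if best_cost is None or cost < best_cost:
            best_cost, best_assignment = cost, assignment
    return best_assignment, best_cost
```

With a 3-by-3 matrix and sectors arranged in a line (`neighbours = [(0, 1), (1, 2)]`), the function returns the tuple of group indices per sector together with the summed interference of that plan.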

Owner:CHINA ACAD OF TELECOMM TECH

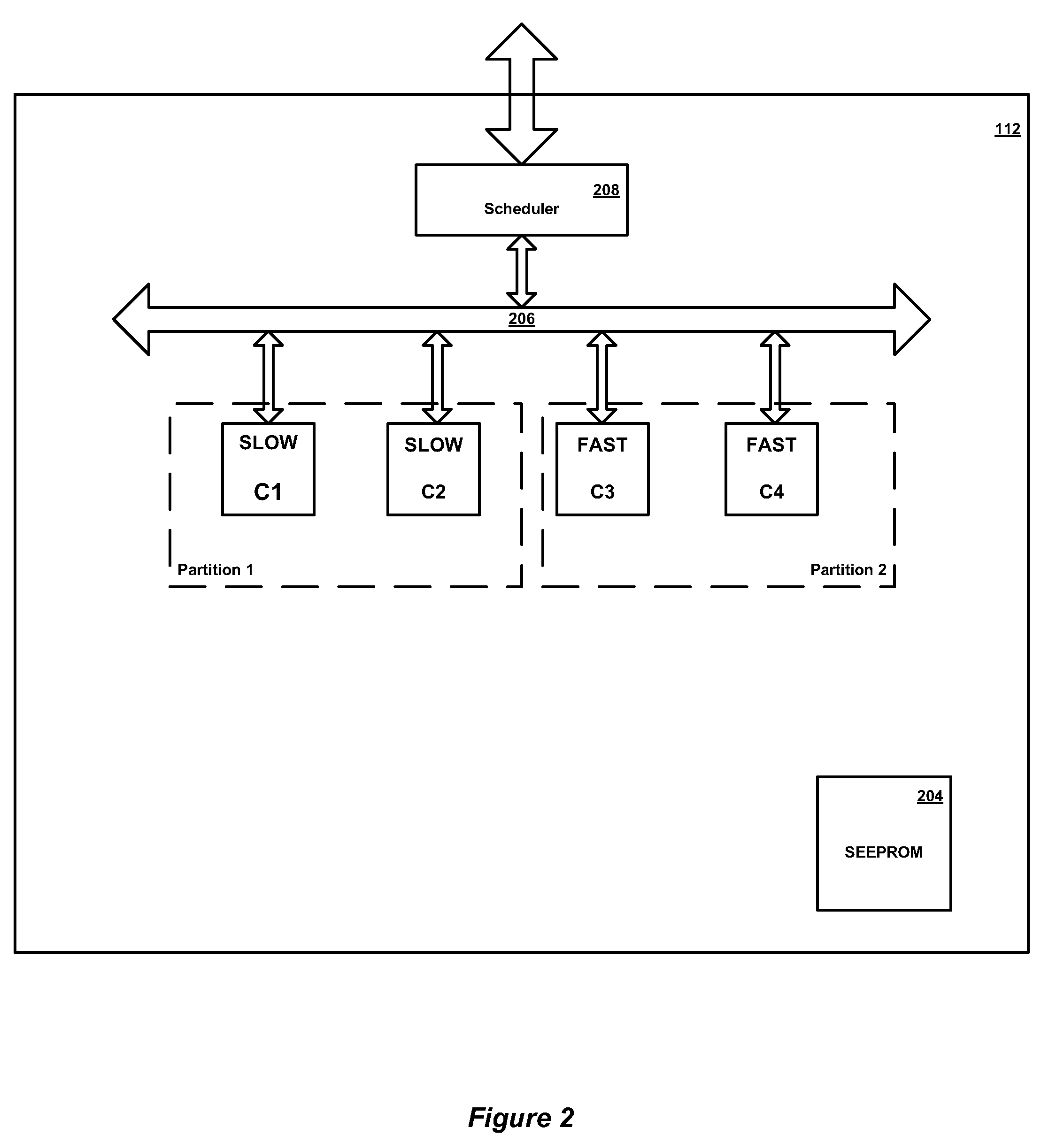

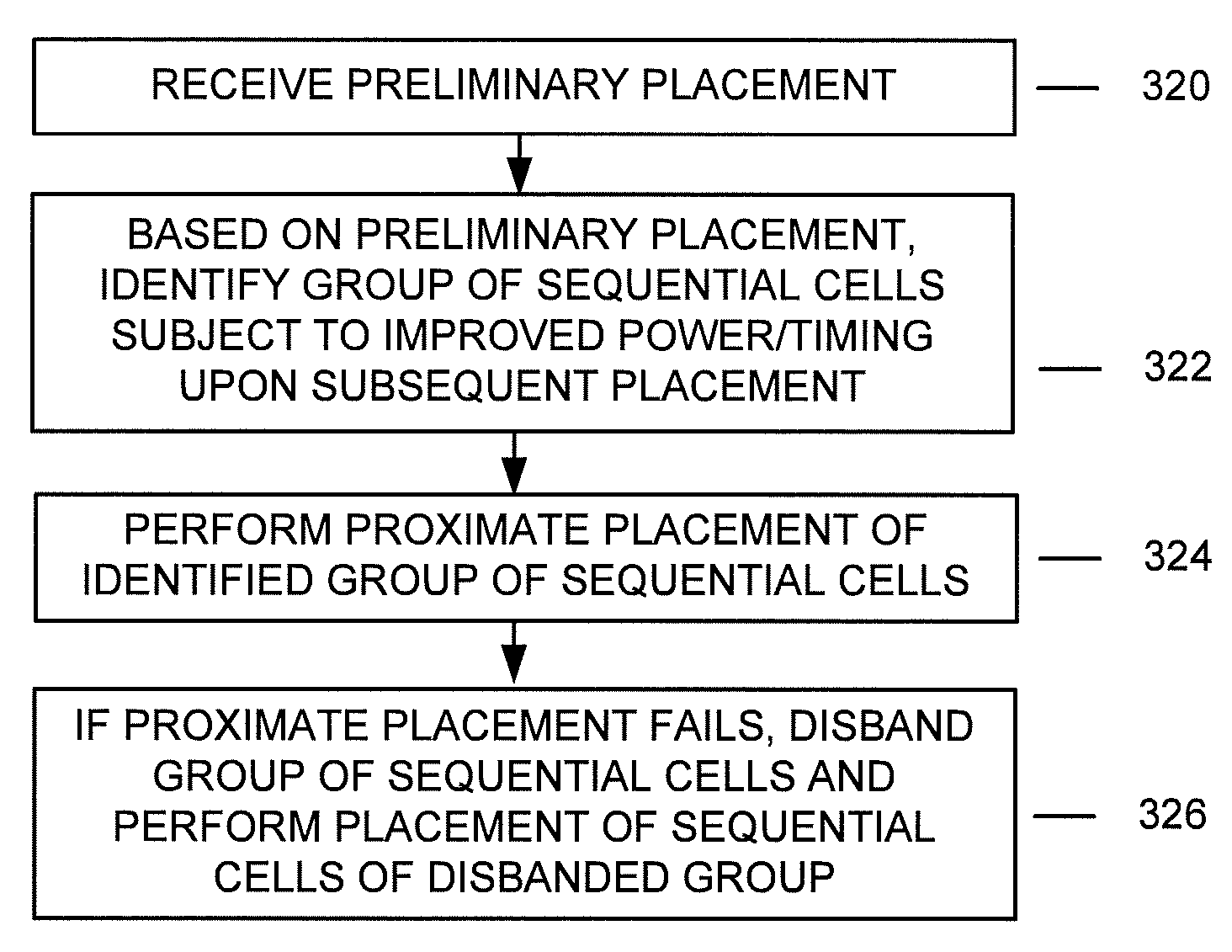

Method and Apparatus for Proximate Placement of Sequential Cells

Active | US20100031214A1 | Increased power consumption | Increased timing variation | CAD circuit design | Special data processing applications | Proximate | Parallel computing

Owner:SYNOPSYS INC

Acceleration of bitstream decoding

Active | US7286066B1 | Improve performance | Reduce the number of executions | Code conversion | Parallel computing | Execution unit

Described are methods and systems for variable length decoding. A first execution unit executes a first single instruction that optionally reverses the order of bits in an encoded bitstream. A second execution unit executes a second single instruction that extracts a specified number of bits from the bitstream produced by the first execution unit. A third execution unit executes a third single instruction that identifies a number of consecutive zero bit values at the head of the bitstream produced by the first execution unit. The outputs of the first, second and third execution units are used in a process that decodes the encoded bitstream.
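To see why these three primitives are useful together, consider decoding an unsigned Exp-Golomb code, a variable-length code used in video standards such as H.264. The abstract does not name a specific code, so Exp-Golomb is an assumption chosen for illustration, and modelling the bitstream as a string of '0' and '1' characters is purely for readability; the patent describes single hardware instructions, not Python functions.

```python
def reverse_bits(bits: str) -> str:
    """Primitive 1: reverse the bit order of the stream (optional in the abstract)."""
    return bits[::-1]


def extract_bits(bits: str, n: int) -> int:
    """Primitive 2: read the first n bits of the stream as an unsigned integer."""
    return int(bits[:n], 2) if n else 0


def count_leading_zeros(bits: str) -> int:
    """Primitive 3: number of consecutive zero bits at the head of the stream."""
    return len(bits) - len(bits.lstrip("0"))


def decode_exp_golomb(bits: str):
    """Decode one unsigned Exp-Golomb codeword; return (value, remaining bits)."""
    zeros = count_leading_zeros(bits)
    width = 2 * zeros + 1                    # k zeros followed by k + 1 payload bits
    value = extract_bits(bits, width) - 1    # leading zeros do not change the integer
    return value, bits[width:]
```

The leading-zero count gives the codeword length, and a single bit extraction then yields the value, so one codeword costs a handful of operations instead of a bit-by-bit loop.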

Owner:NVIDIA CORP

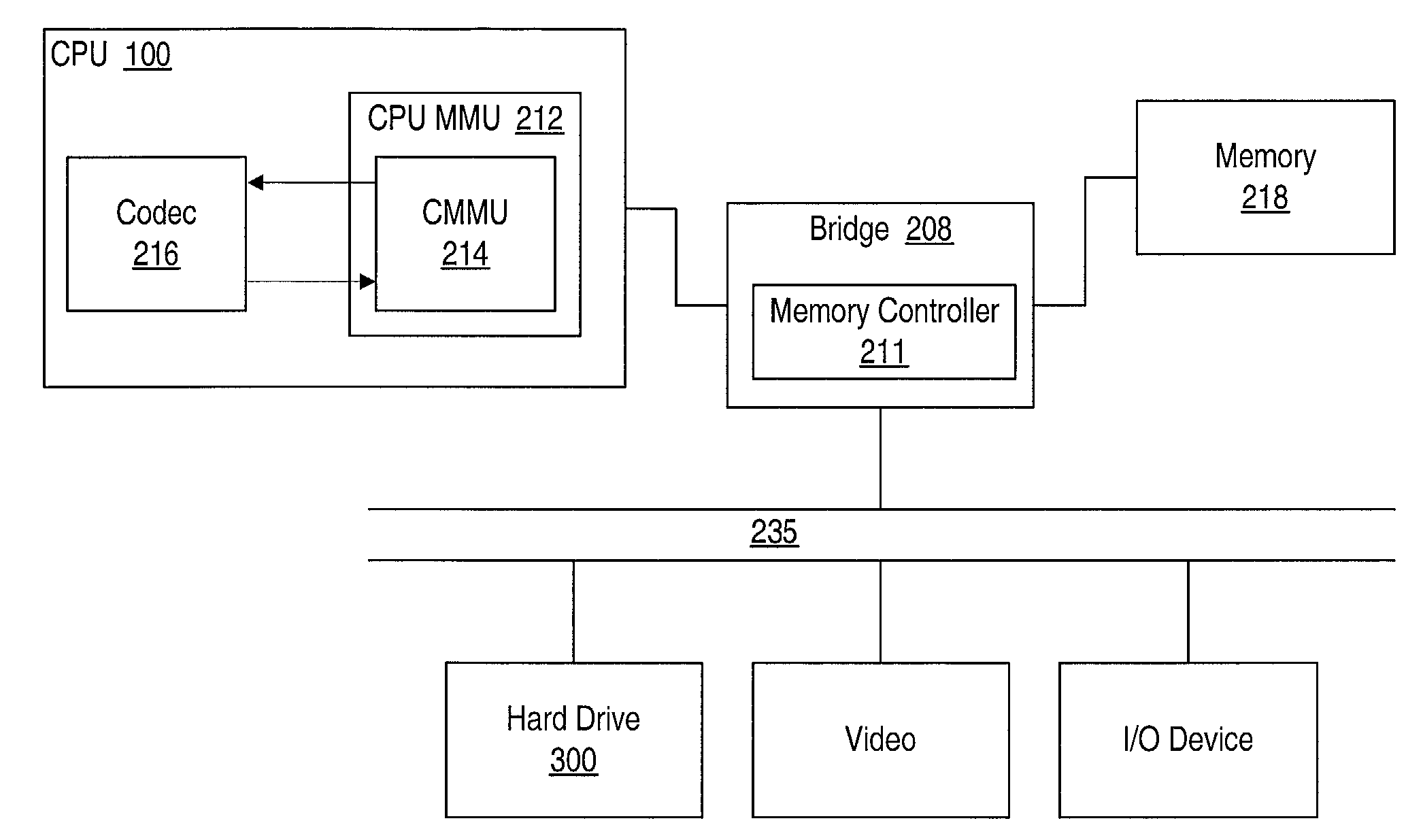

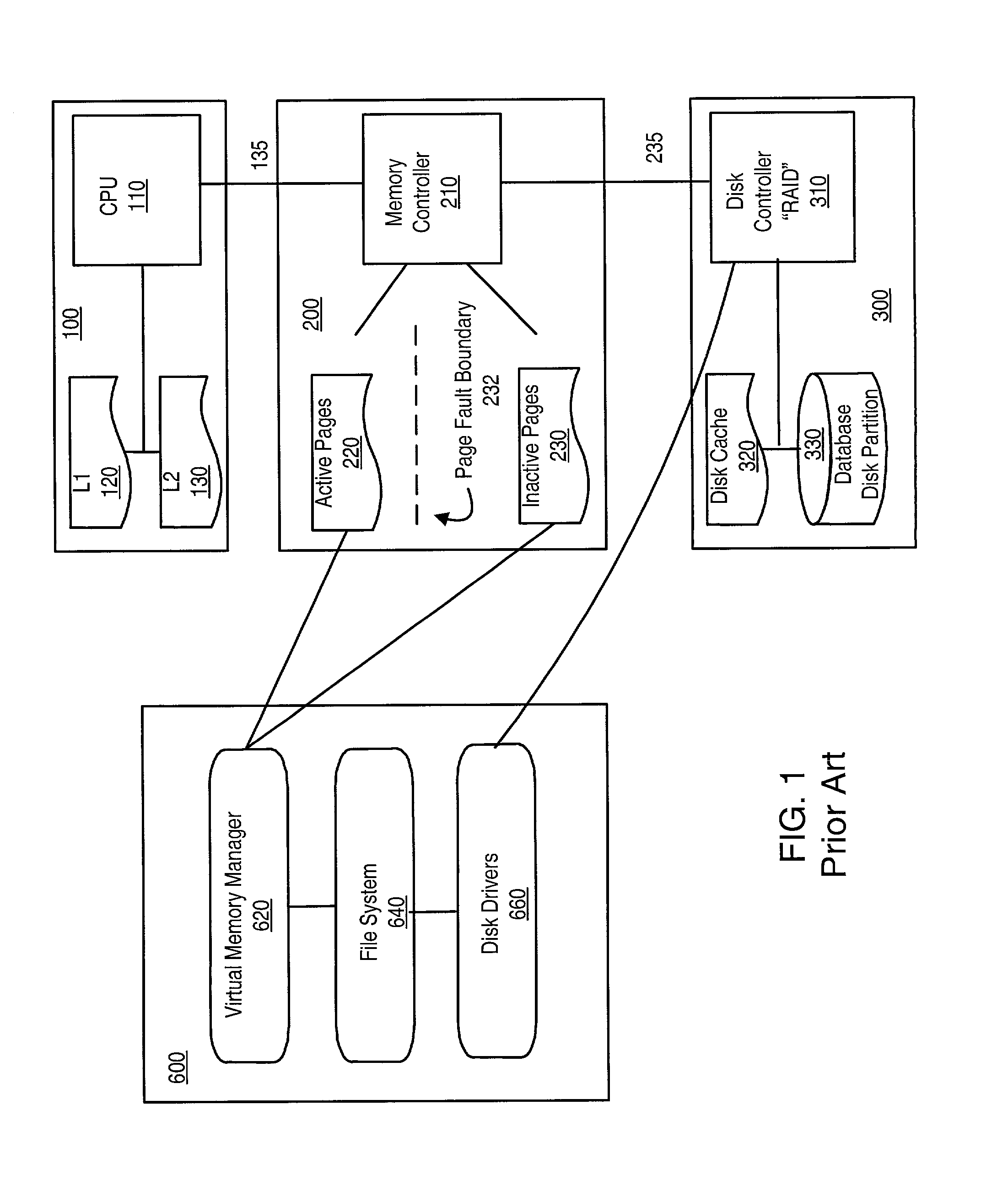

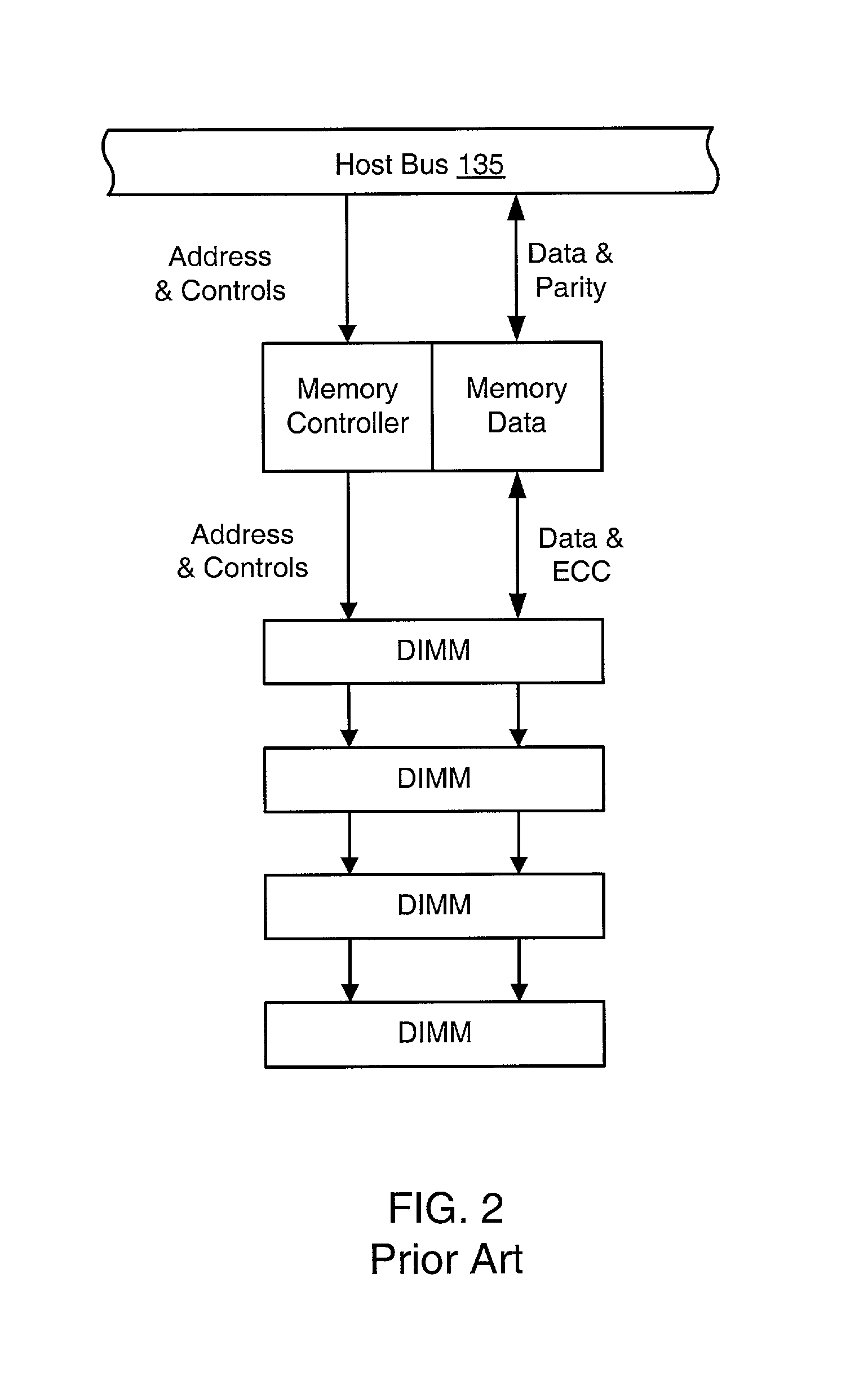

System and method for managing compression and decompression of system memory in a computer system

Inactive | US7047382B2 | Easy to use | Reduce data bandwidth | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Management unit | Parallel computing

Owner:MOSSMAN HLDG

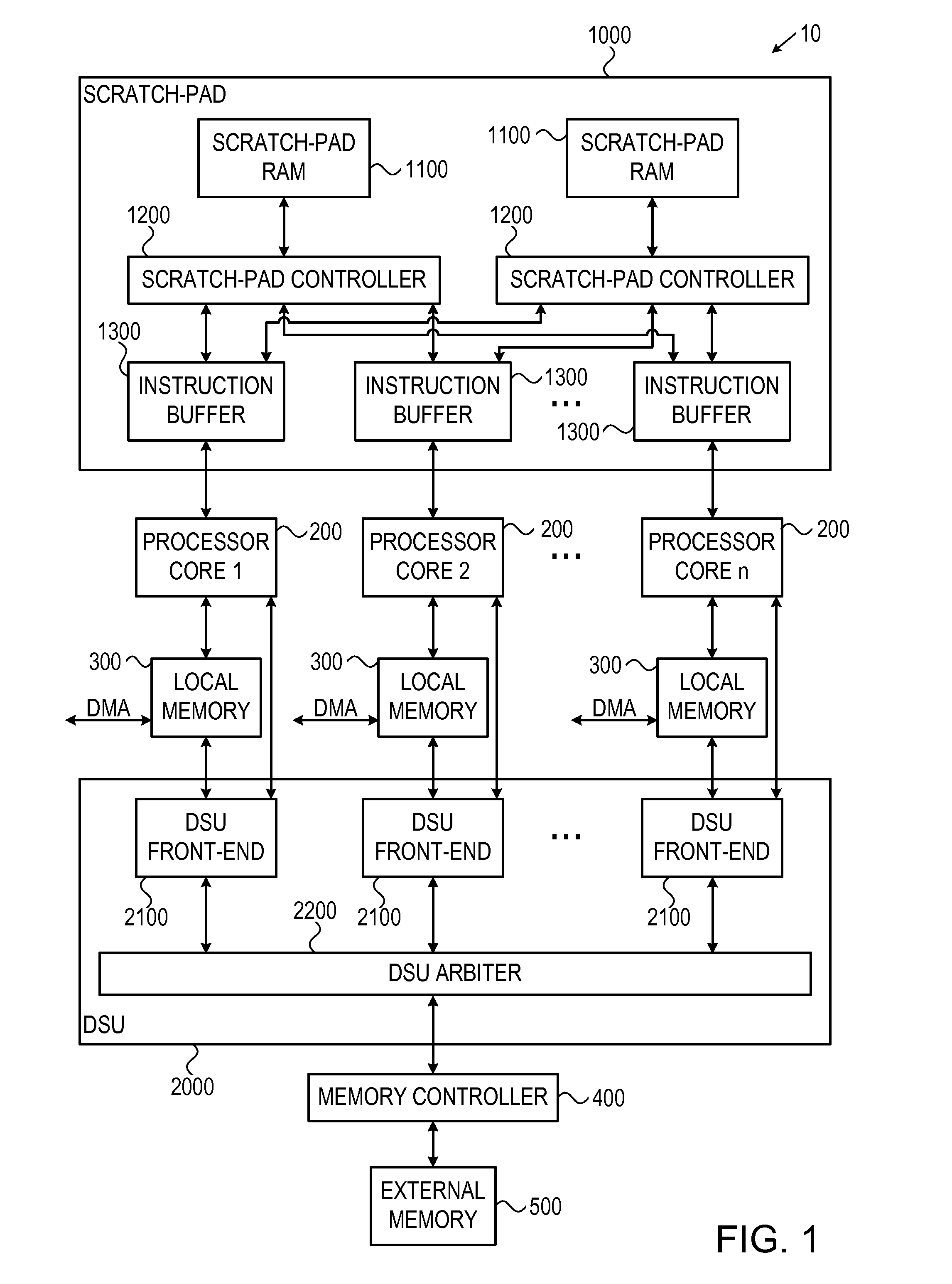

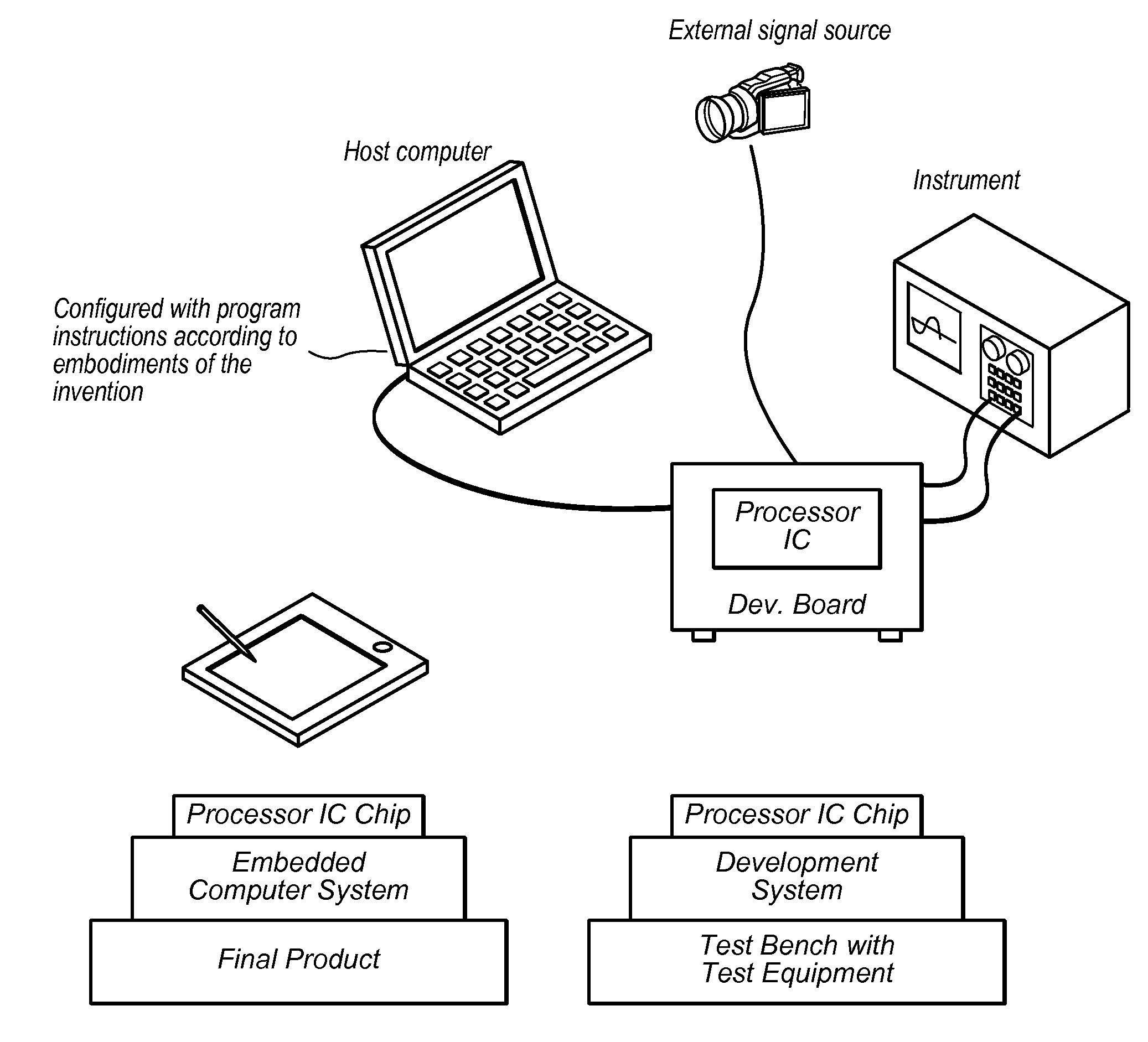

Multiprocessor system-on-a-chip for machine vision algorithms

Active | US20120042150A1 | Program control using stored programs | General purpose stored program computer | Machine vision | Data stream

Owner:APPLE INC

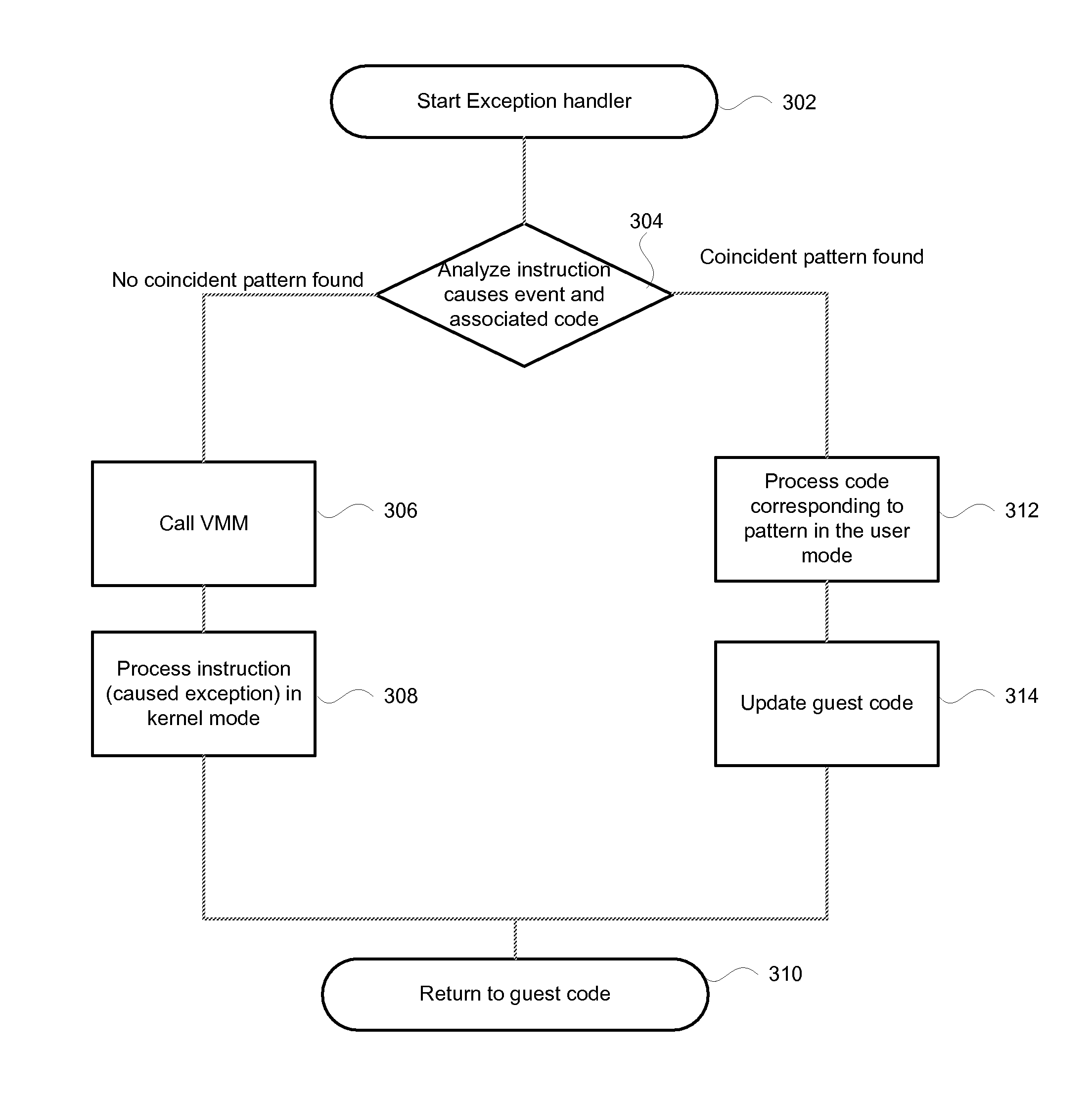

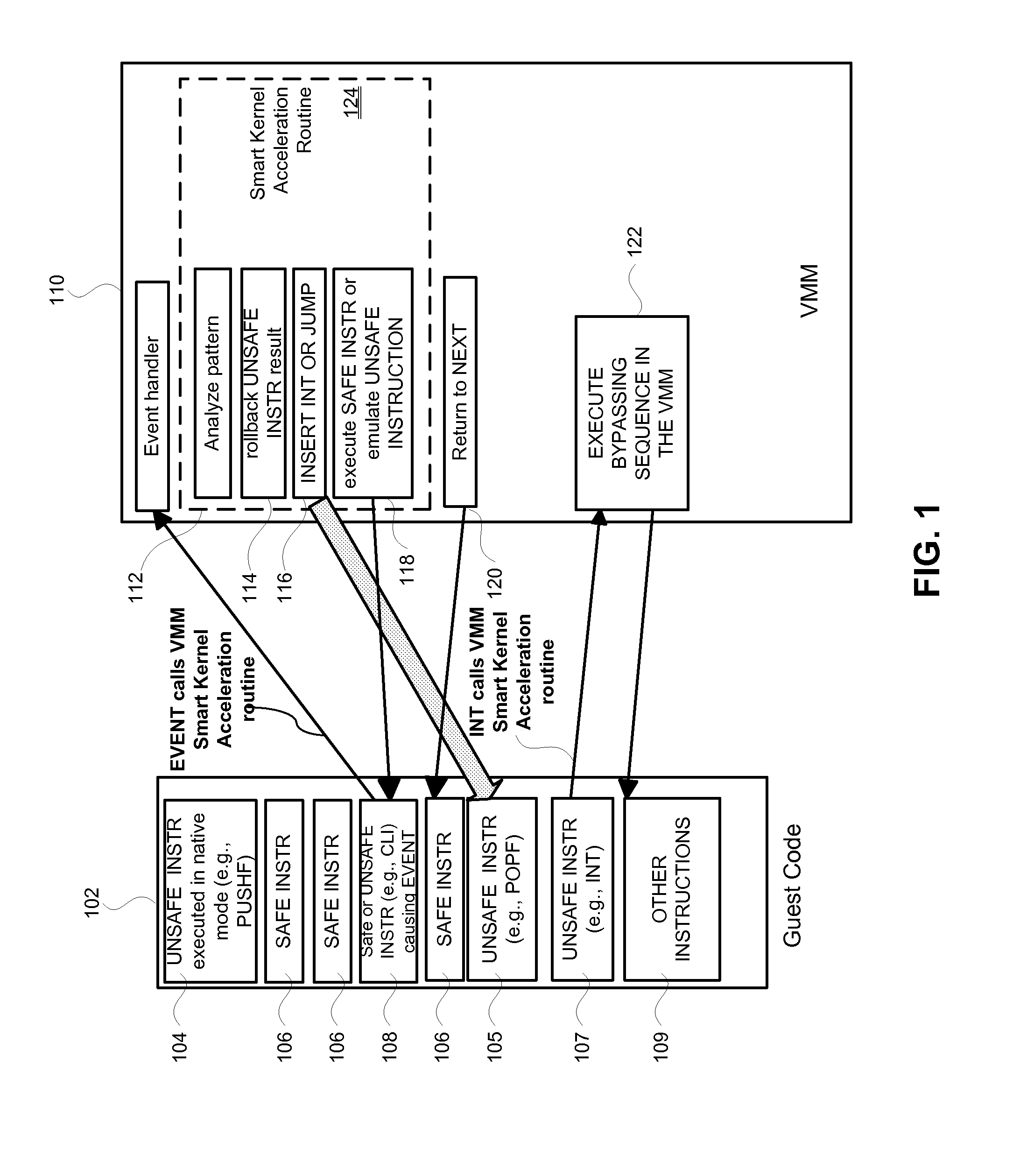

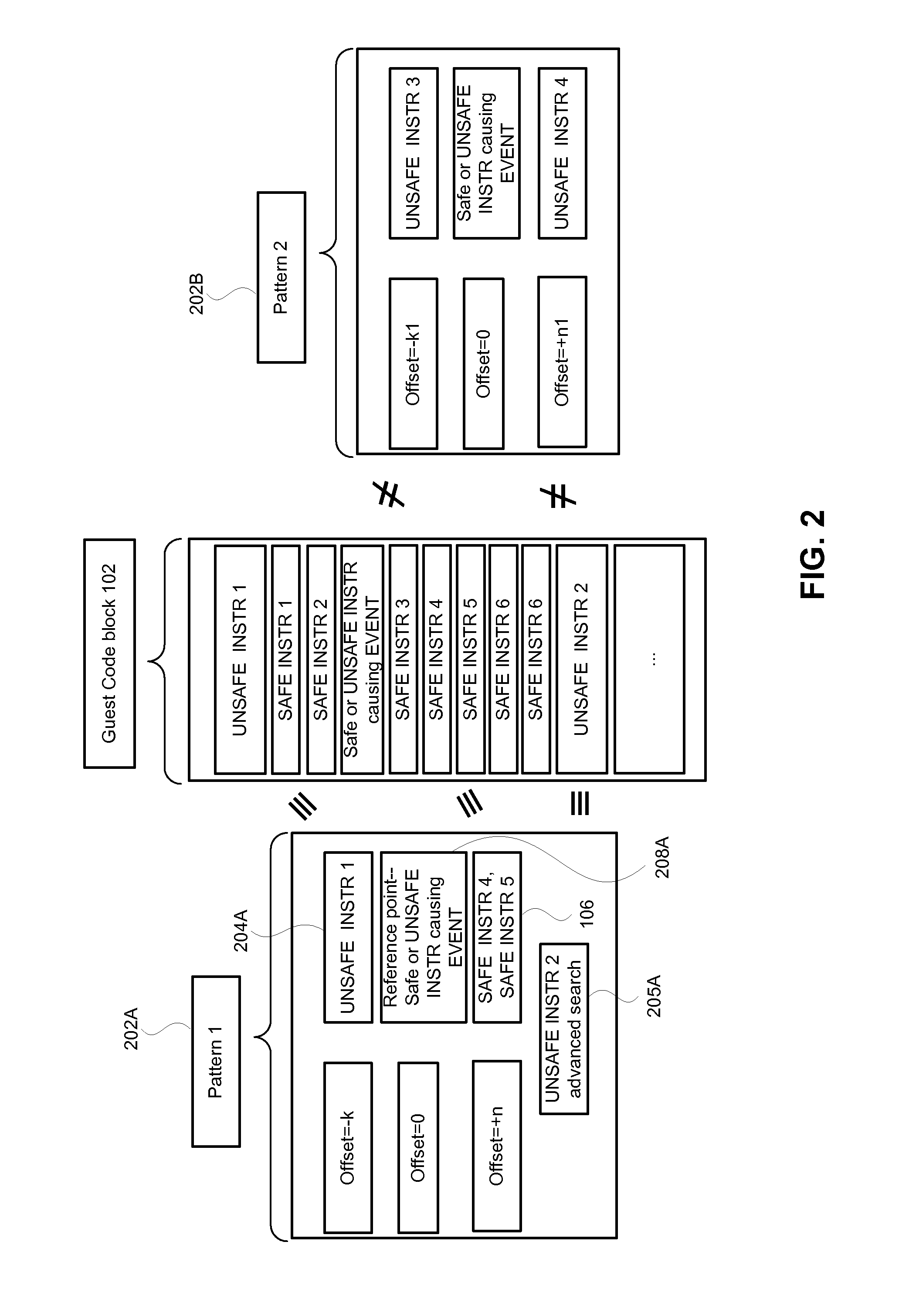

Kernel acceleration technology for virtual machine optimization

Owner:PARALLELS INT GMBH

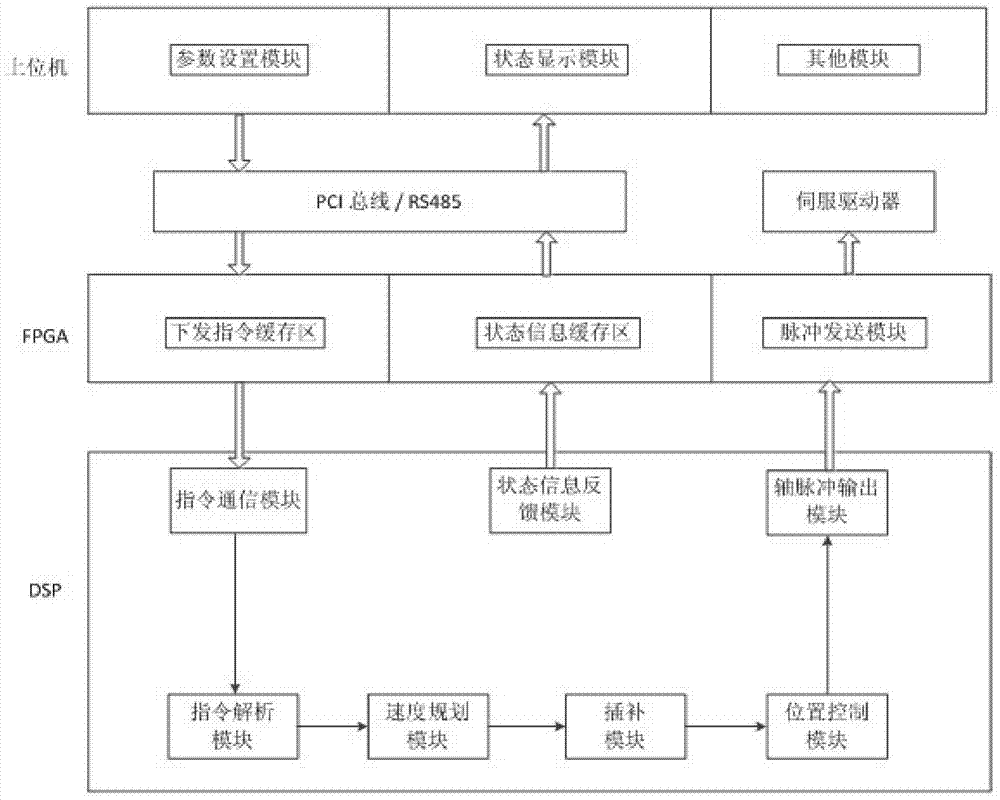

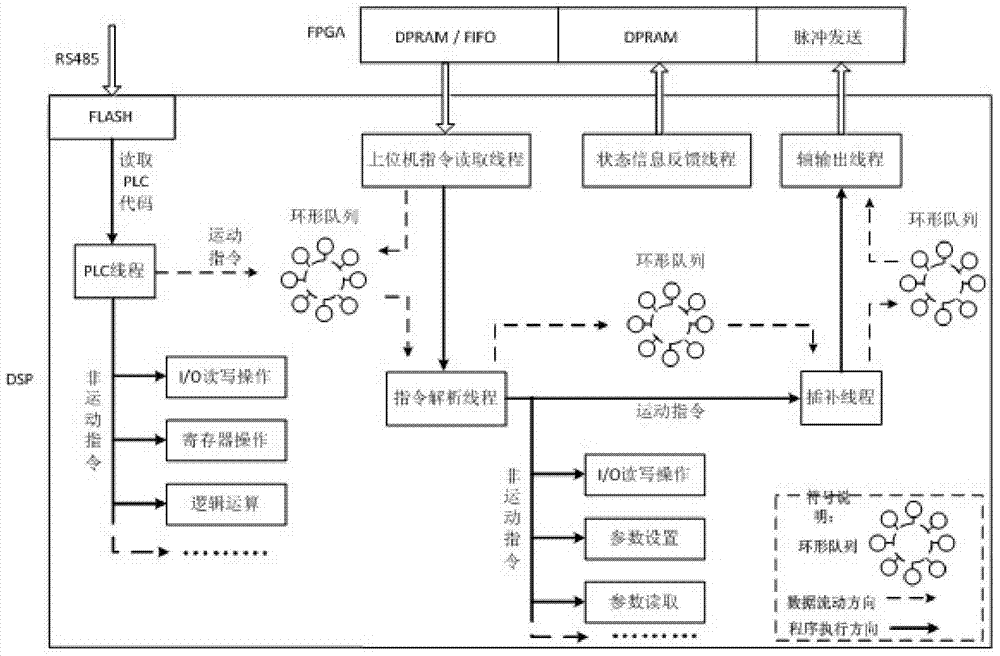

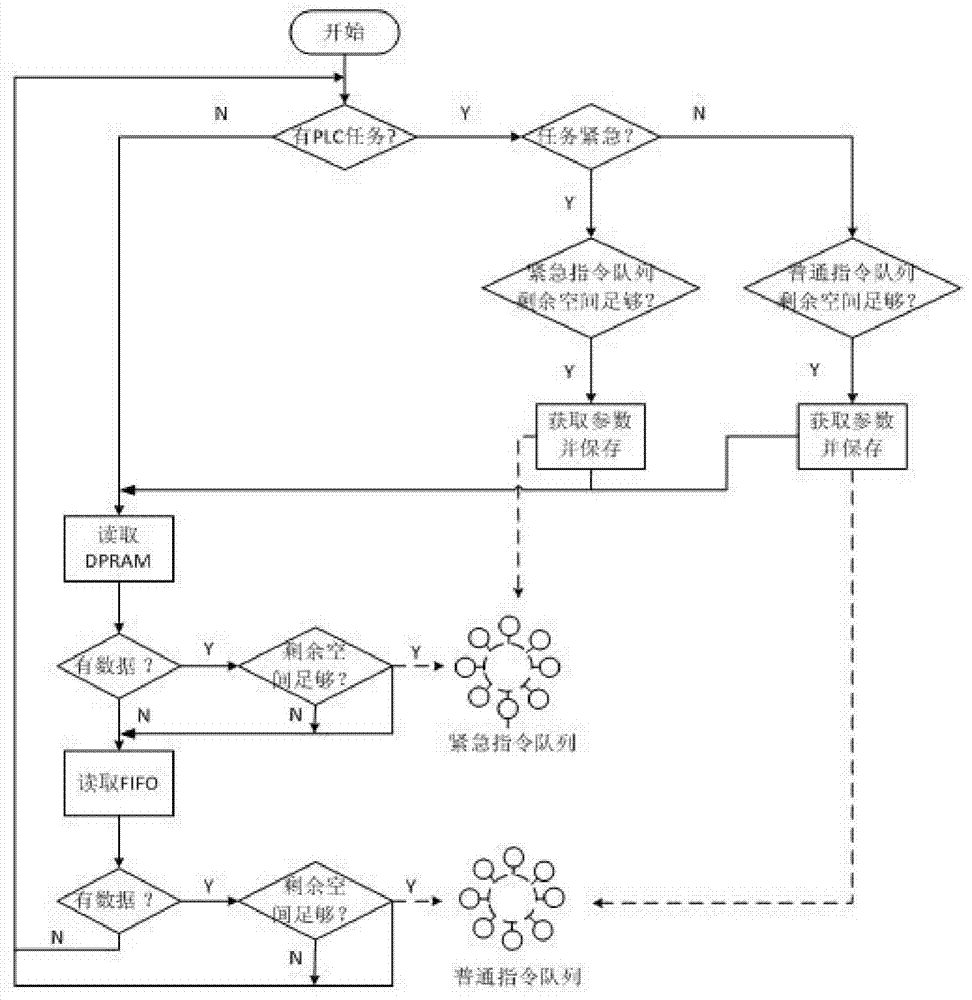

Control method of multi-axis motion card control system

Inactive | CN103941649A | Guaranteed continuity | Achieving a modular design | Numerical control | Automatic control | Control system

Owner:东莞市升力智能科技有限公司

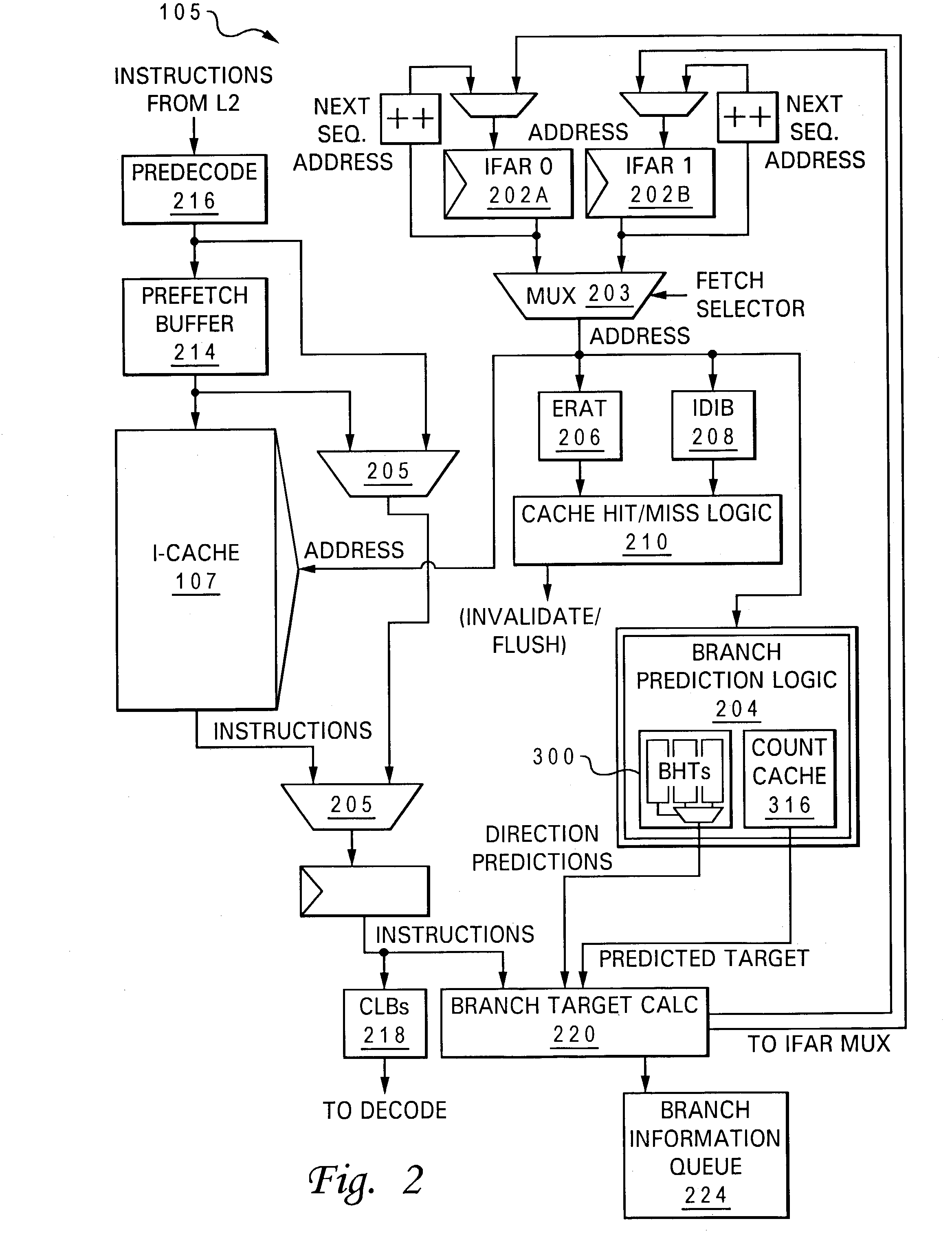

Thread-specific branch prediction by logically splitting branch history tables and predicted target address cache in a simultaneous multithreading processing environment

Inactive | US7120784B2 | Promote results | Accelerates branch prediction | Memory addressing/allocation/relocation | Multiprogramming arrangements | Array data structure | Parallel computing

Owner:IBM CORP

Processor including fallback branch prediction mechanism for far jump and far call instructions

Inactive | US20050210224A1 | Digital computer details | Next instruction address formation | Parallel computing | Address generator

A method and apparatus are provided for processing far jump-call branch instructions within a processor in a manner that reduces the number of stalls of the processor pipeline. The processor includes an apparatus for providing a fallback far jump-call speculative target address that corresponds to a current far jump-call branch instruction. The apparatus includes a far jump-call branch target buffer and a fallback speculative target address generator. The far jump-call branch target buffer stores a plurality of code segment bases and offsets corresponding to a plurality of previously executed far jump-call branch instructions, and determines whether it contains a hit for the current far jump-call branch instruction. The fallback speculative target address generator is coupled to the far jump-call branch target buffer. In the event of a miss in the far jump-call branch target buffer, the fallback speculative target address generator generates the fallback far jump-call speculative target address from a current code segment base and a current offset, the current offset corresponding to the current far jump-call branch instruction.
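The hit-or-fallback behaviour can be modelled with a small lookup table. This is a sketch under assumptions: the abstract does not say how the buffer is indexed, so the example keys it by instruction address, and it forms the target as base plus offset in the usual segmented-addressing way. The class and method names are hypothetical.

```python
class FarJumpPredictor:
    """Toy model of a far jump-call branch target buffer with a fallback path."""

    def __init__(self):
        # Assumed indexing: instruction address -> (code segment base, offset)
        self.btb = {}

    def update(self, instr_addr, cs_base, offset):
        """Record the resolved target of an executed far jump or far call."""
        self.btb[instr_addr] = (cs_base, offset)

    def predict(self, instr_addr, current_cs_base, current_offset):
        """Return (speculative target address, hit flag)."""
        entry = self.btb.get(instr_addr)
        if entry is not None:
            base, offset = entry
            return base + offset, True
        # Miss: fall back to the current code segment base plus the
        # offset carried by the current instruction.
        return current_cs_base + current_offset, False
```

On a miss the pipeline still gets a speculative target to fetch from, which is how the scheme aims to avoid stalling while the real target is resolved.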

Owner:IP FIRST

Determining regions when performing intra block copying

Owner:QUALCOMM INC

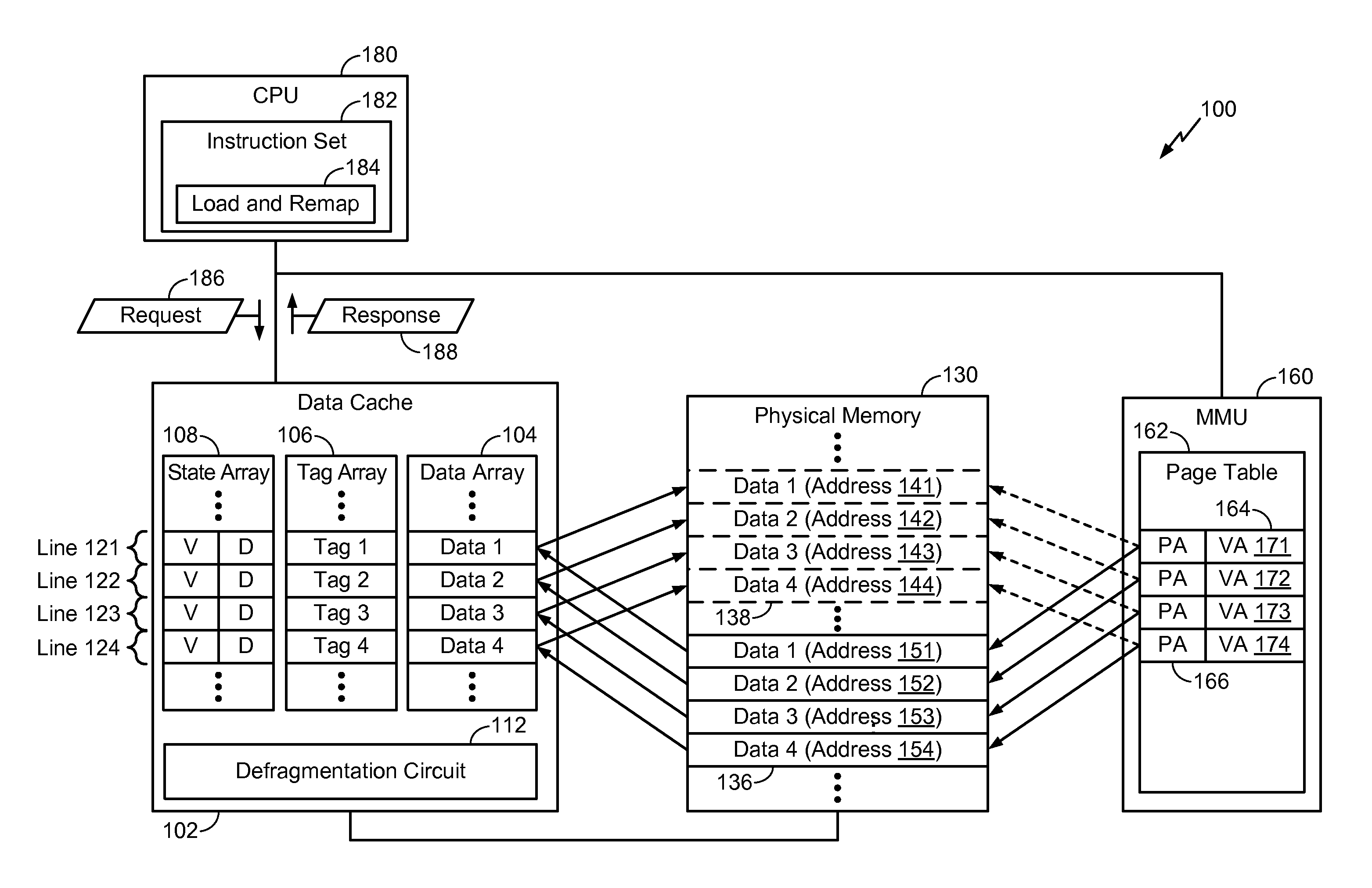

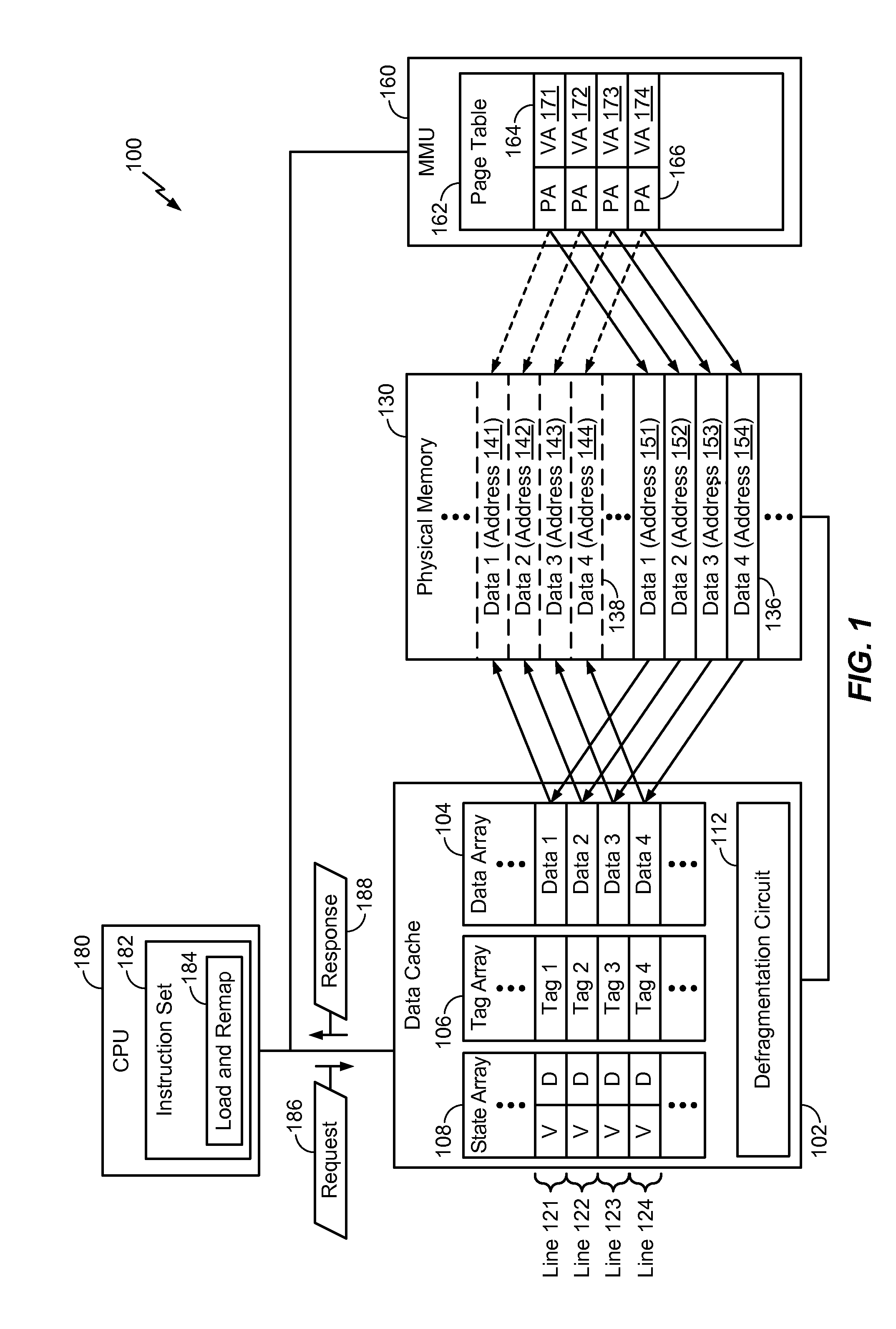

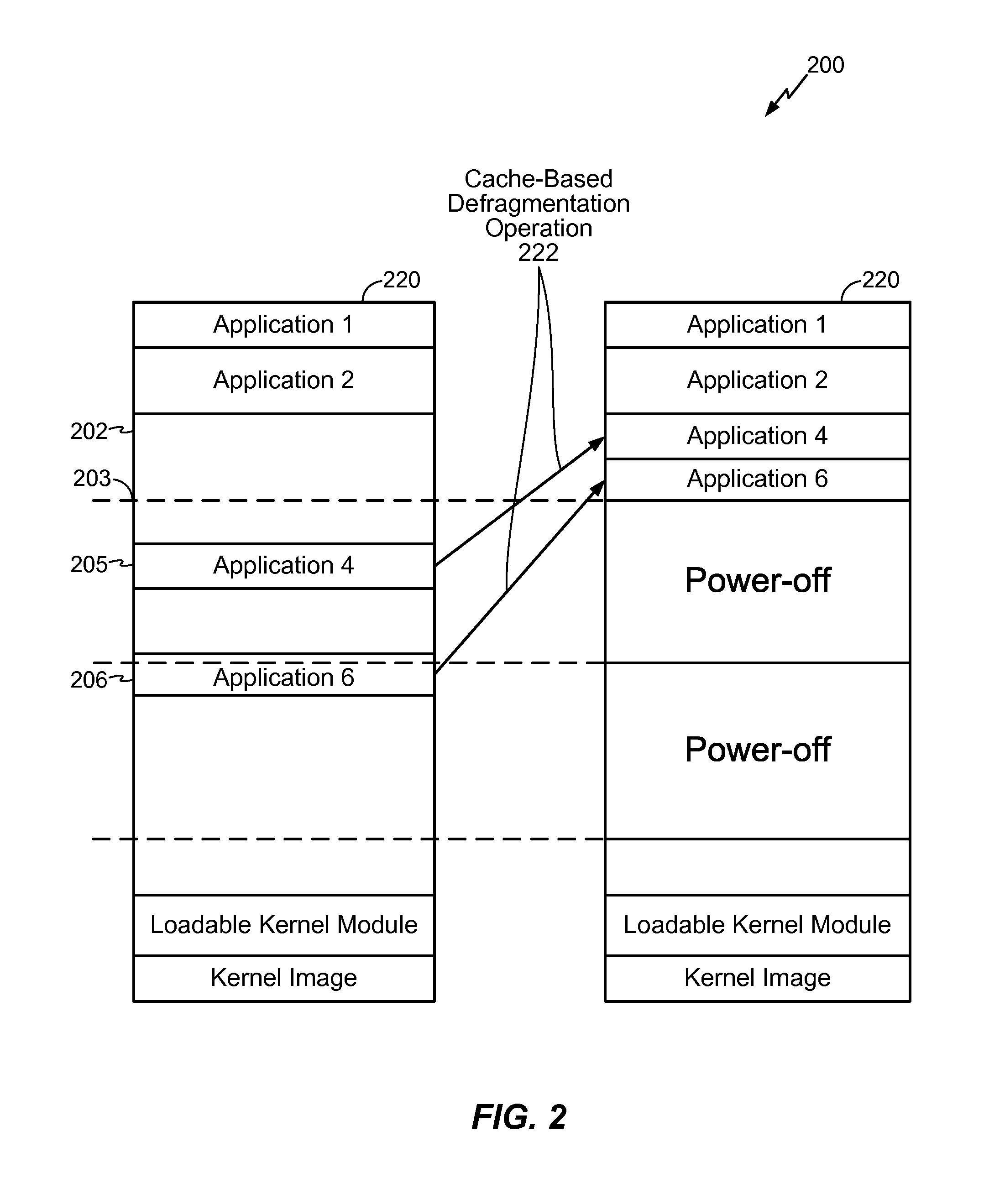

System and method to defragment a memory

Active | US20150186279A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Memory address | Virtual memory

Owner:QUALCOMM INC

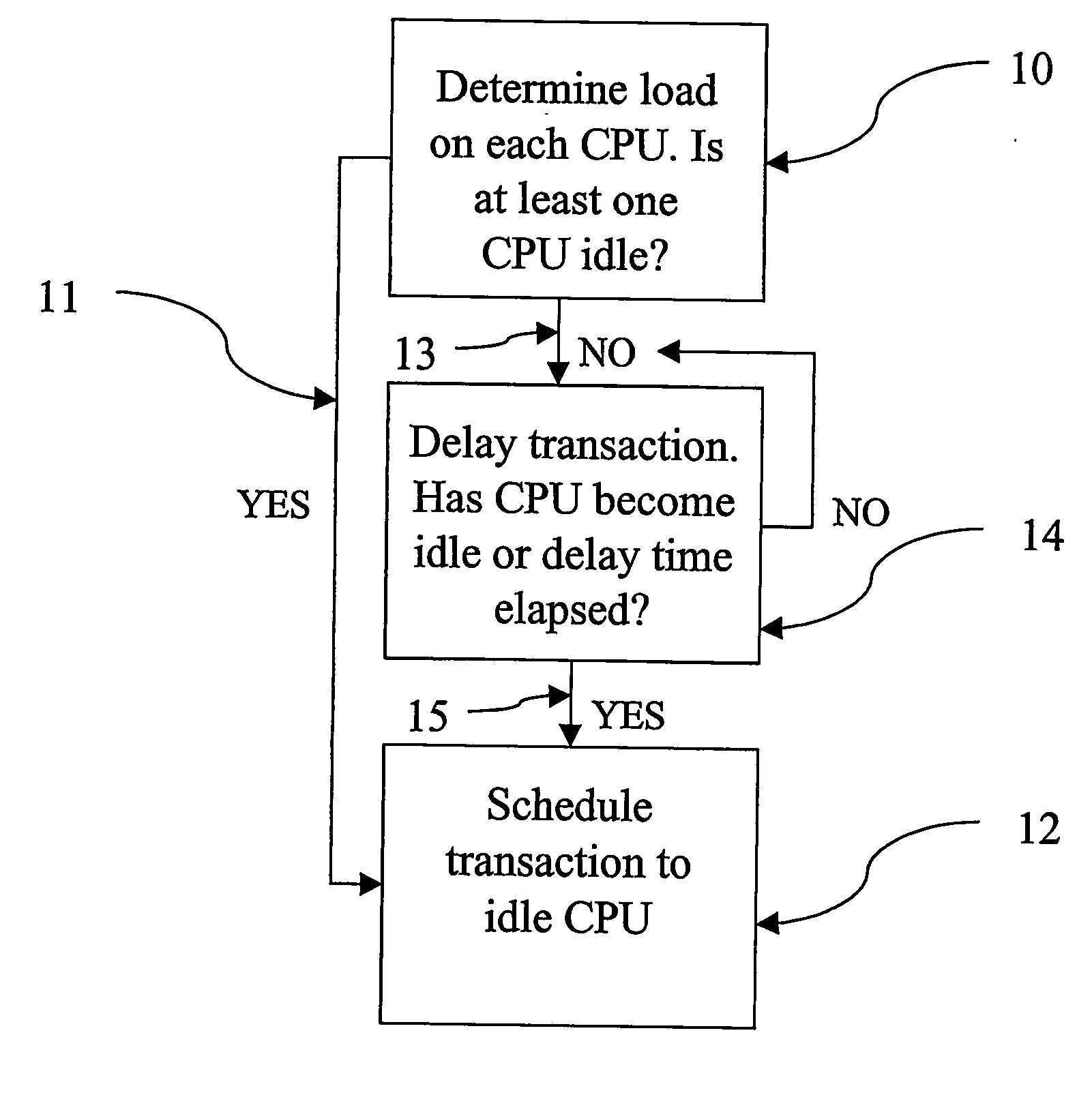

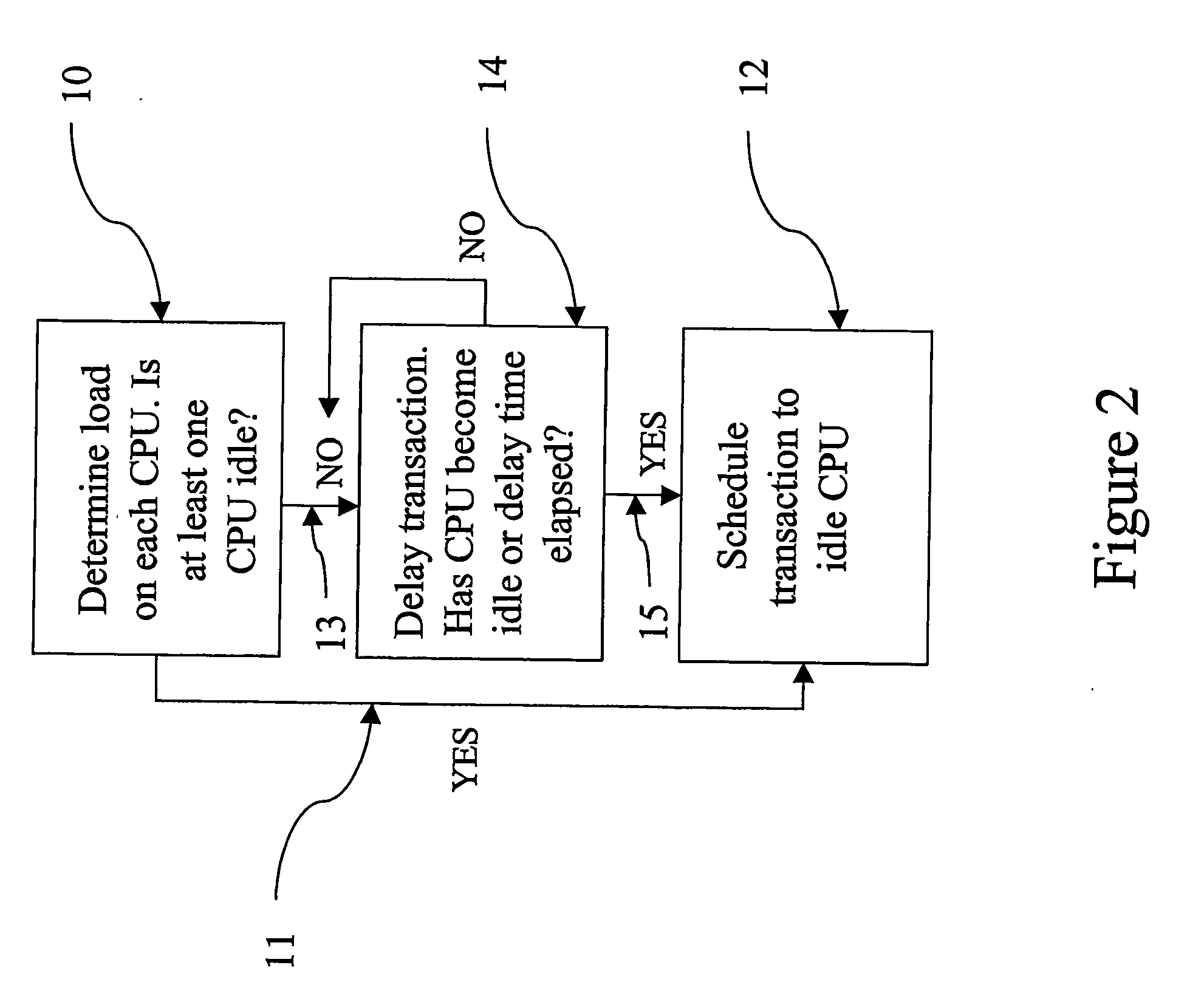

Streamlining CPU utilization by delaying transactions

Inactive | US20060123421A1 | Increase the number of | Multiprogramming arrangements | Memory systems | Current load | Delayed time

Owner:UNISYS CORP

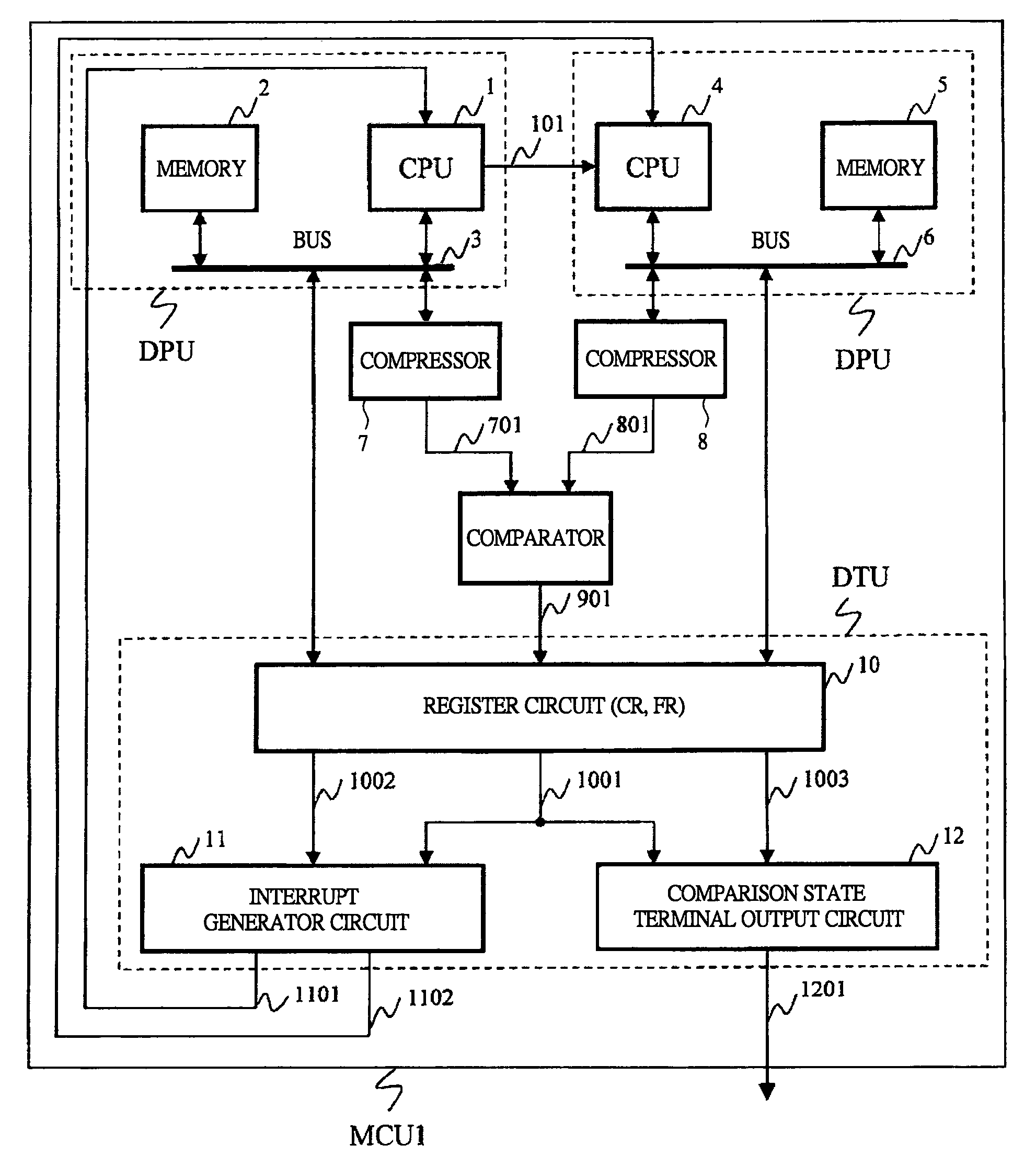

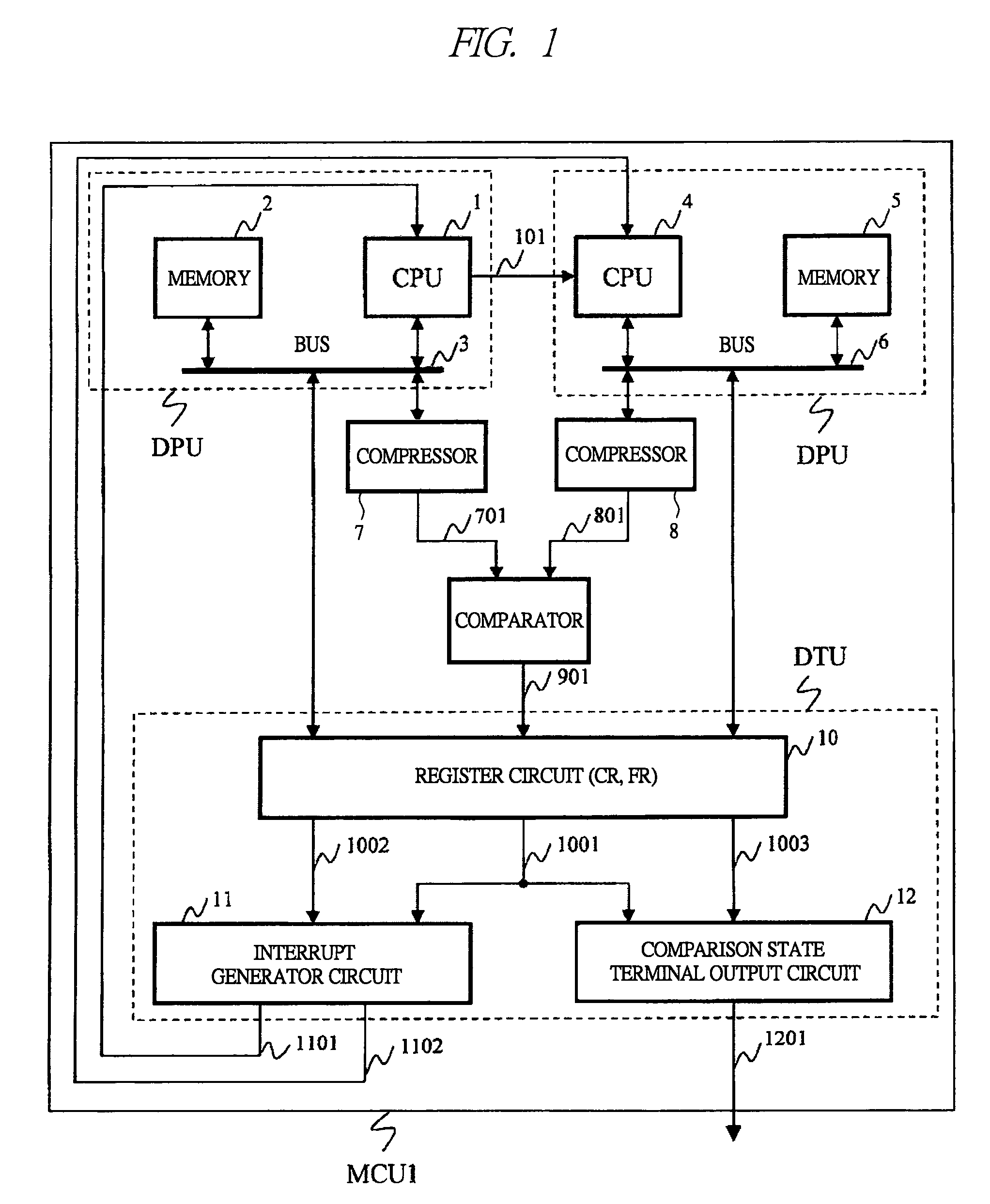

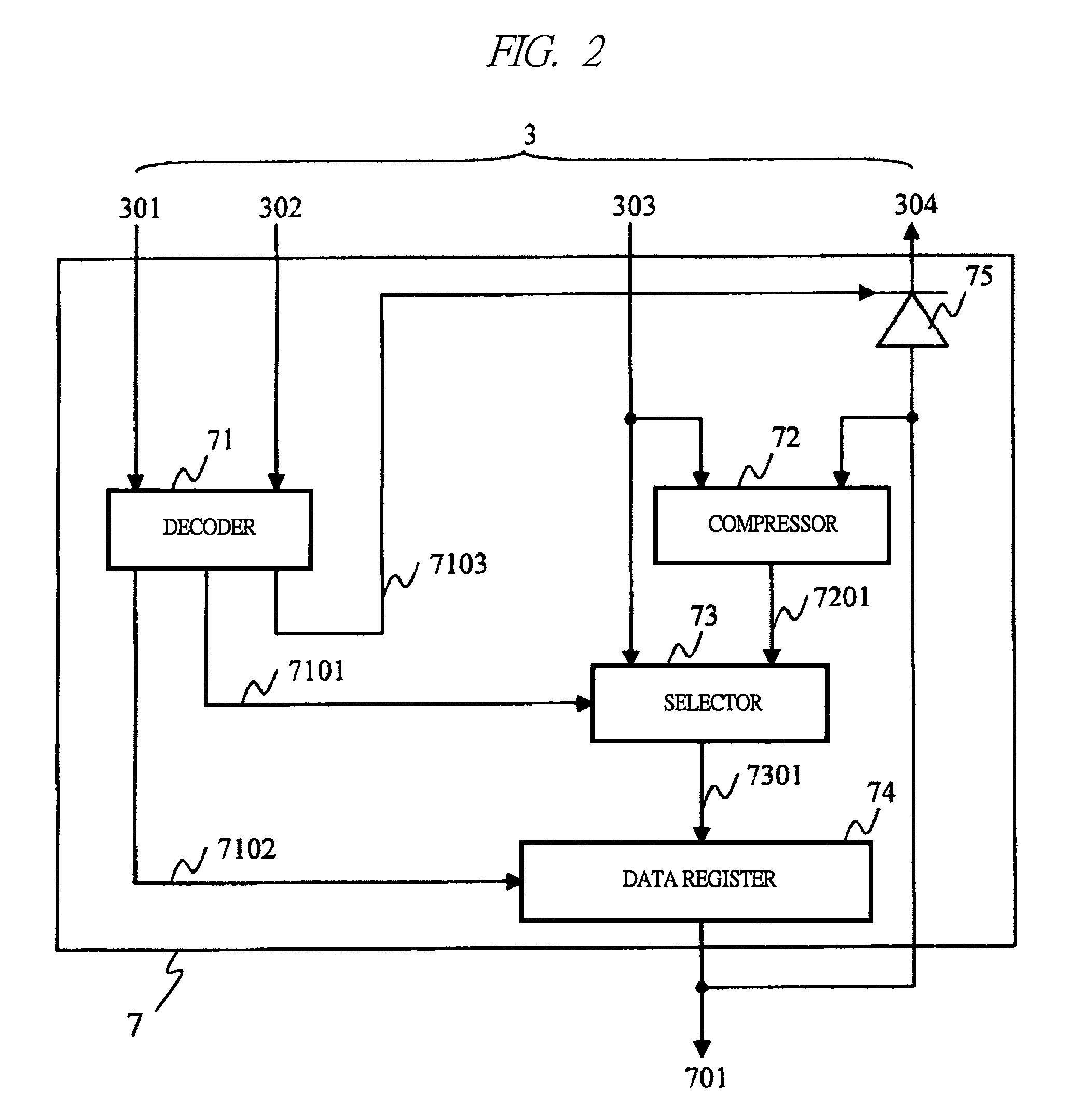

Multi-core microcontroller having comparator for checking processing result

Inactive | US20100131741A1 | Abnormality of processing | High-performance processing | Digital computer details | Concurrent instruction execution | Microcontroller | Parallel computing

Owner:RENESAS ELECTRONICS CORP

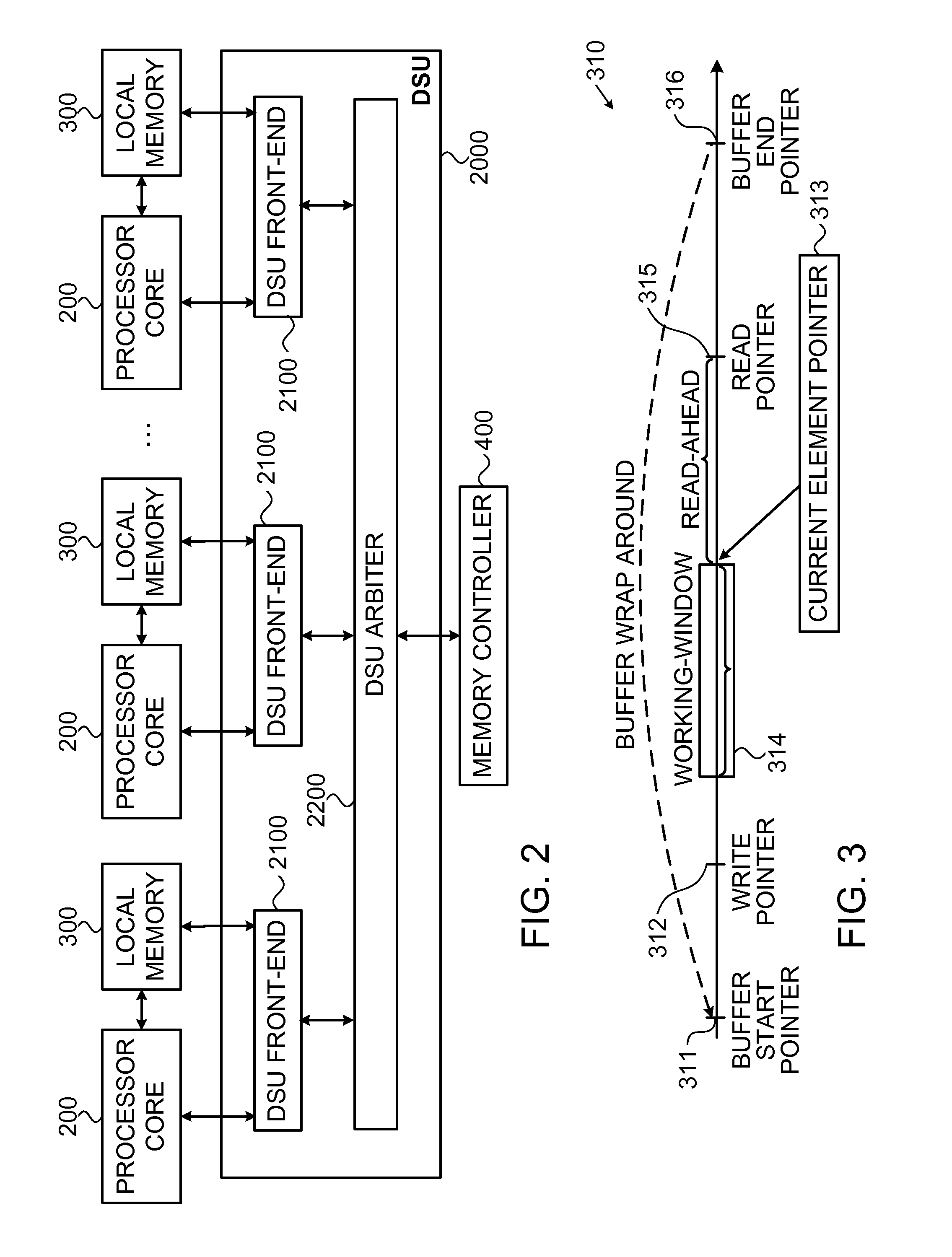

Employing a data mover to communicate between dynamically selected zones of a central processing complex

Inactive | US6874040B2 | Low cost | High bandwidth | Interprogram communication | Digital computer details | Parallel computing | Mobile device

Data is moved between zones of a central processing complex via a data mover located within the central processing complex. The data mover moves the data without sending the data over a channel interface and without employing processor instructions to perform the move. Instead, the data mover employs fetch and store state machines and line buffers to move the data.

Owner:INTEL CORP

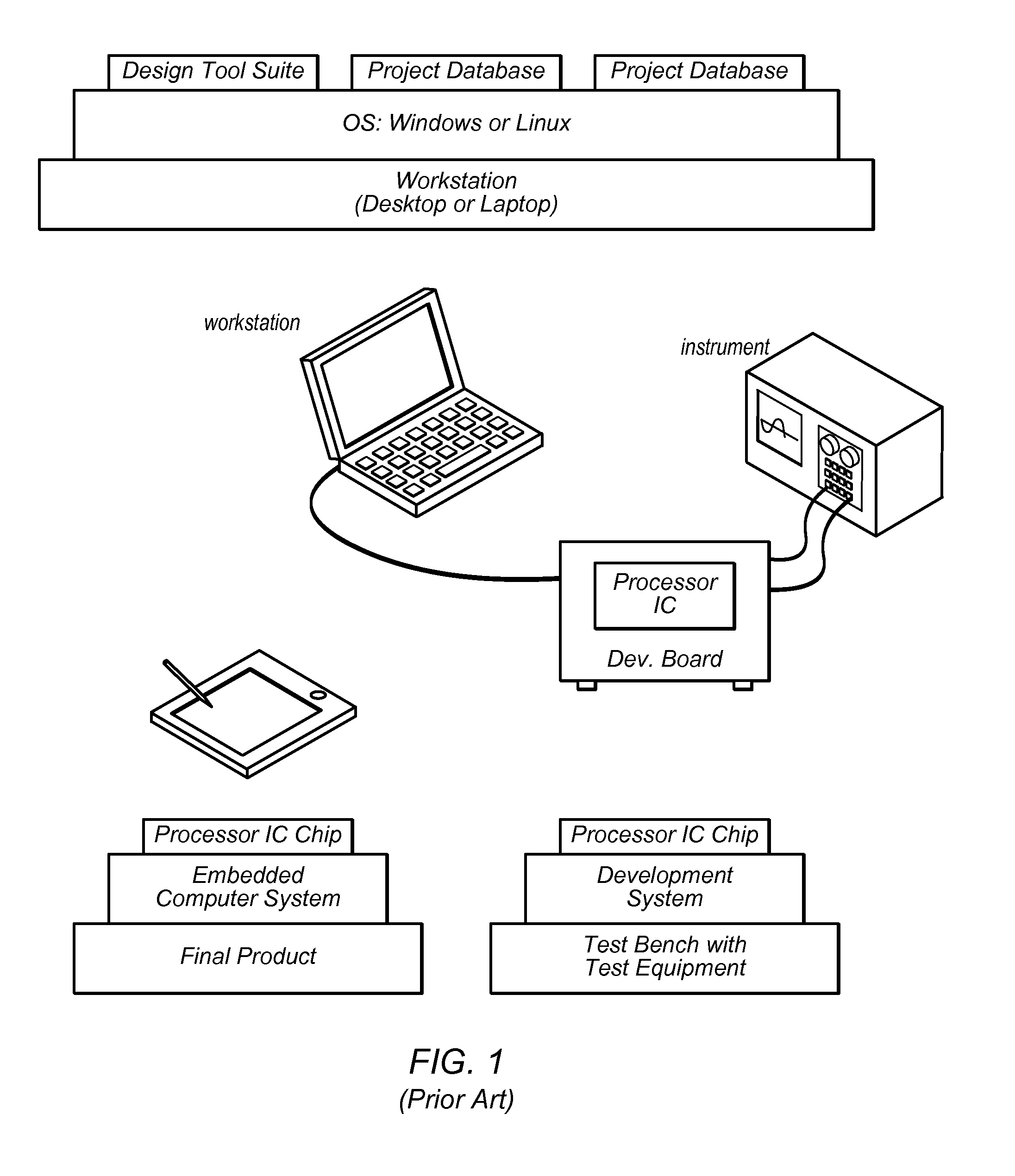

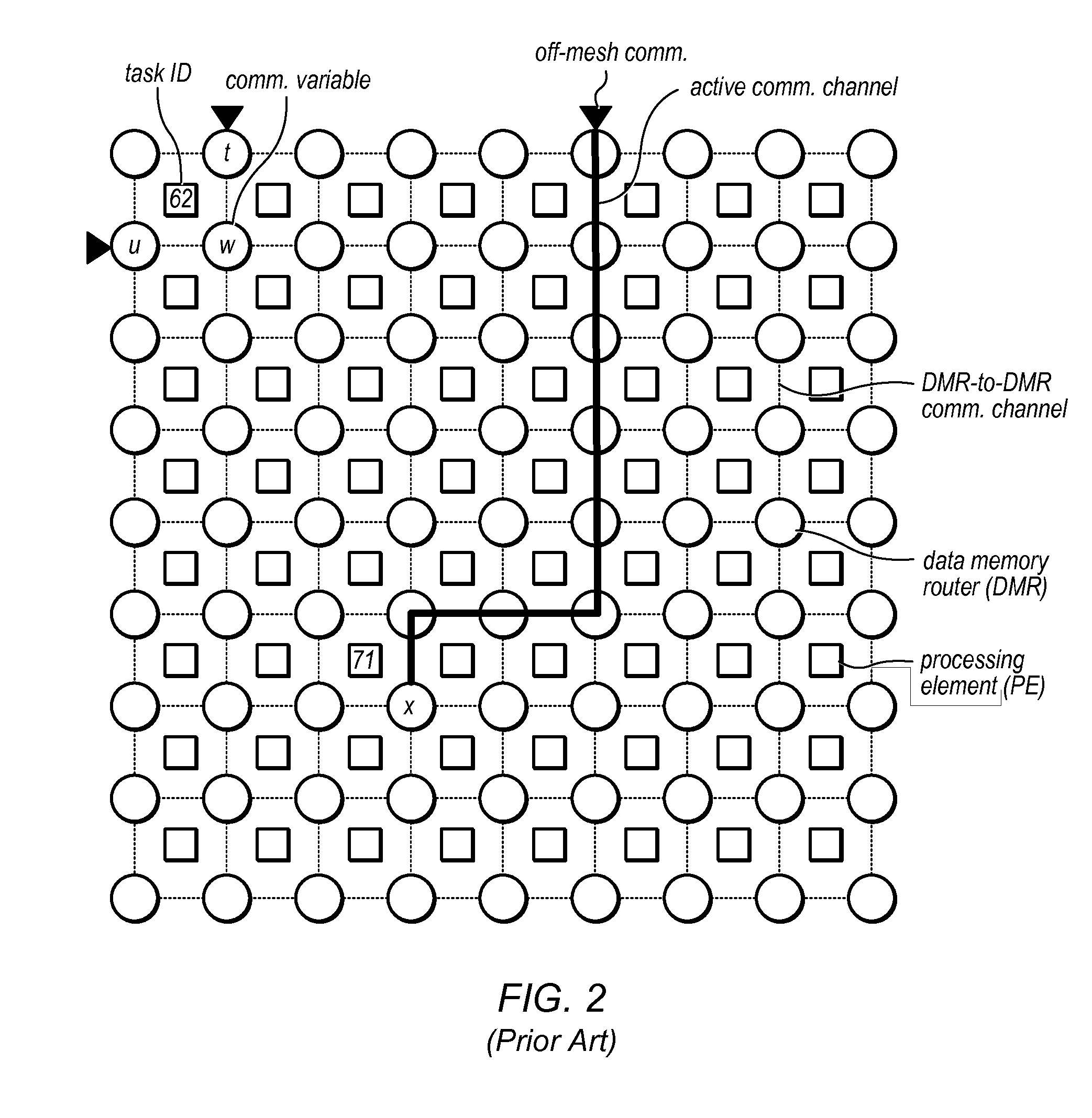

Real Time Analysis and Control for a Multiprocessor System

Active | US20140137082A1 | Software testing/debugging | CAD circuit design | Real time analysis | Parallel computing

Owner:COHERENT LOGIX

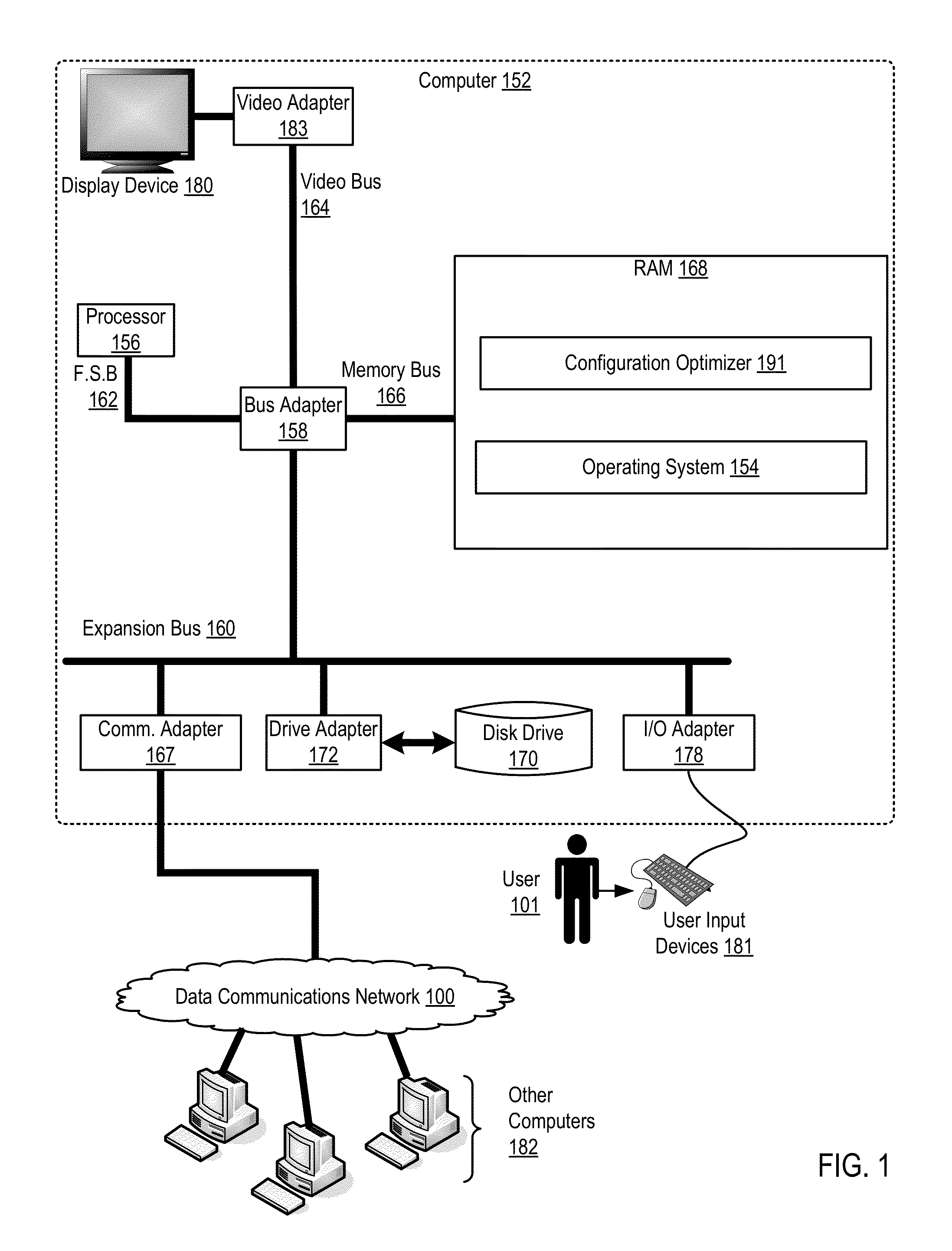

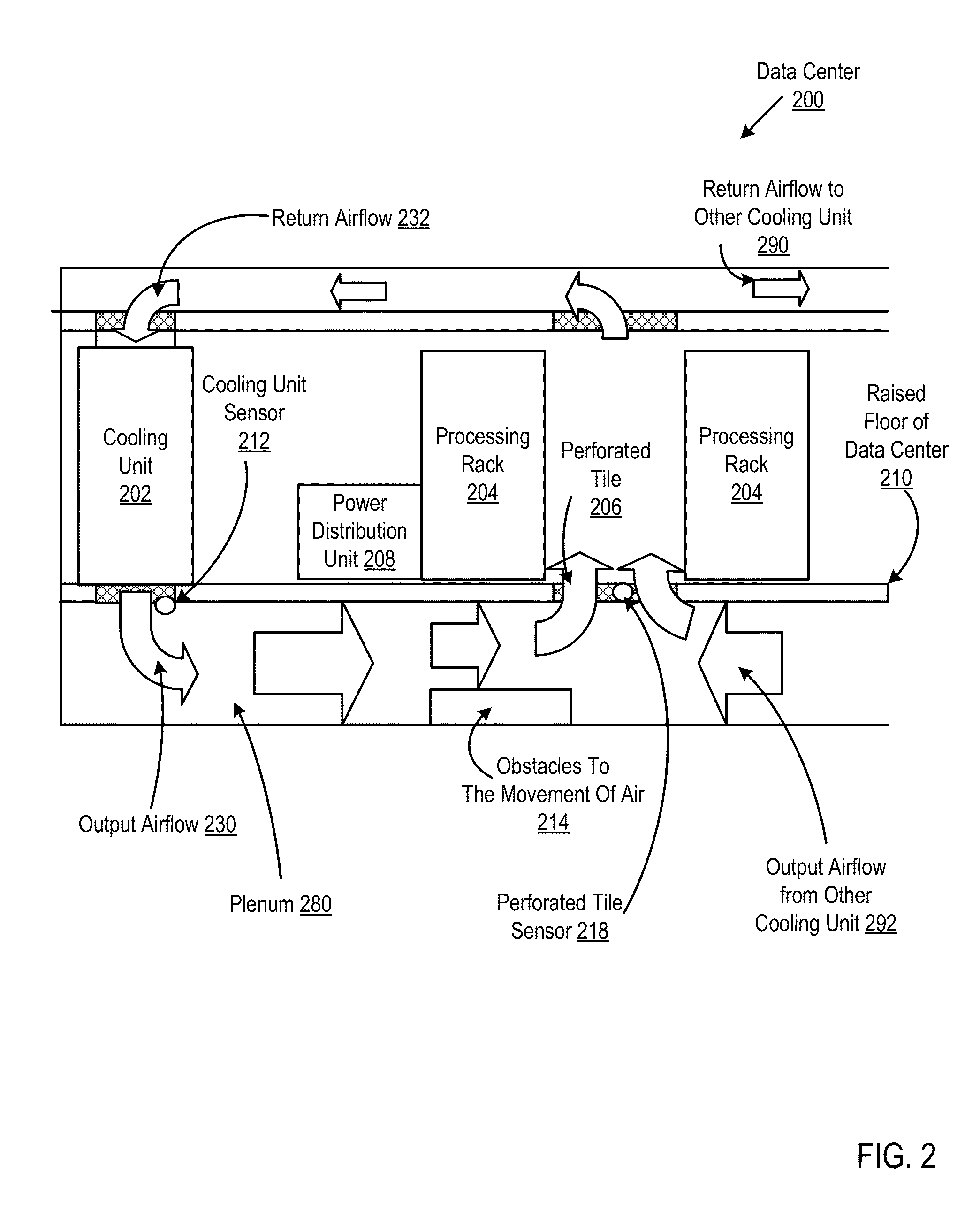

Provisioning aggregate computational workloads and air conditioning unit configurations to optimize utility of air conditioning units and processing resources within a data center

Inactive | US20130103218A1 | Improve overall utilization | Consume least amount of energy | Mechanical power/torque control | Programme control | Data center | Parallel computing

Owner:IBM CORP

Simulation apparatus and method for verifying hybrid system

Inactive | US20130204602A1 | Error detection/correction | Design optimisation/simulation | Hybrid system | Parallel computing

Disclosed herein is a simulation apparatus for verifying a hybrid system. The simulation apparatus includes a system model input unit, a model information storage unit, a simulation unit, and a result display unit. The system model input unit receives subsystem models which model subsystems included in a hybrid system. The model information storage unit stores the subsystem models and information about the operations of the subsystem models. The simulation unit runs a simulation of the subsystem models based on the information about the operations of the subsystem models stored in the model information storage unit. The result display unit displays the results of running the simulation of the subsystem models using the simulation unit.

Owner:ELECTRONICS & TELECOMM RES INST

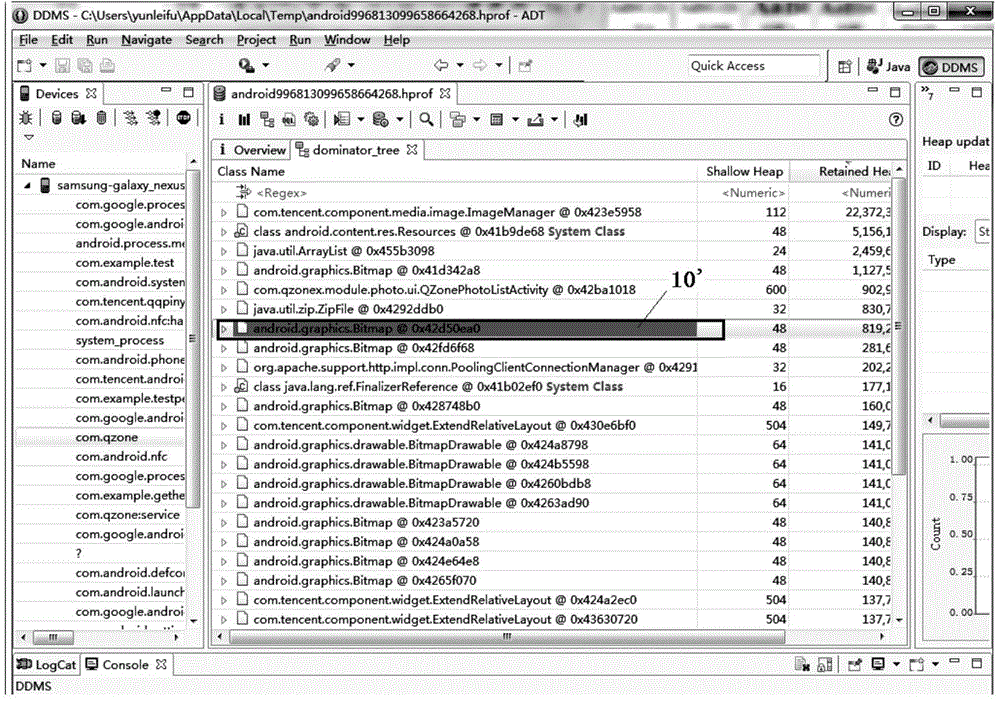

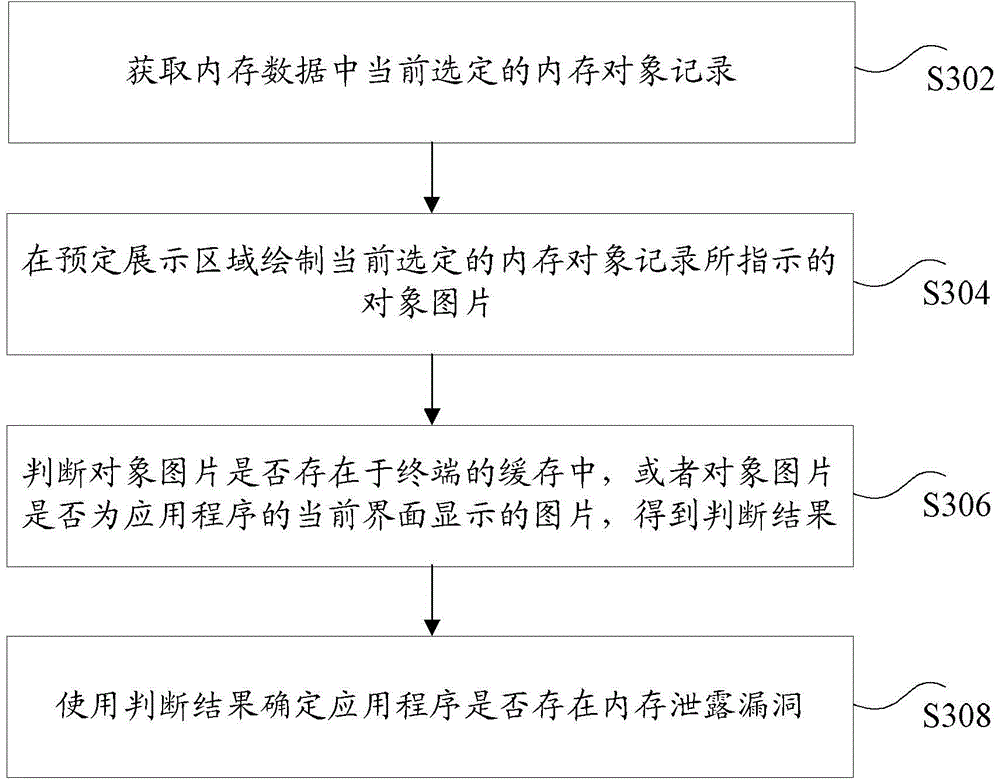

Detection method and apparatus for memory leak bug

Active | CN105373471A | Accurate detection | Solve the low detection accuracy | Software testing/debugging | Platform integrity maintenance | Parallel computing | Memory object

Owner:TENCENT TECH (SHENZHEN) CO LTD

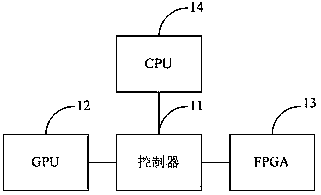

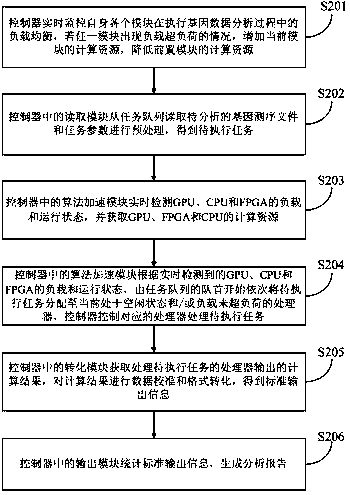

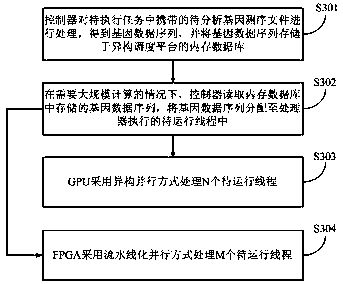

Gene data analysis method and heterogeneous scheduling platform

Owner:BGI TECH SOLUTIONS

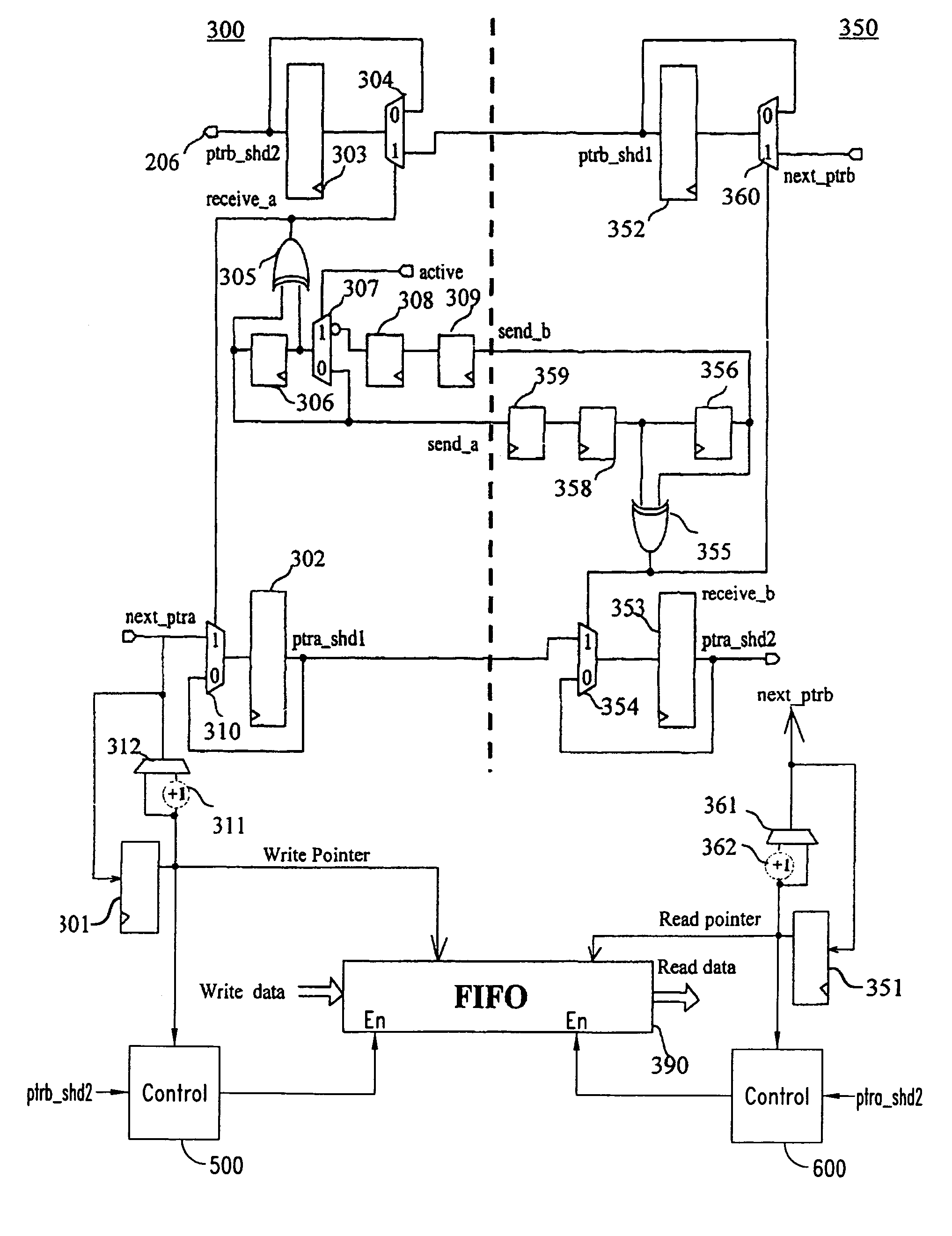

Device for transferring data via write or read pointers between two asynchronous subsystems having a buffer memory and plurality of shadow registers

Active | US7185125B2 | Digital computer details | Data switching by path configuration | Processor register | Parallel computing

Owner:STMICROELECTRONICS SRL

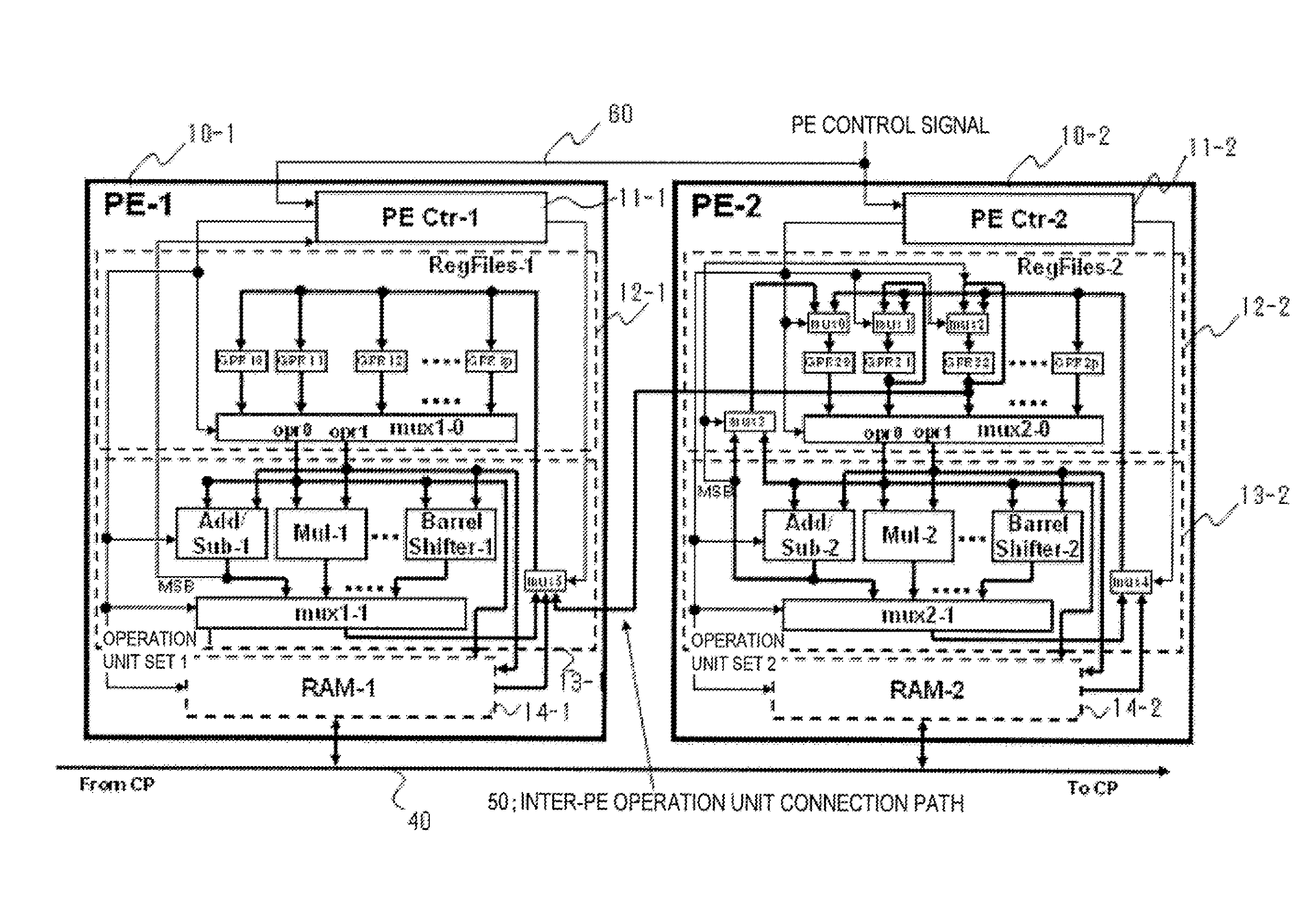

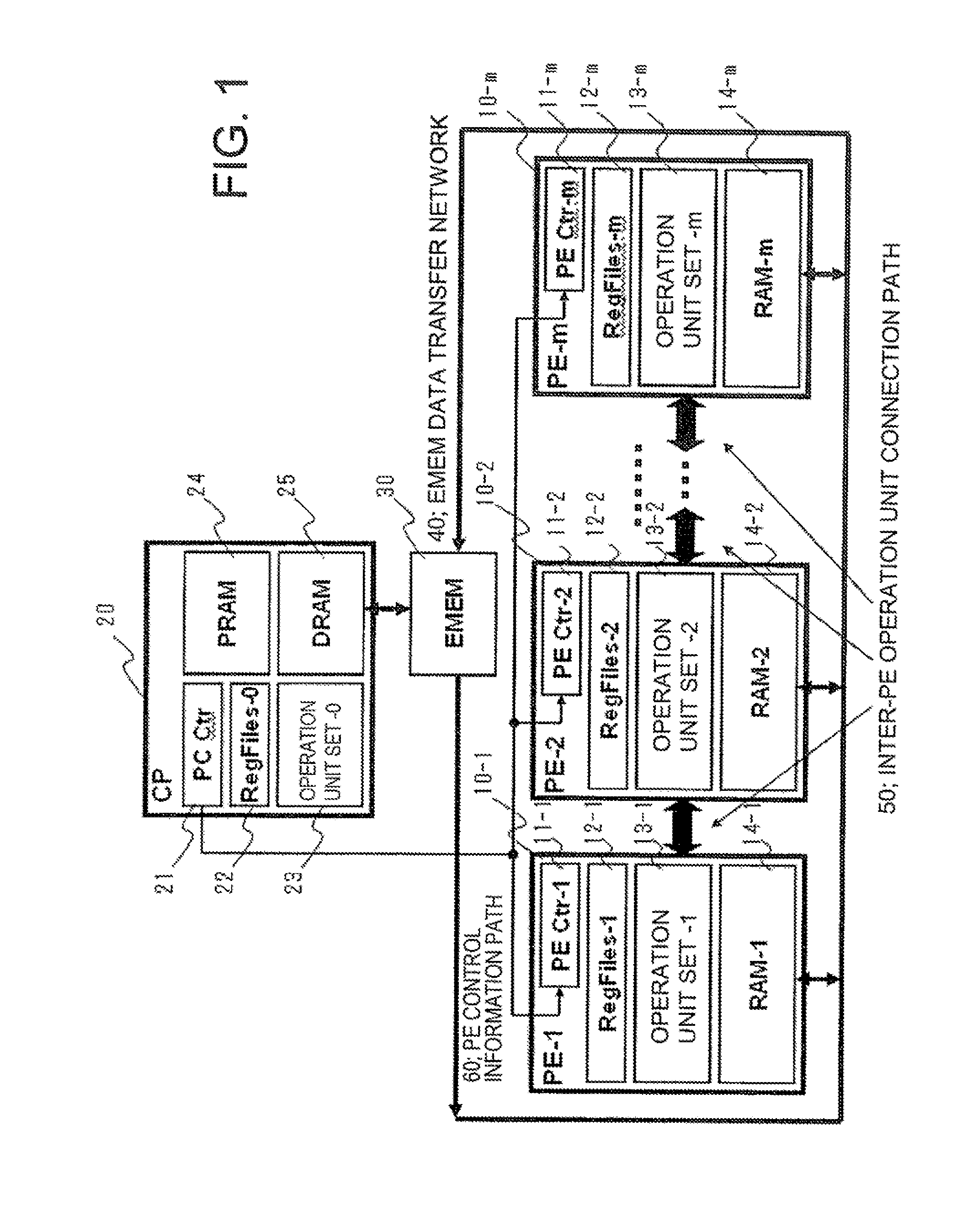

Reconfigurable SIMD processor and method for controlling its instruction execution

Inactive | US20100174891A1 | Increase resources | Improve performance | Single instruction multiple data multiprocessors | Memory systems | General purpose | Parallel computing

Owner:NEC CORP

Cache system access method and device

The embodiment of the invention discloses a cache system access method comprising the following steps. A cache manager maintenance module acquires the configuration information of a cache system, loads an adapter matched to the cache system according to that configuration information, and configures a cache manager so that its cache call object is the cache system. A uniform interface receives a cache operation instruction for the cache system, sent by an ERP (Enterprise Resource Planning) system, and forwards it to the cache manager. The cache manager forwards the instruction to the adapter. The adapter performs interface standard conversion on the instruction, converting it from the interface standard of the uniform interface to that of the cache system, and then sends the converted instruction to the cache system. The embodiment also provides a corresponding device. With this method, different cache systems can be connected to the ERP system as needed, and the connected cache systems can be switched freely.
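The flow described here (uniform interface, then cache manager, then adapter, then cache system) is an instance of the adapter pattern. The sketch below is a minimal model of it, not the patented implementation: every class name, the `"cache_system"` configuration key, and the in-memory `DictCache` standing in for a real cache system are assumptions made for illustration.

```python
class DictCache:
    """Stand-in cache system with its own (hypothetical) interface."""

    def __init__(self):
        self._items = {}

    def put_item(self, key, value):
        self._items[key] = value

    def fetch_item(self, key):
        return self._items.get(key)


class DictCacheAdapter:
    """Converts uniform-interface operations into DictCache calls."""

    def __init__(self, cache):
        self._cache = cache

    def execute(self, op, key, value=None):
        if op == "set":
            self._cache.put_item(key, value)
            return None
        if op == "get":
            return self._cache.fetch_item(key)
        raise ValueError(f"unsupported cache operation: {op}")


# Registry of adapters, selected by configuration (assumed mechanism).
ADAPTERS = {"dict": lambda: DictCacheAdapter(DictCache())}


class CacheManager:
    """Loads the adapter named in the configuration and forwards operations to it."""

    def __init__(self, config):
        self._adapter = ADAPTERS[config["cache_system"]]()

    def handle(self, op, key, value=None):
        return self._adapter.execute(op, key, value)


class UniformInterface:
    """The only API the calling (ERP) system sees."""

    def __init__(self, manager):
        self._manager = manager

    def set(self, key, value):
        self._manager.handle("set", key, value)

    def get(self, key):
        return self._manager.handle("get", key)
```

Switching to another cache system would then mean registering a second adapter and changing the configuration value, with no change to code that calls `UniformInterface`.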

Owner:KINGDEE SOFTWARE(CHINA) CO LTD

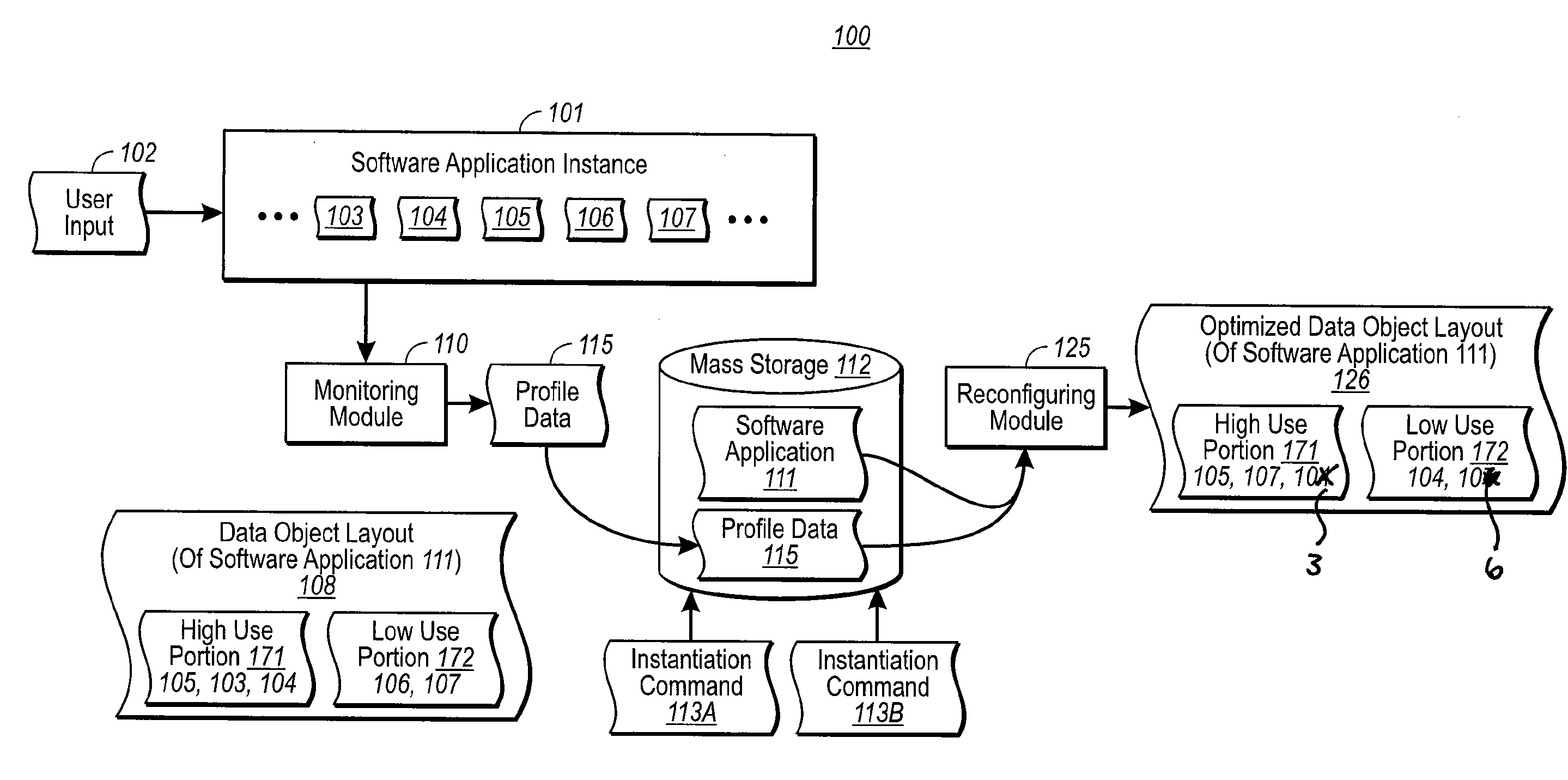

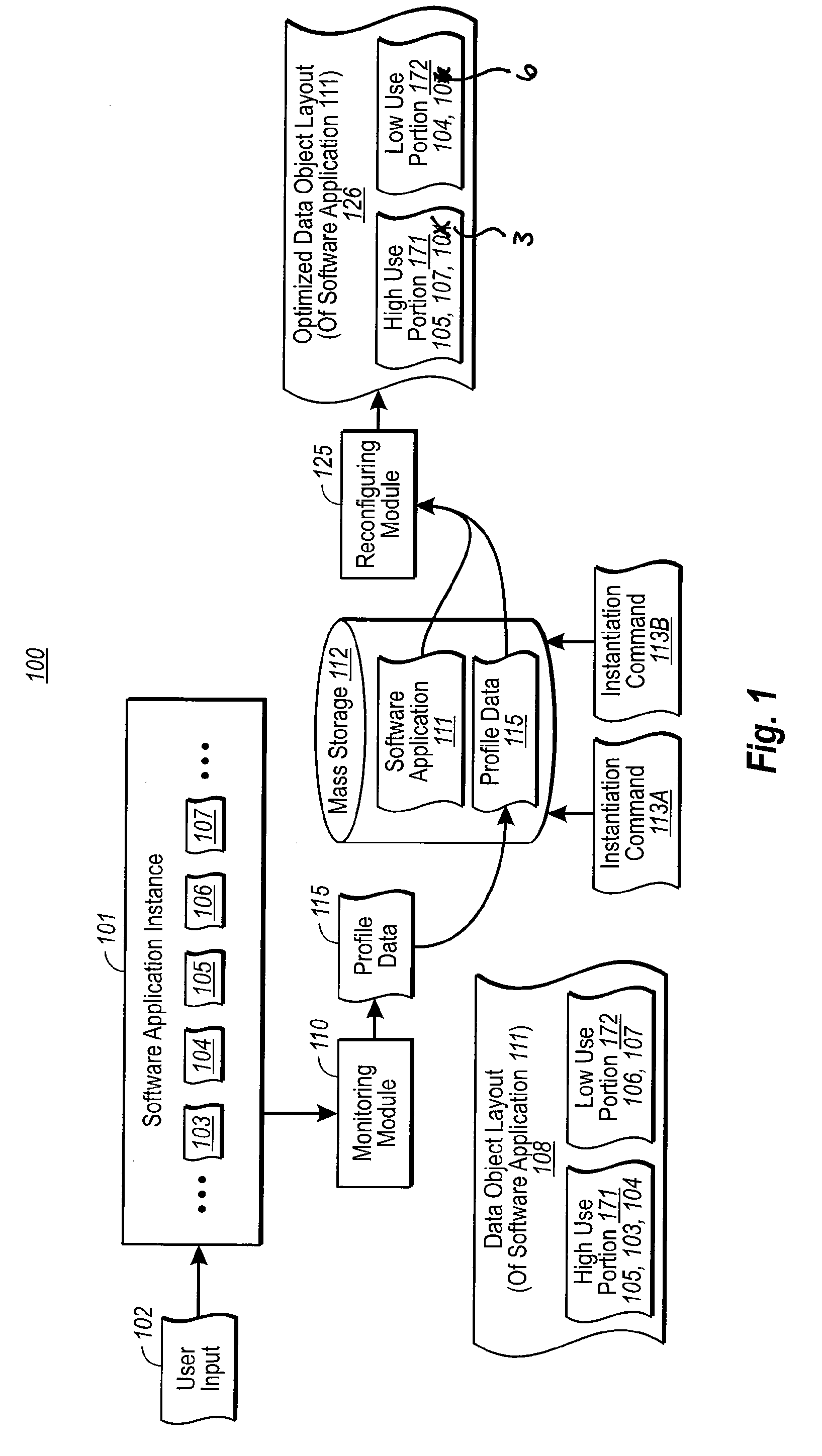

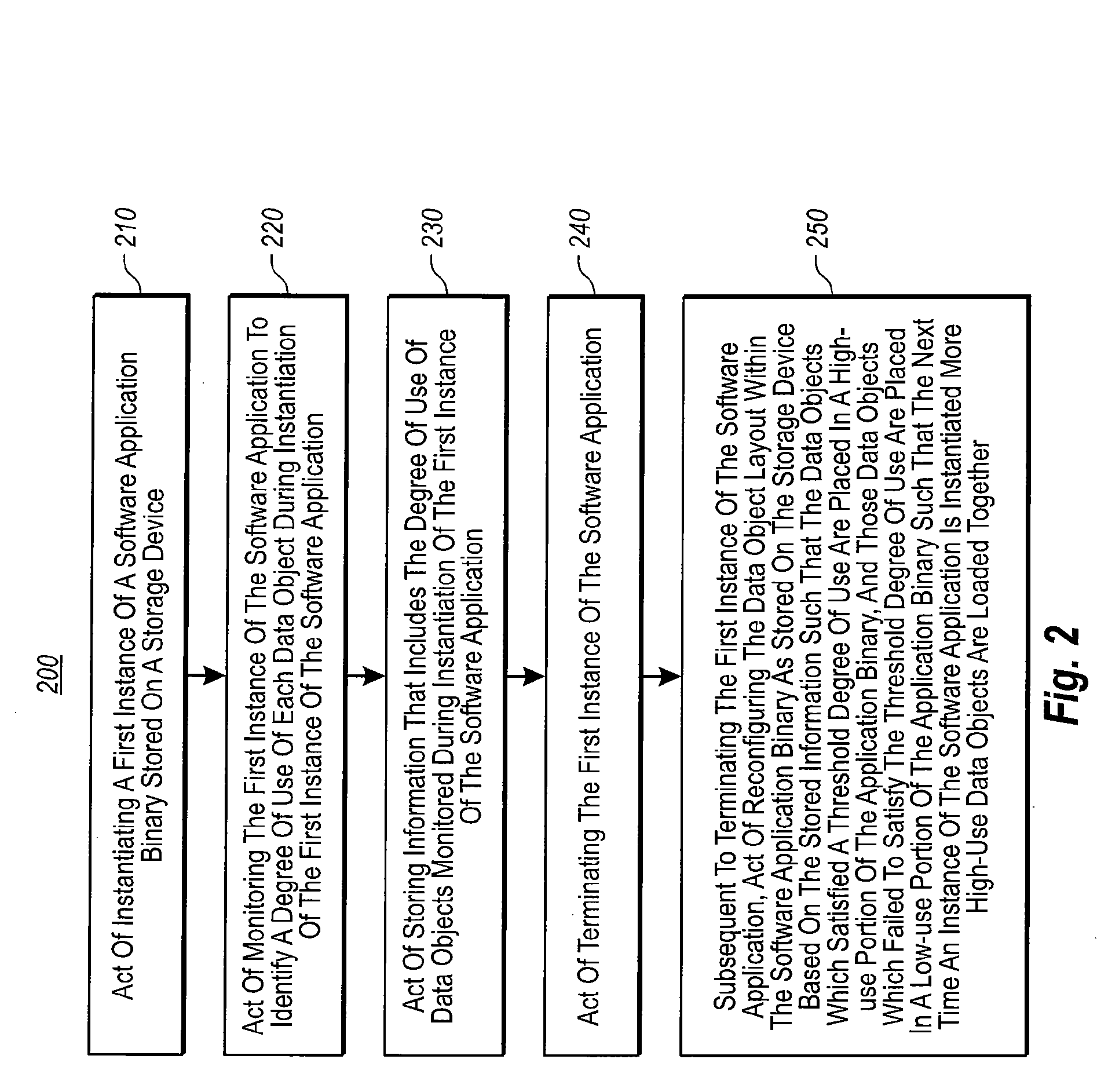

Incremental program modification based on usage data

Inactive | US20080034349A1 | Well formed | Error detection/correction | Program loading/initiating | Parallel computing | Computerized system

Owner:MICROSOFT TECH LICENSING LLC

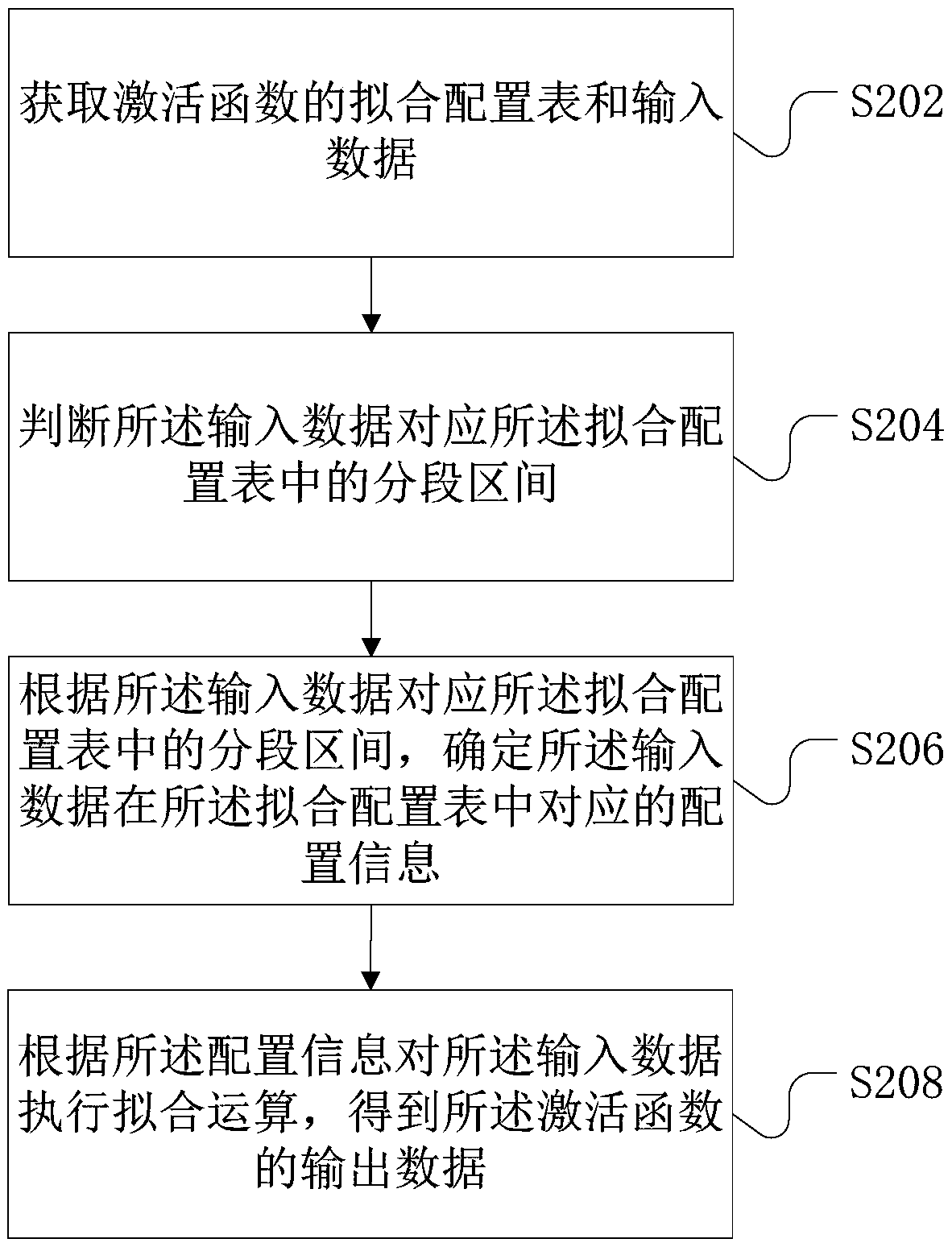

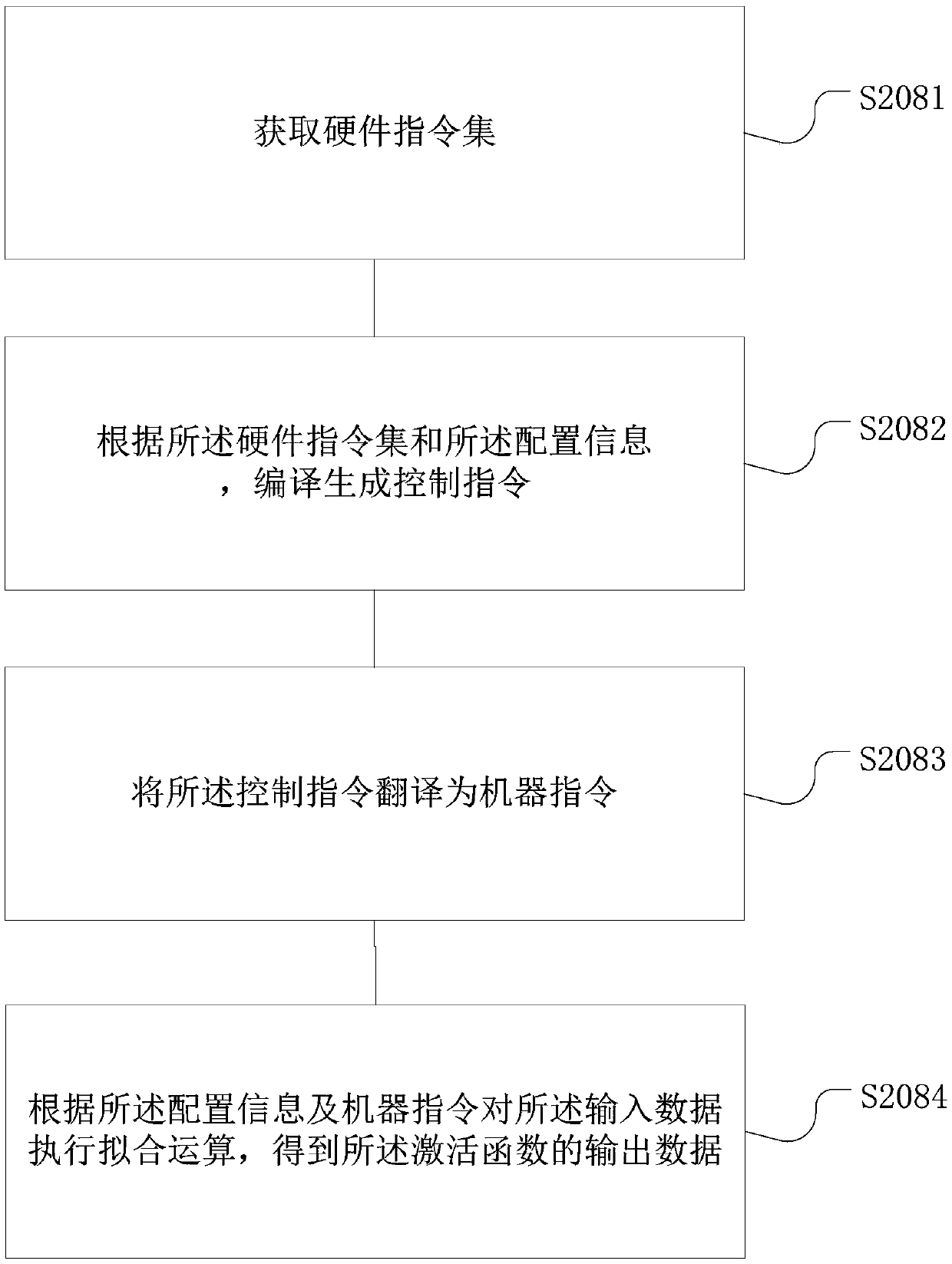

Data processing method and device and related product

Owner:CAMBRICON TECH CO LTD

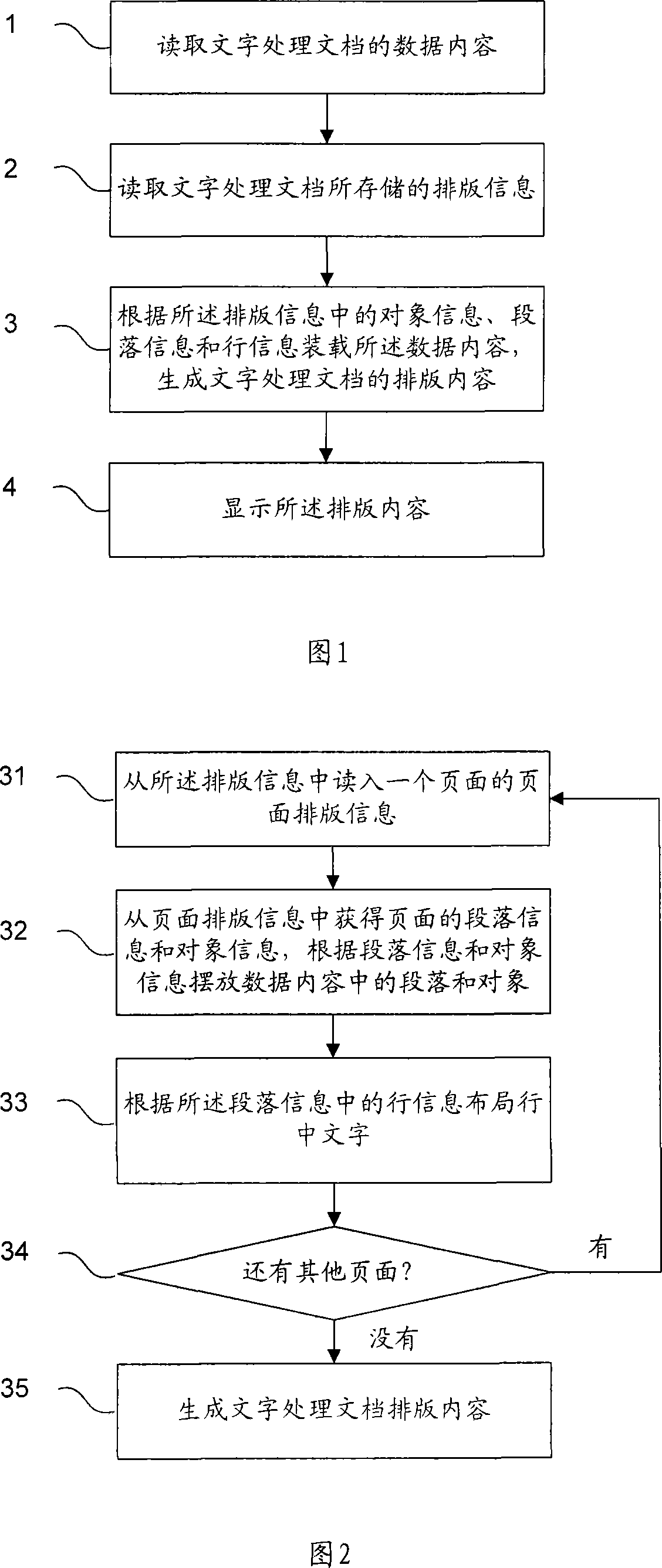

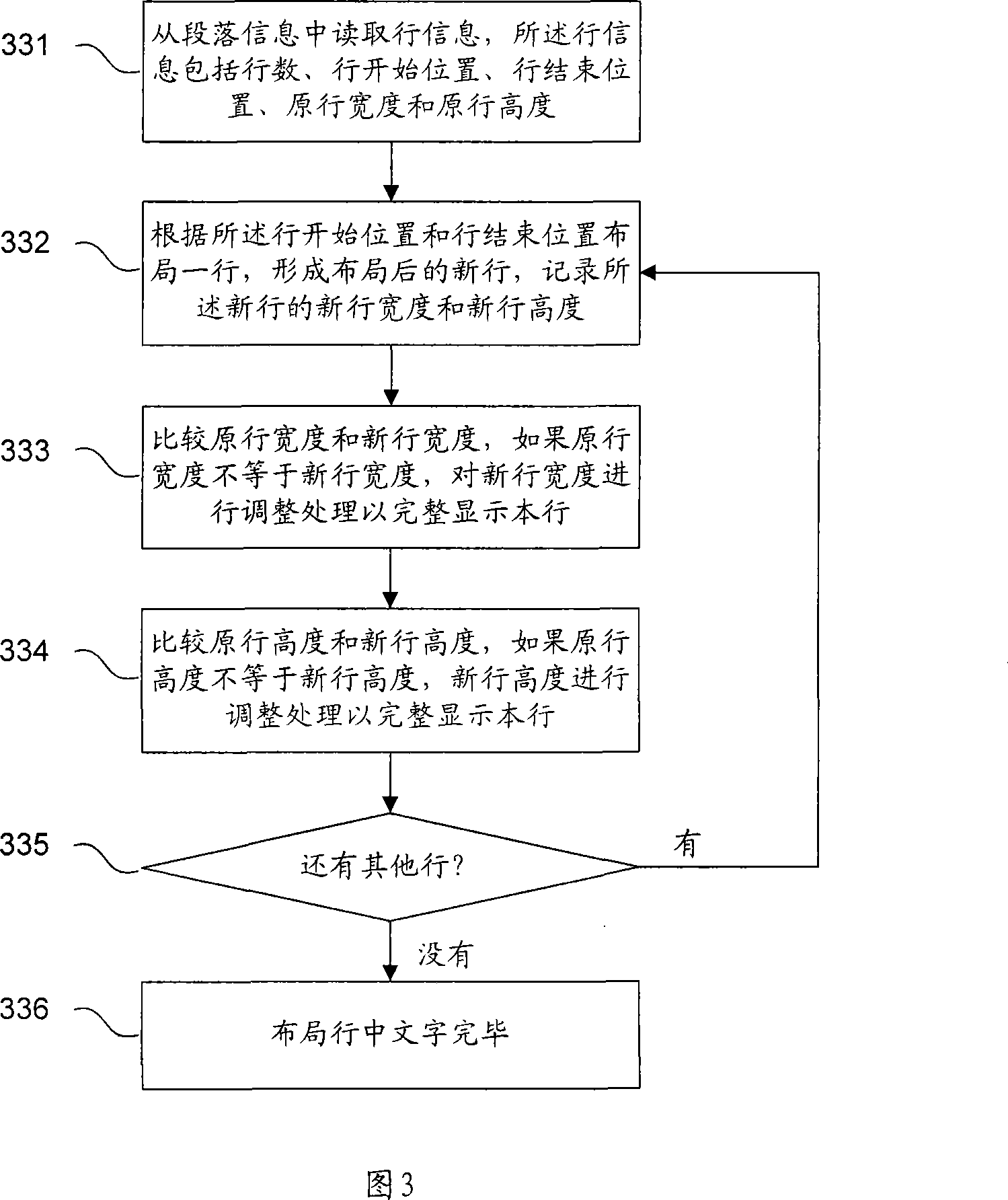

Method for implementing word processing software layout compatibility

Inactive | CN101169777A | No flow | Eliminate the possibility of change | Natural language data processing | Special data processing applications | Word processing | Parallel computing

Owner:WUXI EVERMORE SOFTWARE

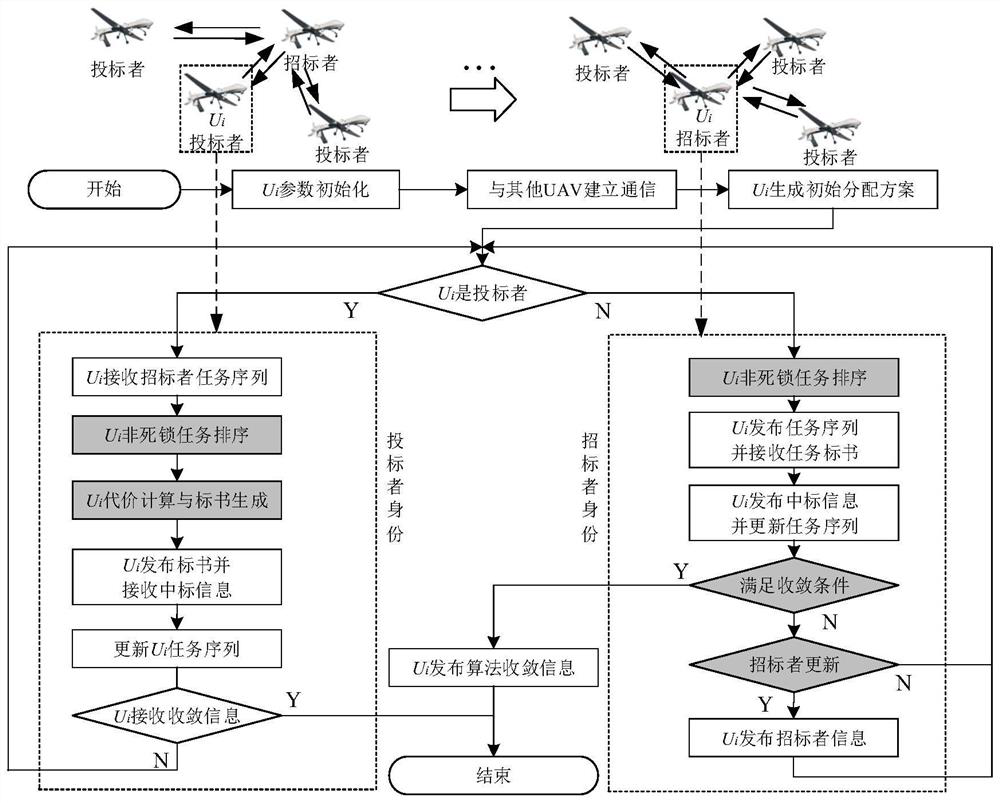

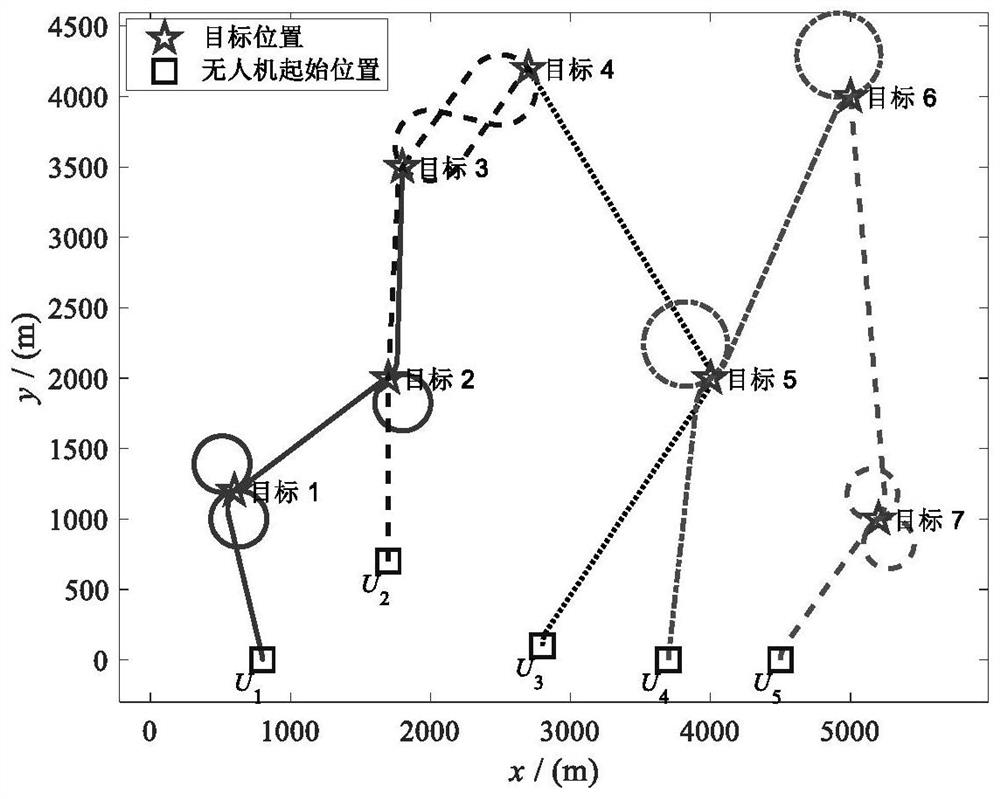

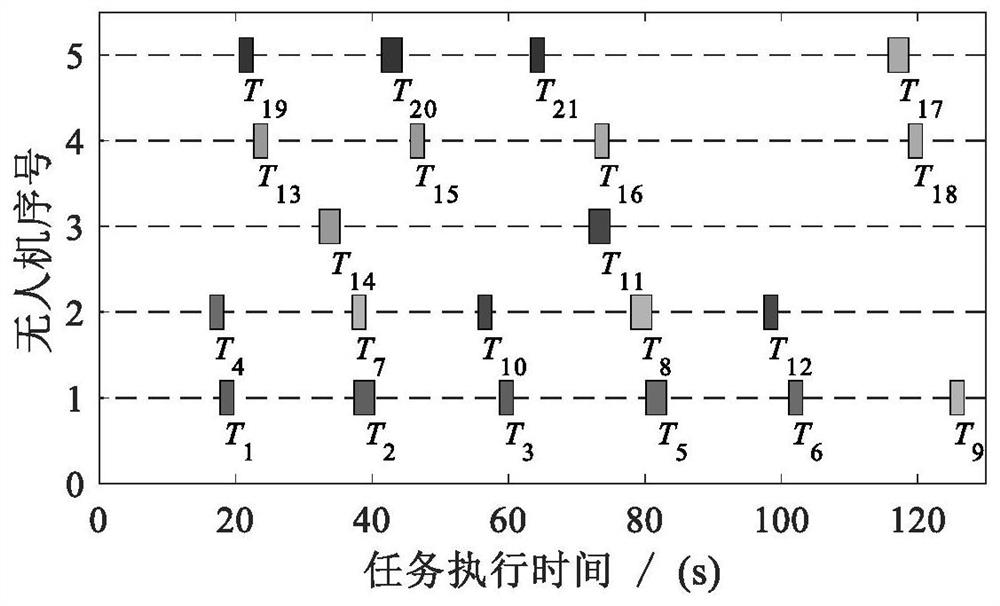

Multi-machine distributed time sequence task allocation method based on non-deadlock contract net algorithm

Pending | CN113671987A | Efficient output | Improve convenience | Position/course control in three dimensions | Distribution method | Parallel computing

Owner:BEIJING INSTITUTE OF TECHNOLOGY

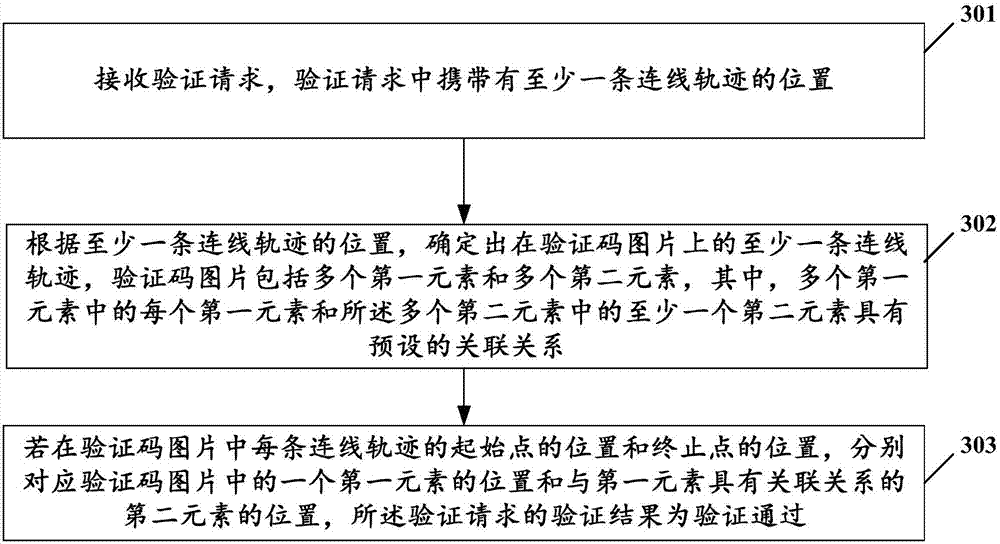

Generation and verification method and device of verification code picture

Inactive | CN107483208A | Key distribution for secure communication | User identity/authority verification | Validation methods | Parallel computing

Owner:CHINA MOBILE COMM LTD RES INST +1

© 2024 PatSnap. All rights reserved.