91 results about "Resource allocation" patented technology

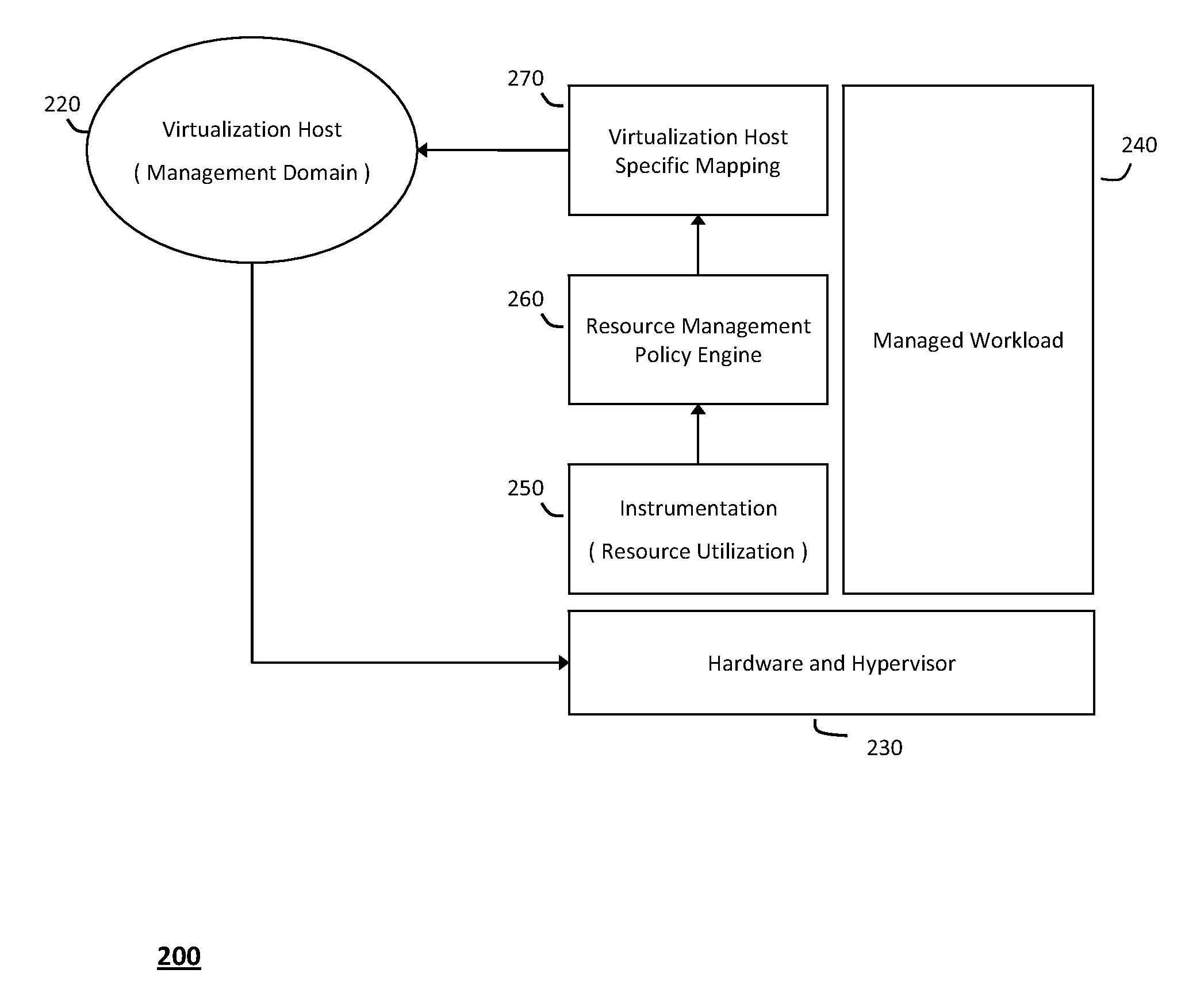

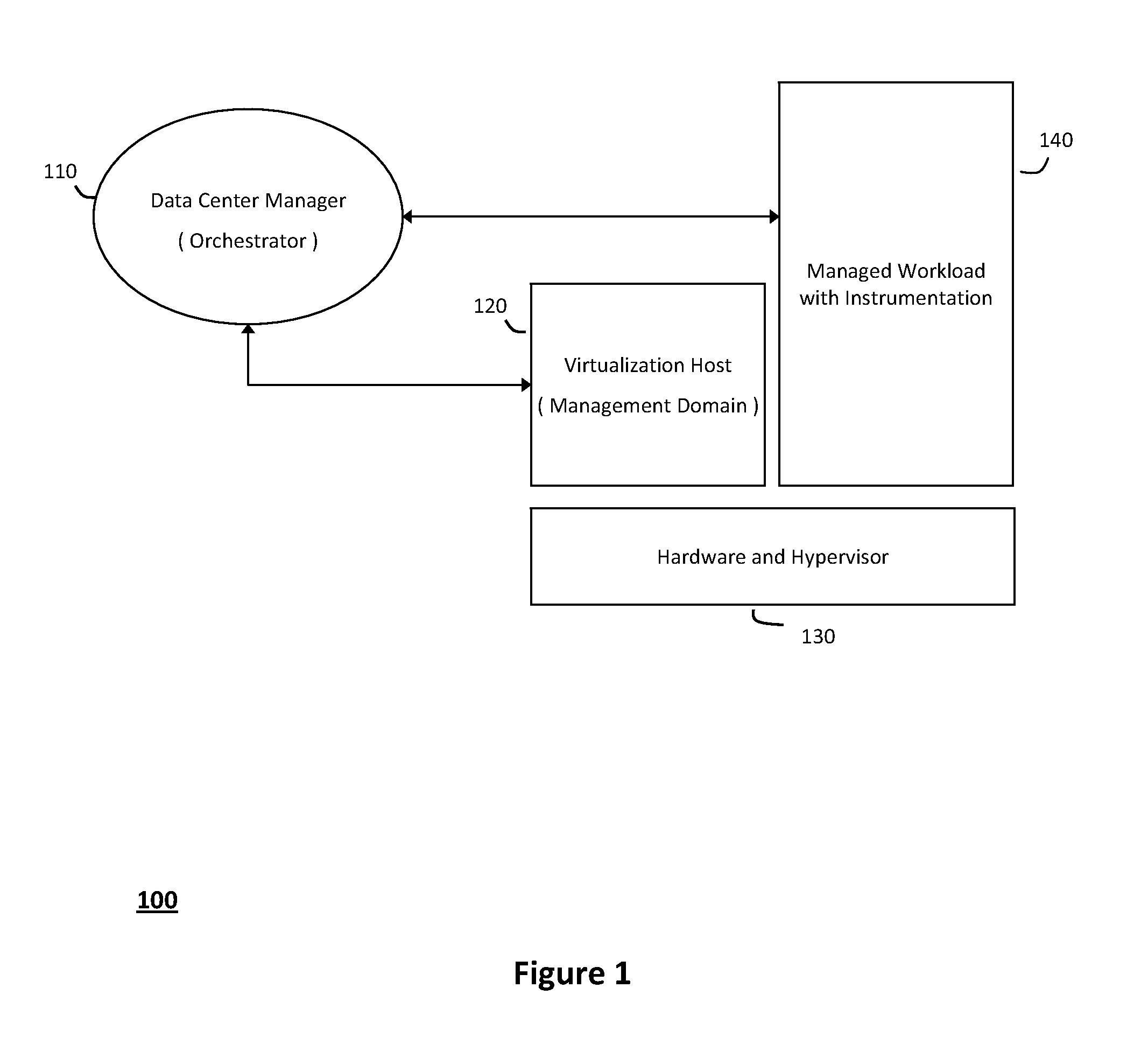

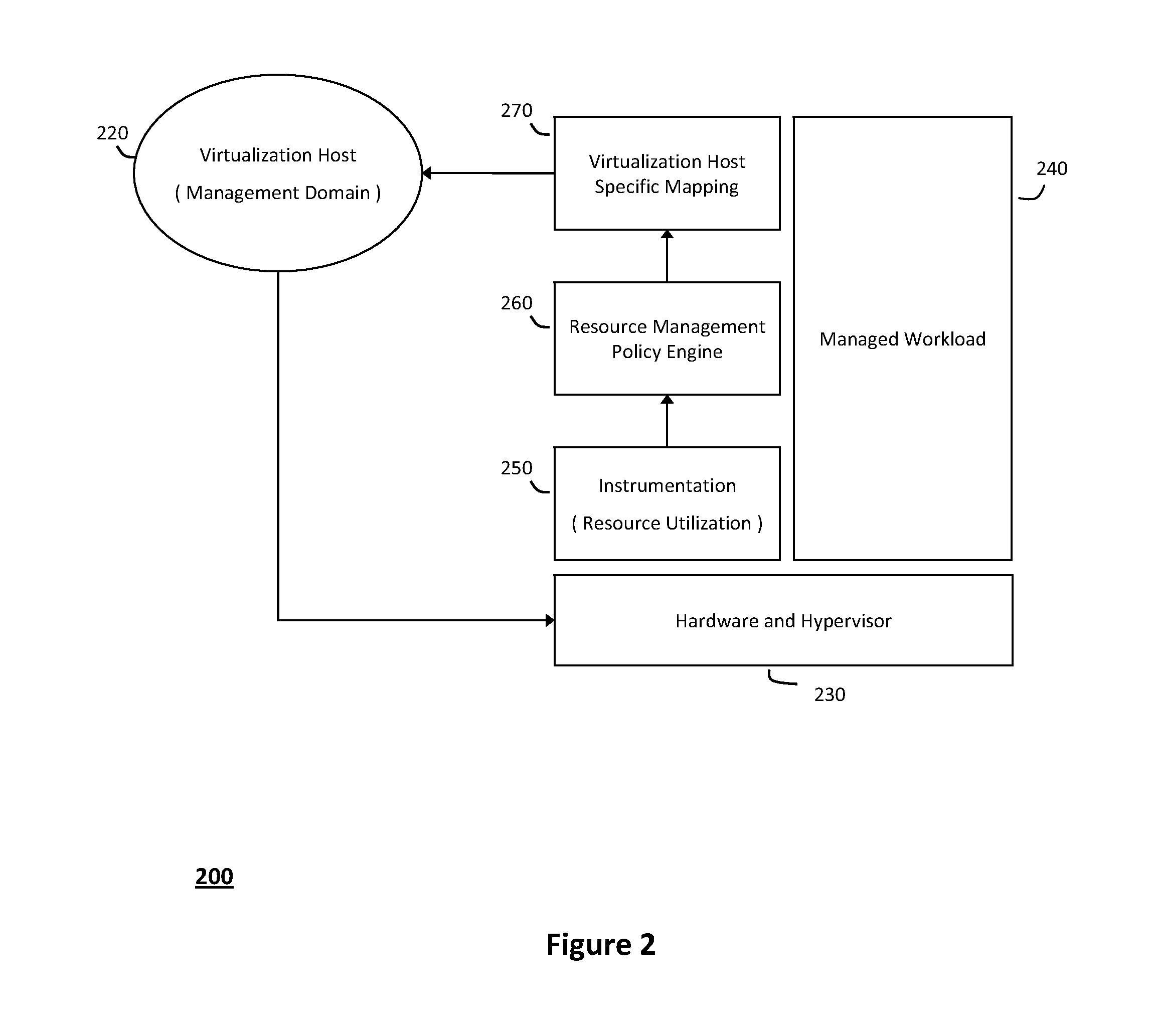

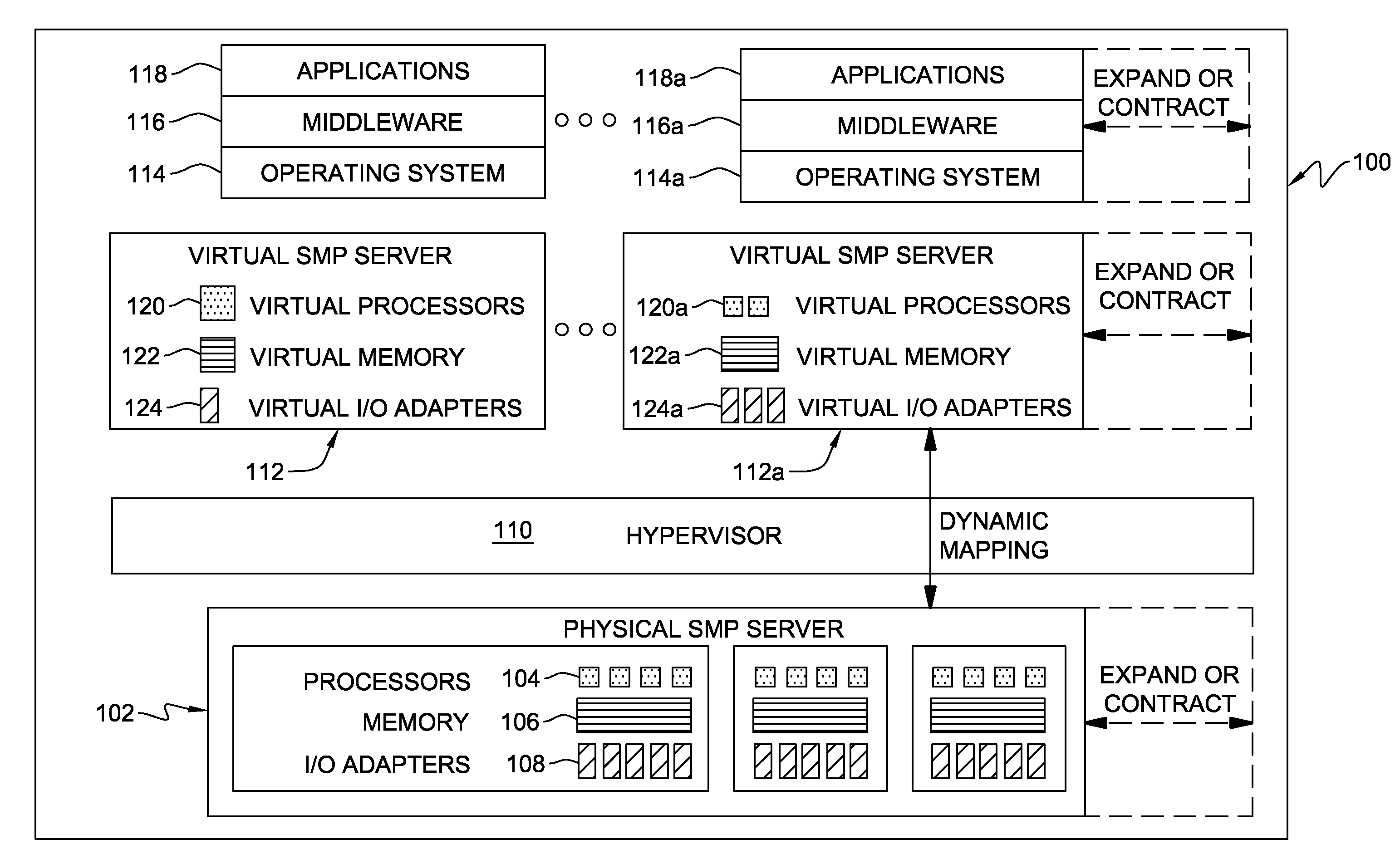

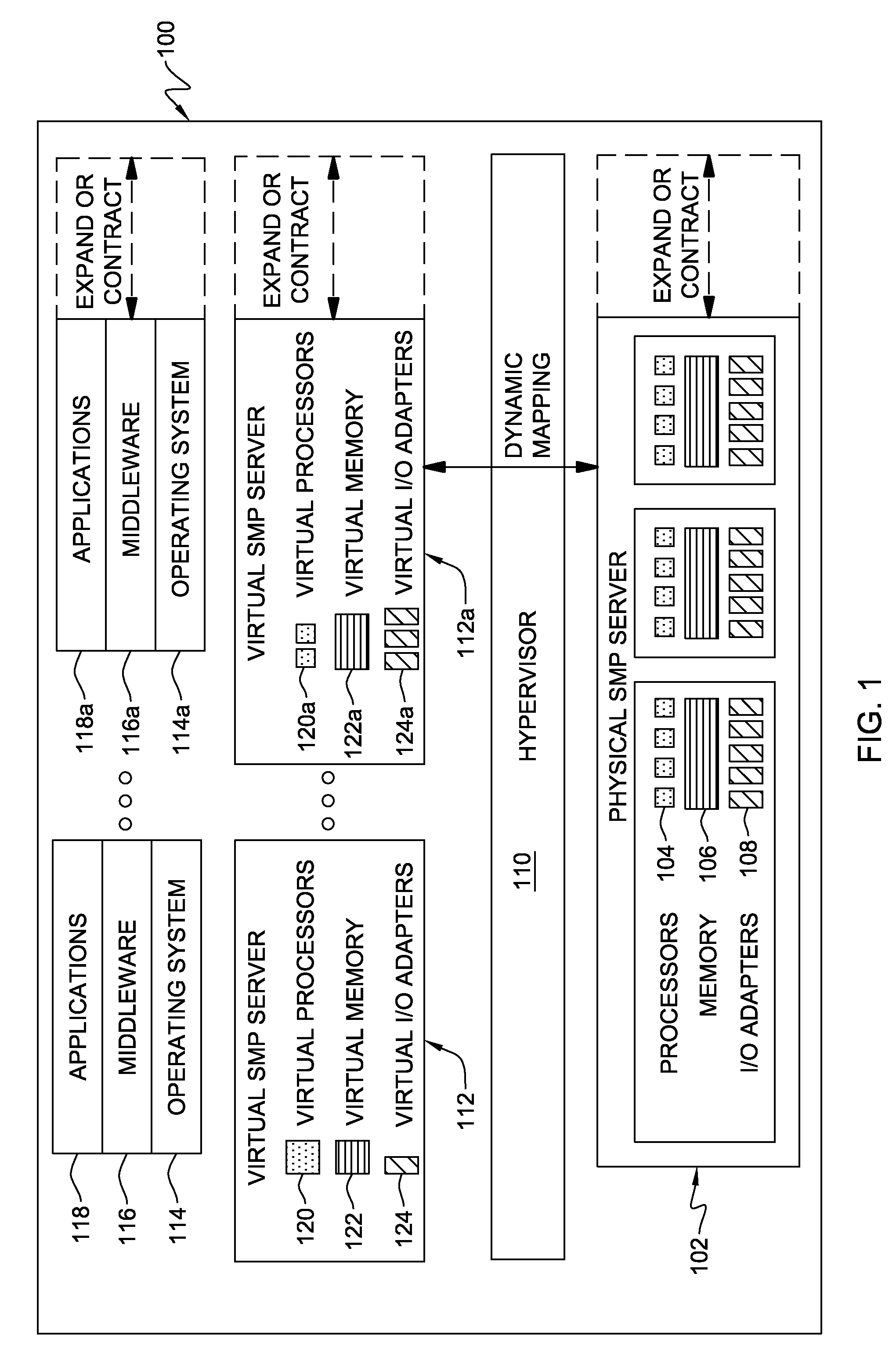

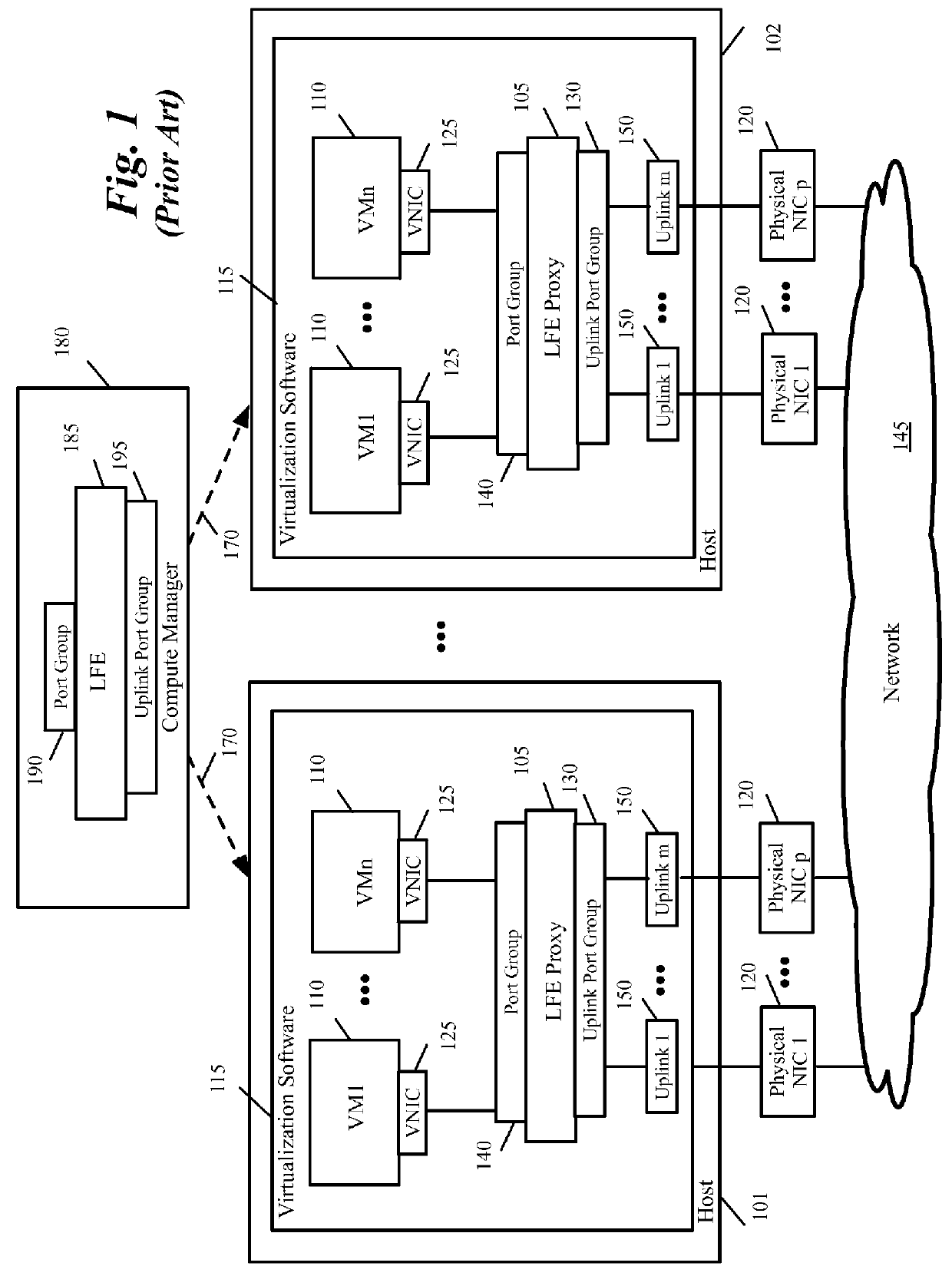

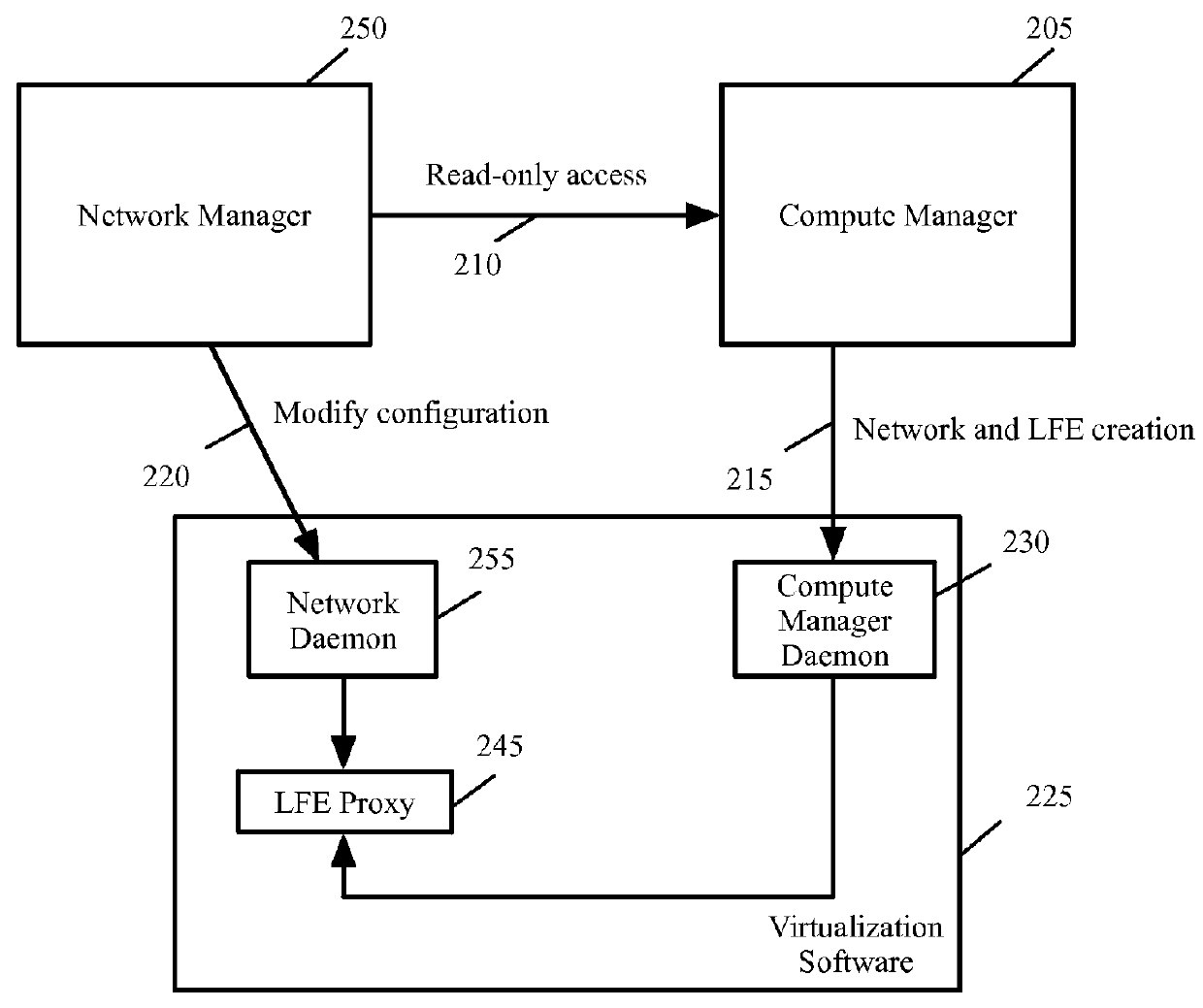

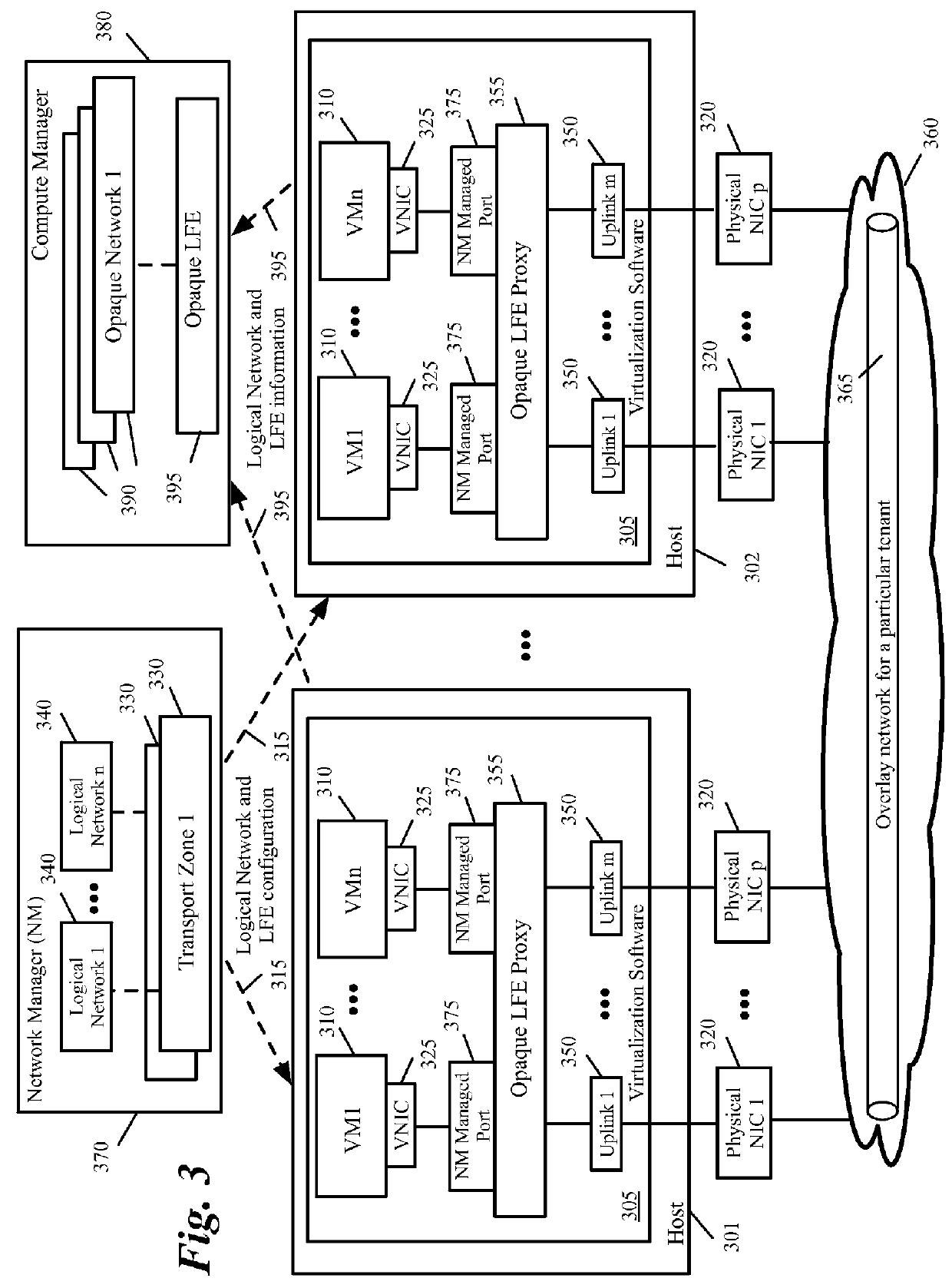

System and method for structuring self-provisioning workloads deployed in virtualized data centers

Active | US20120054763A1 | Improve portability | Resource allocation | Digital computer details | Virtualization | Data center

Owner:SUSE LLC

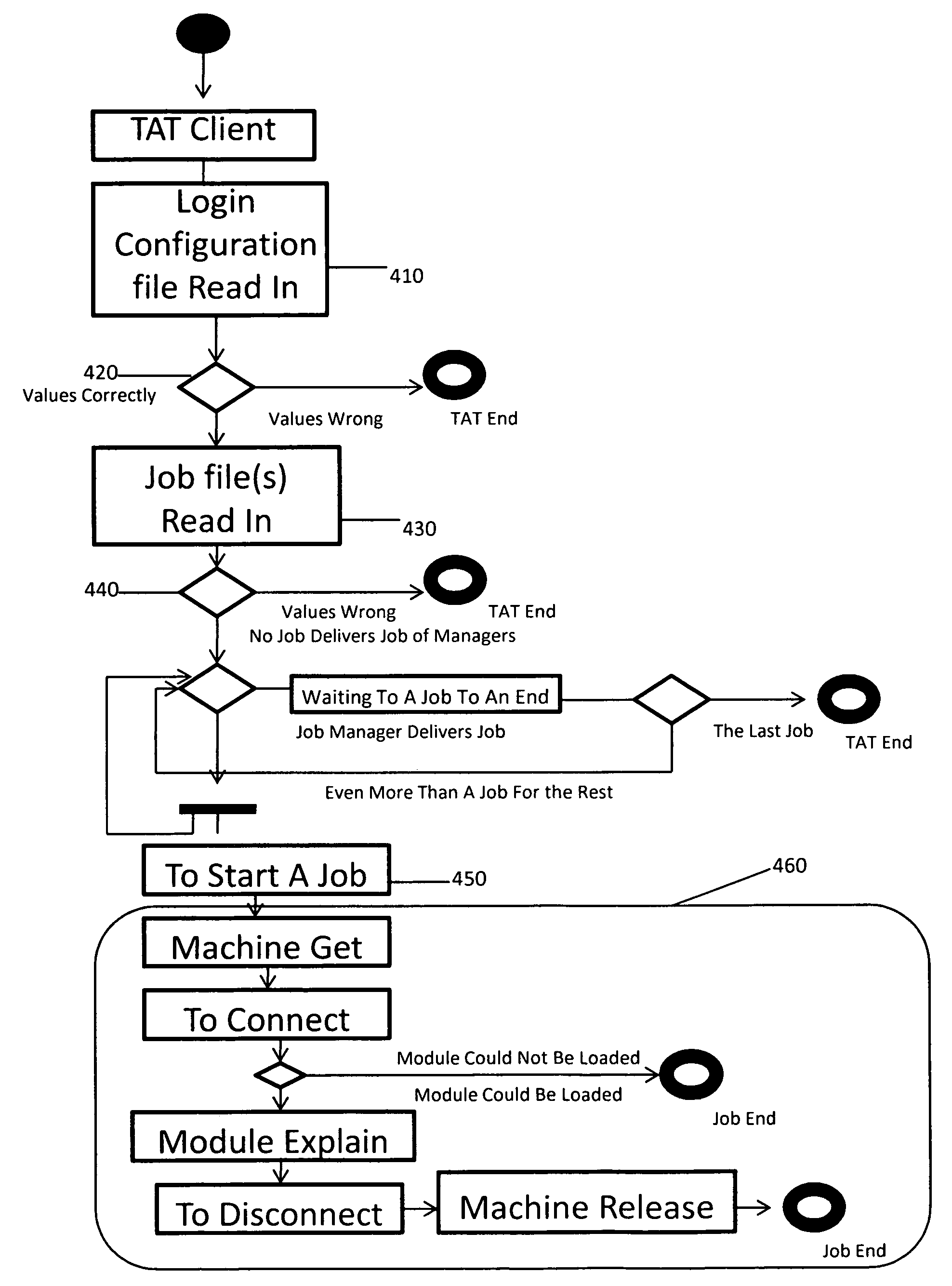

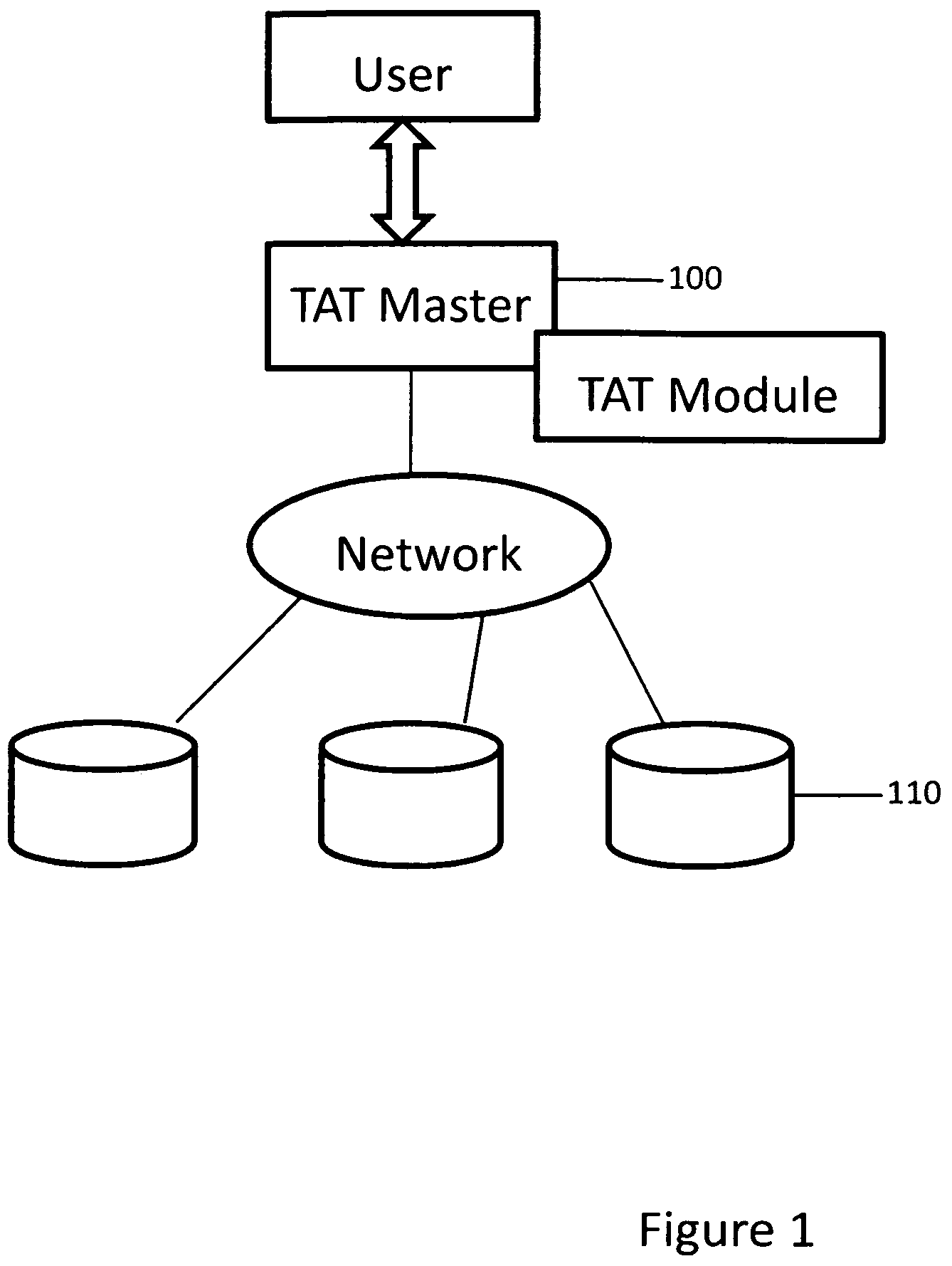

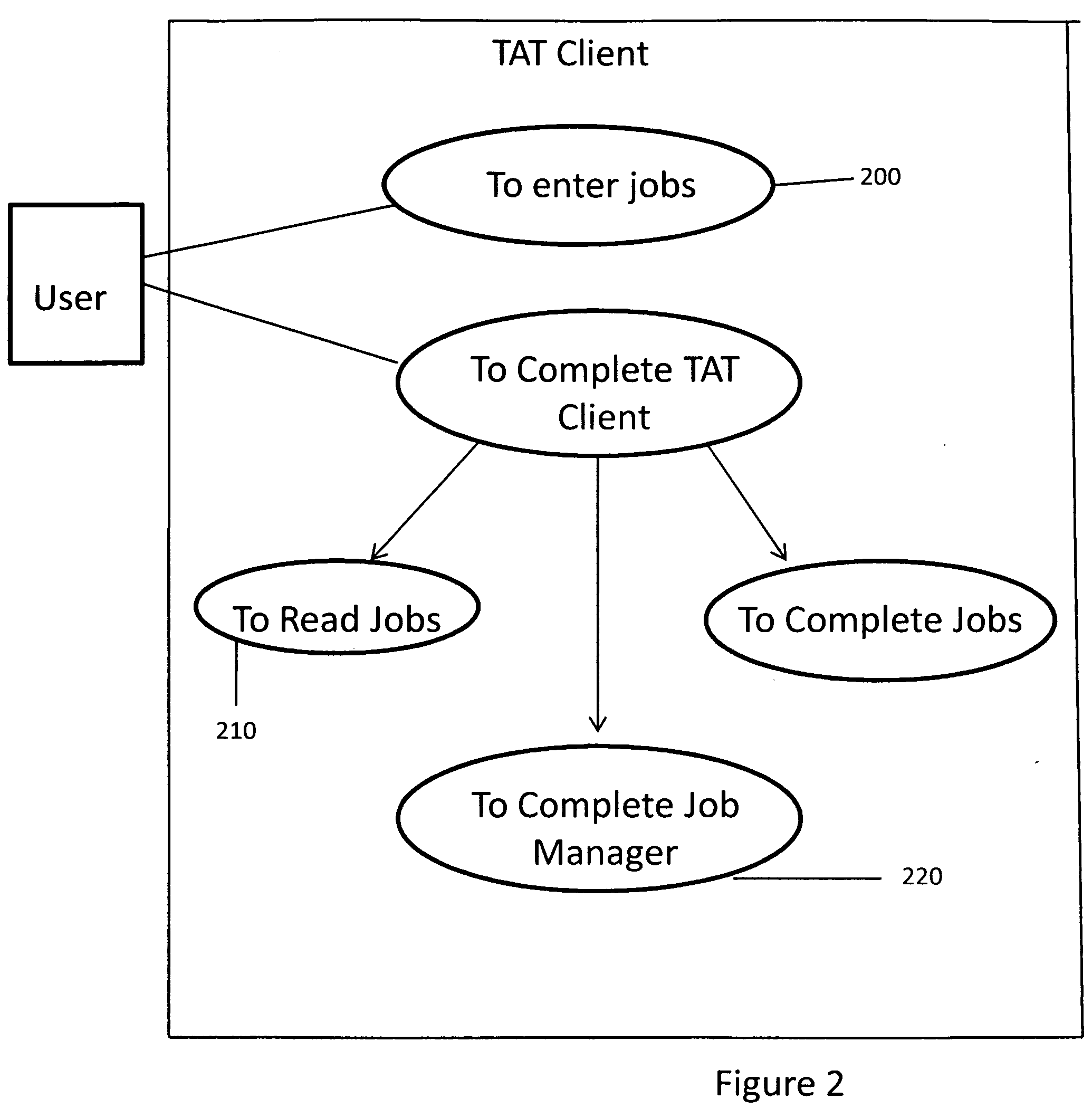

Test management system and method

Inactive | US20100146514A1 | Complex in execution | Resource allocation | Error detection/correction | Execution plan | Test management

Owner:IBM CORP

Centralized Device Virtualization Layer For Heterogeneous Processing Units

Active | US20100146620A1 | Operational speed enhancement | Resource allocation | Virtualization | Operational system

A method for providing an operating system access to devices, including enumerating hardware devices and virtualized devices, where resources associated with a first hardware device are divided into guest physical resources creating a software virtualized device, and multiple instances of resources associated with a second hardware device are advertised thereby creating a hardware virtualized device. First and second permission lists are generated that specify which operating systems are permitted to access the software virtualized device and the hardware virtualized device, respectively. First and second sets of virtual address maps are generated, where each set maps an address space associated with either the software virtualized device or the hardware virtualized device into an address space associated with each operating system included in the corresponding permission list. The method further includes arbitrating access requests from each of the plurality of operating systems based on the permission lists and the virtual address maps.

Owner:NVIDIA CORP
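
The arbitration step in the abstract above can be sketched as follows. This is only an illustration of checking a permission list and a per-OS address map before granting access; the class `VirtualDevice`, the function `arbitrate`, and the `size` field are hypothetical names, not taken from the patent.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set


@dataclass
class VirtualDevice:
    name: str
    size: int                     # bytes of device address space exposed (assumed field)
    permitted: Set[str]           # permission list: OS ids allowed to use the device
    address_maps: Dict[str, int] = field(default_factory=dict)  # OS id -> base address in that OS

    def map_into(self, os_id: str, base: int) -> None:
        """Create an address map only for operating systems on the permission list."""
        if os_id not in self.permitted:
            raise PermissionError(f"{os_id} may not access {self.name}")
        self.address_maps[os_id] = base


def arbitrate(device: VirtualDevice, os_id: str, os_addr: int) -> Optional[int]:
    """Translate an OS address to a device offset, or return None to deny the request."""
    base = device.address_maps.get(os_id)
    if os_id not in device.permitted or base is None:
        return None
    offset = os_addr - base
    return offset if 0 <= offset < device.size else None
```

A request is granted only when the requesting operating system is on the permission list, has a map, and the address falls inside the mapped window; everything else is denied.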

Shared Memory Partition Data Processing System With Hypervisor Managed Paging

Inactive | US20090307445A1 | Resource allocation | Non-redundant fault processing | Data processing system | Paging

Owner:IBM CORP

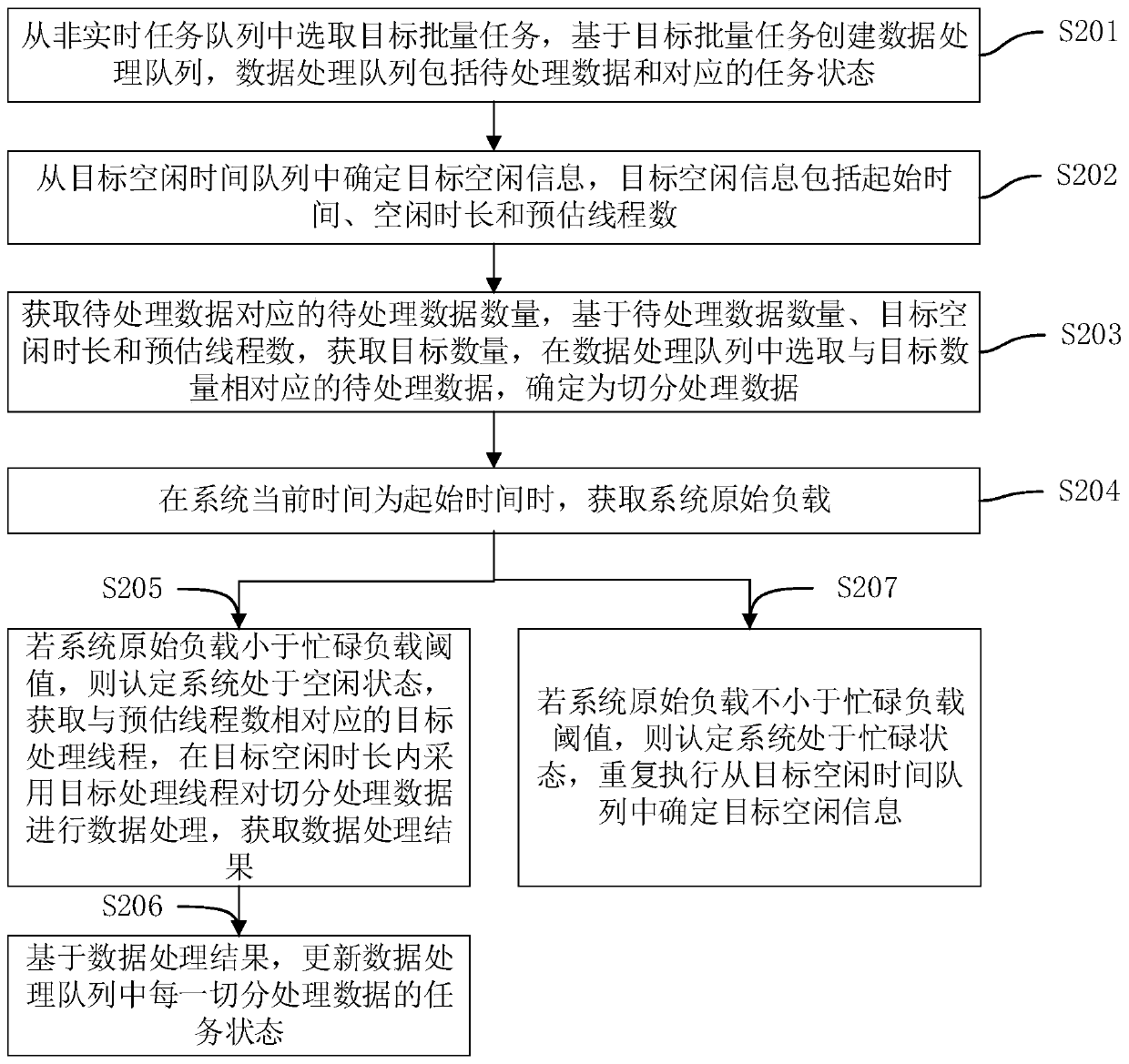

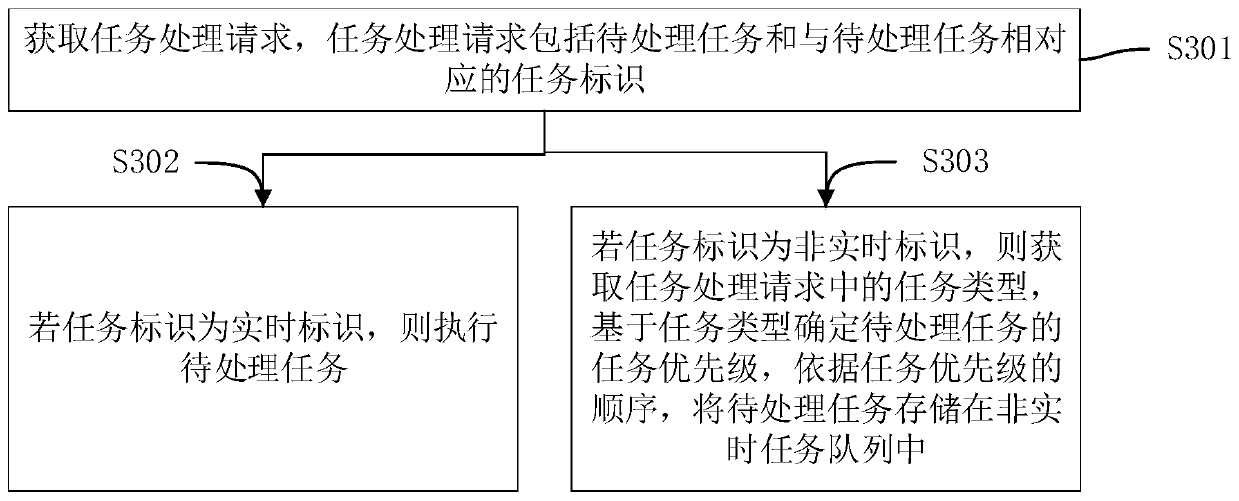

Batch data processing method and device, computer equipment and storage medium

Pending | CN110297711A | Ensure successful processing | Improve determination efficiency | Resource allocation | Machine learning | Non real time | Batch processing

Owner:PING AN TECH (SHENZHEN) CO LTD

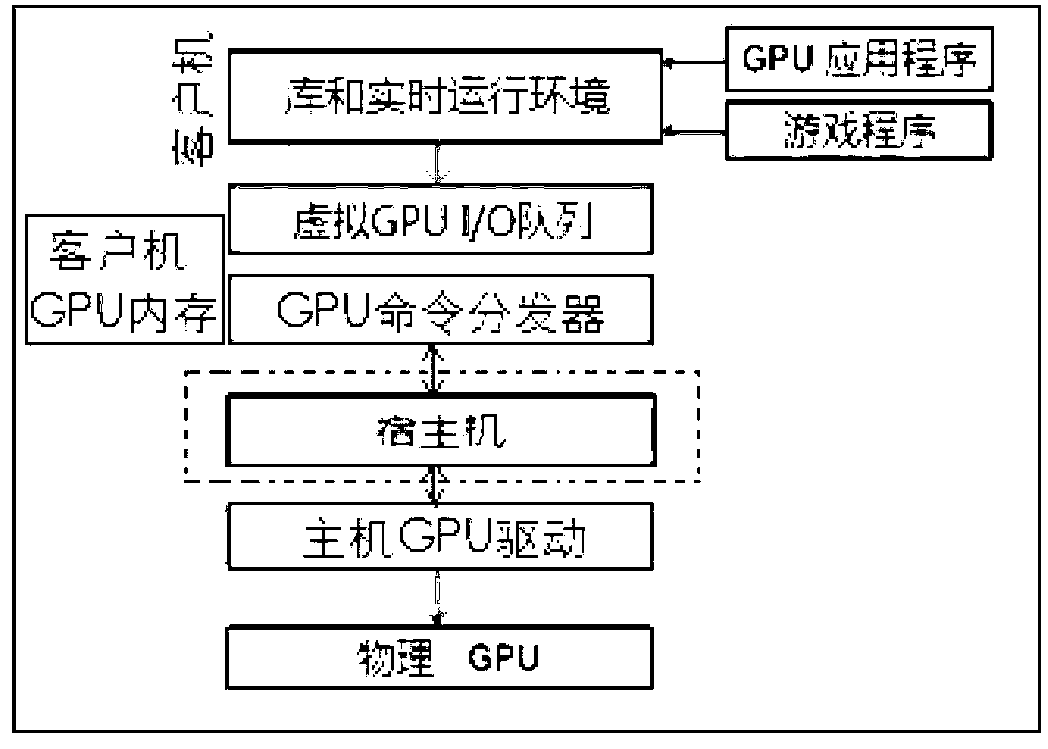

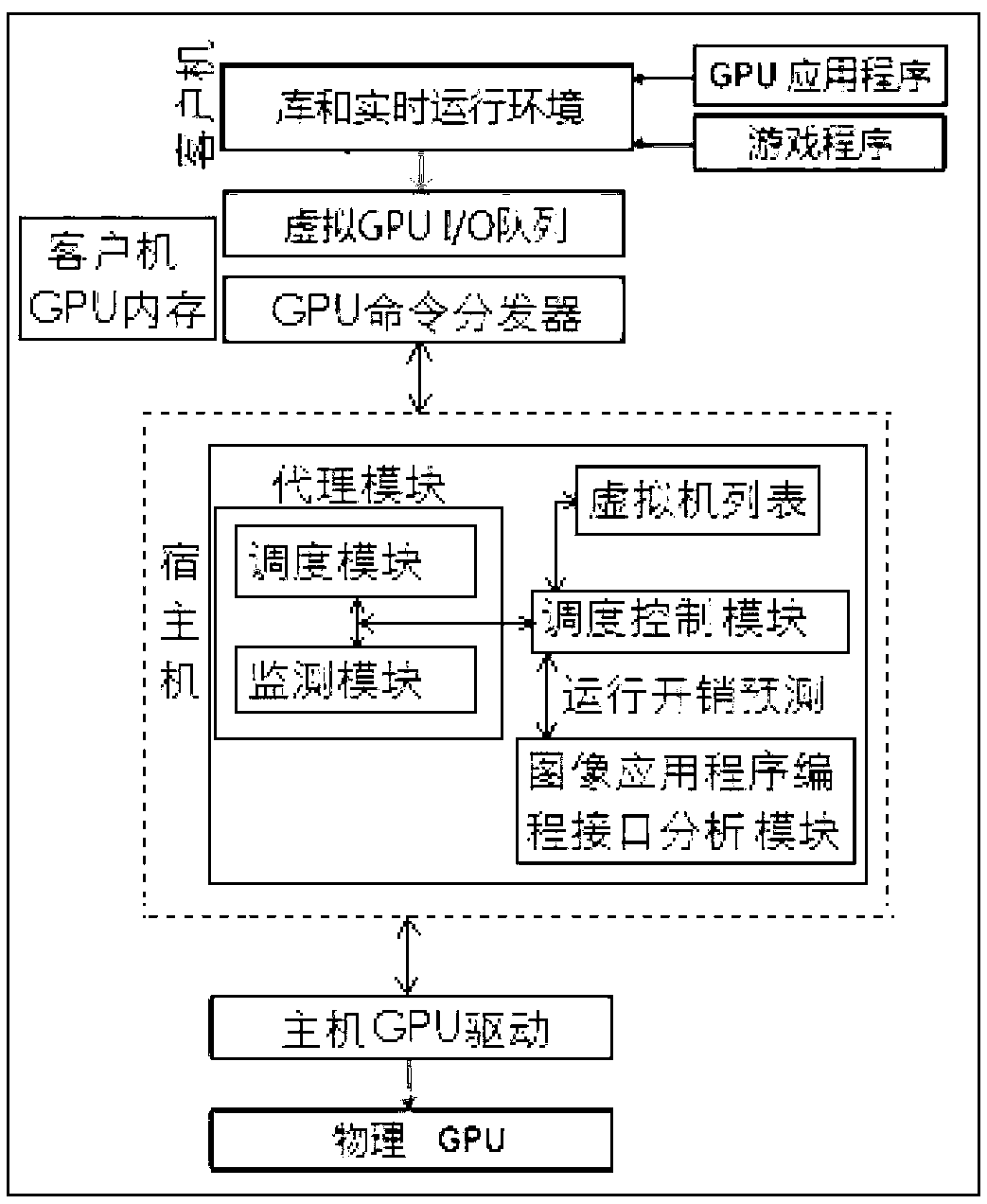

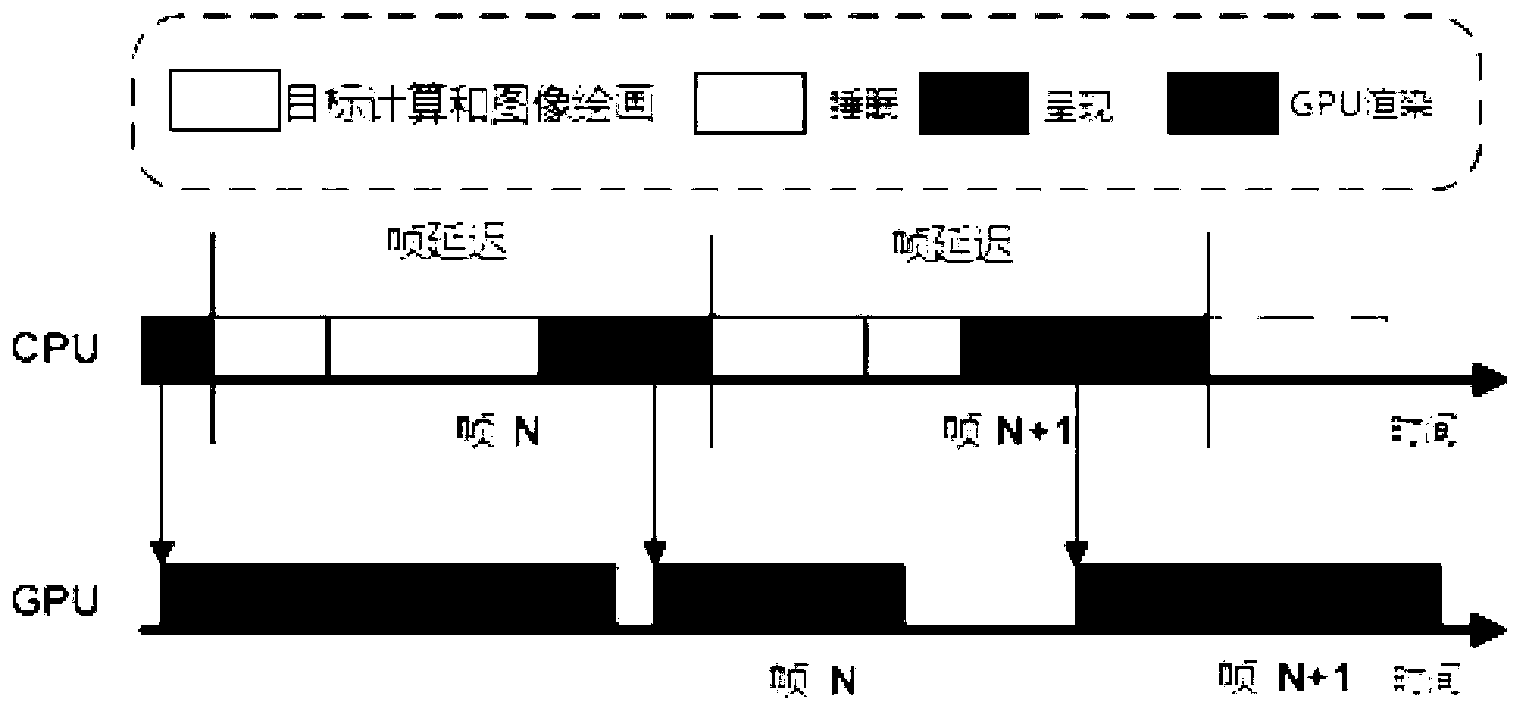

Adaptive scheduling host system and scheduling method of GPU virtual resources in cloud game

Owner:SHANGHAI JIAO TONG UNIV

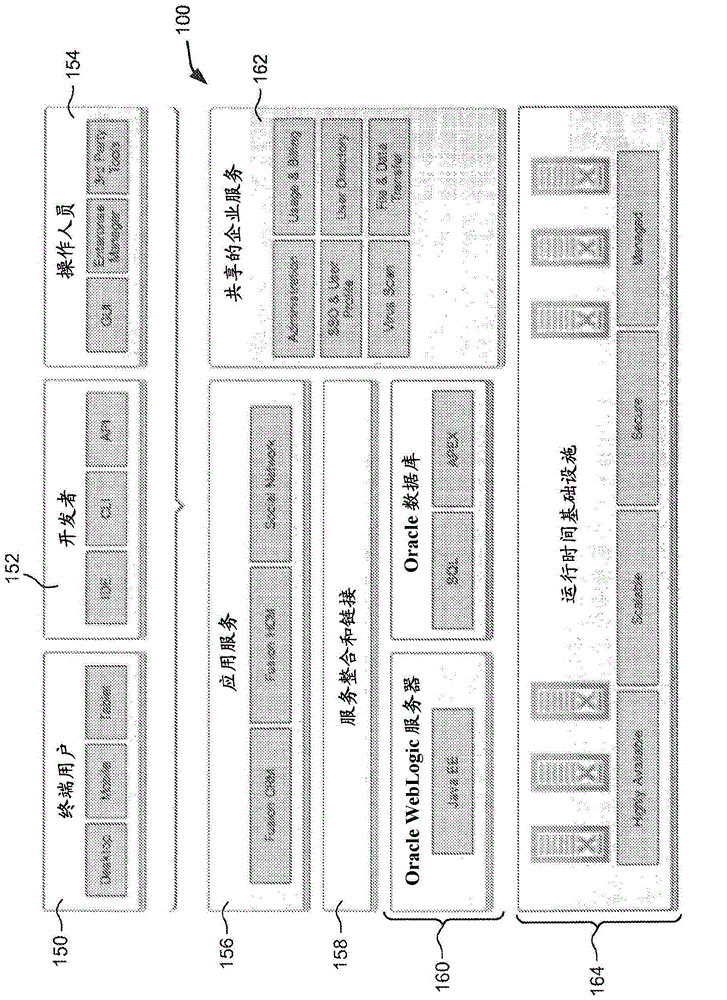

Infrastructure for providing cloud services

Owner:ORACLE INT CORP

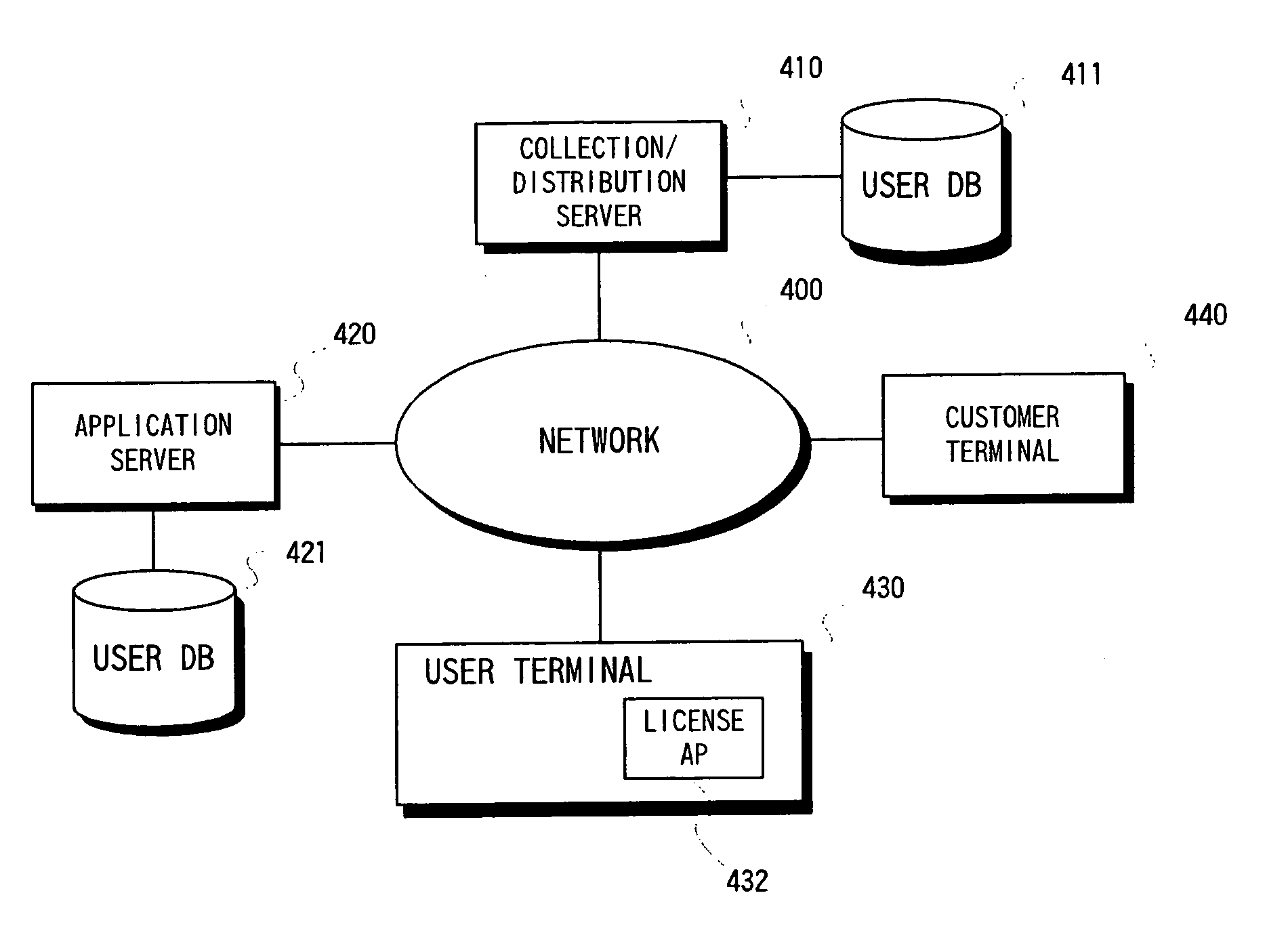

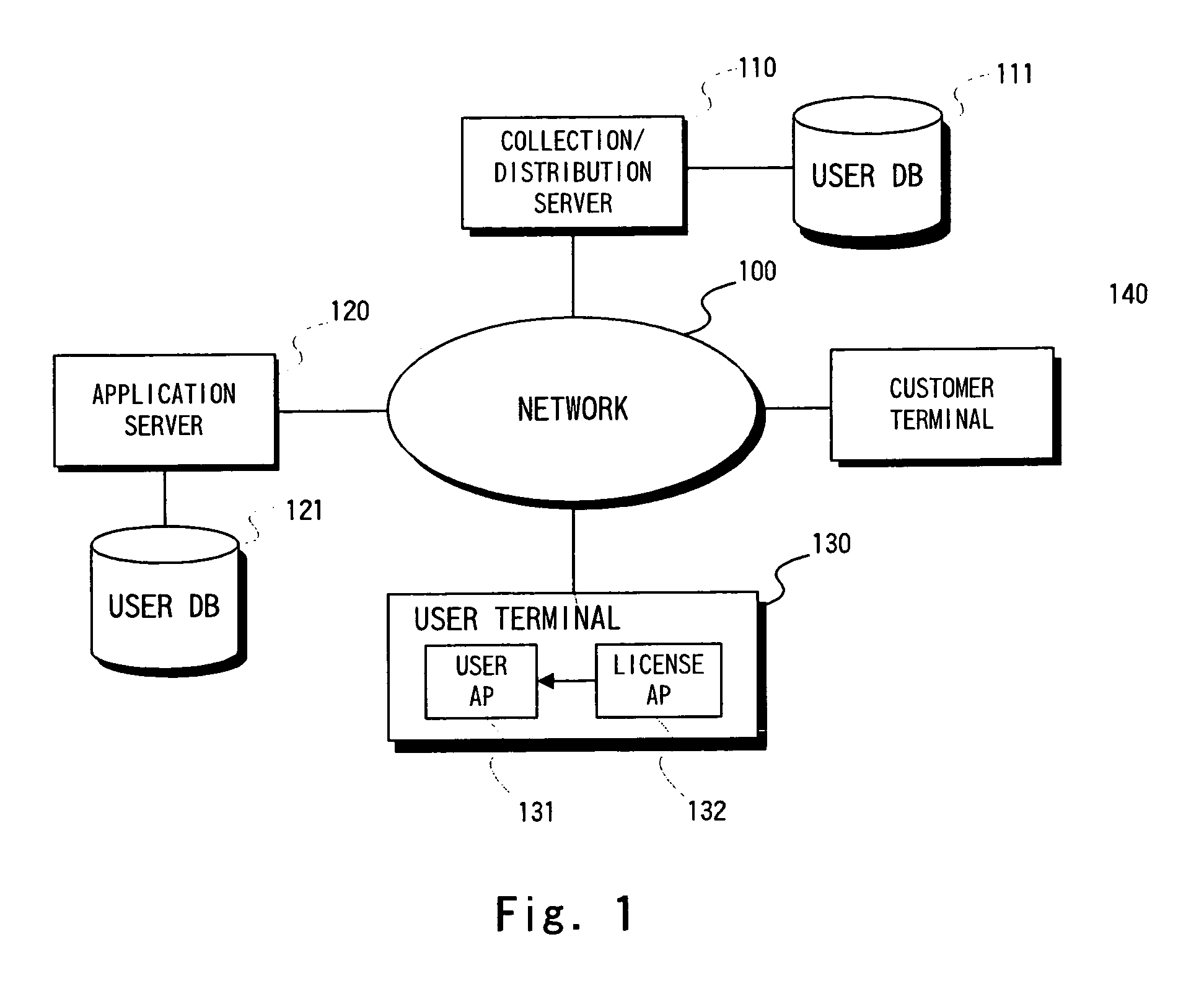

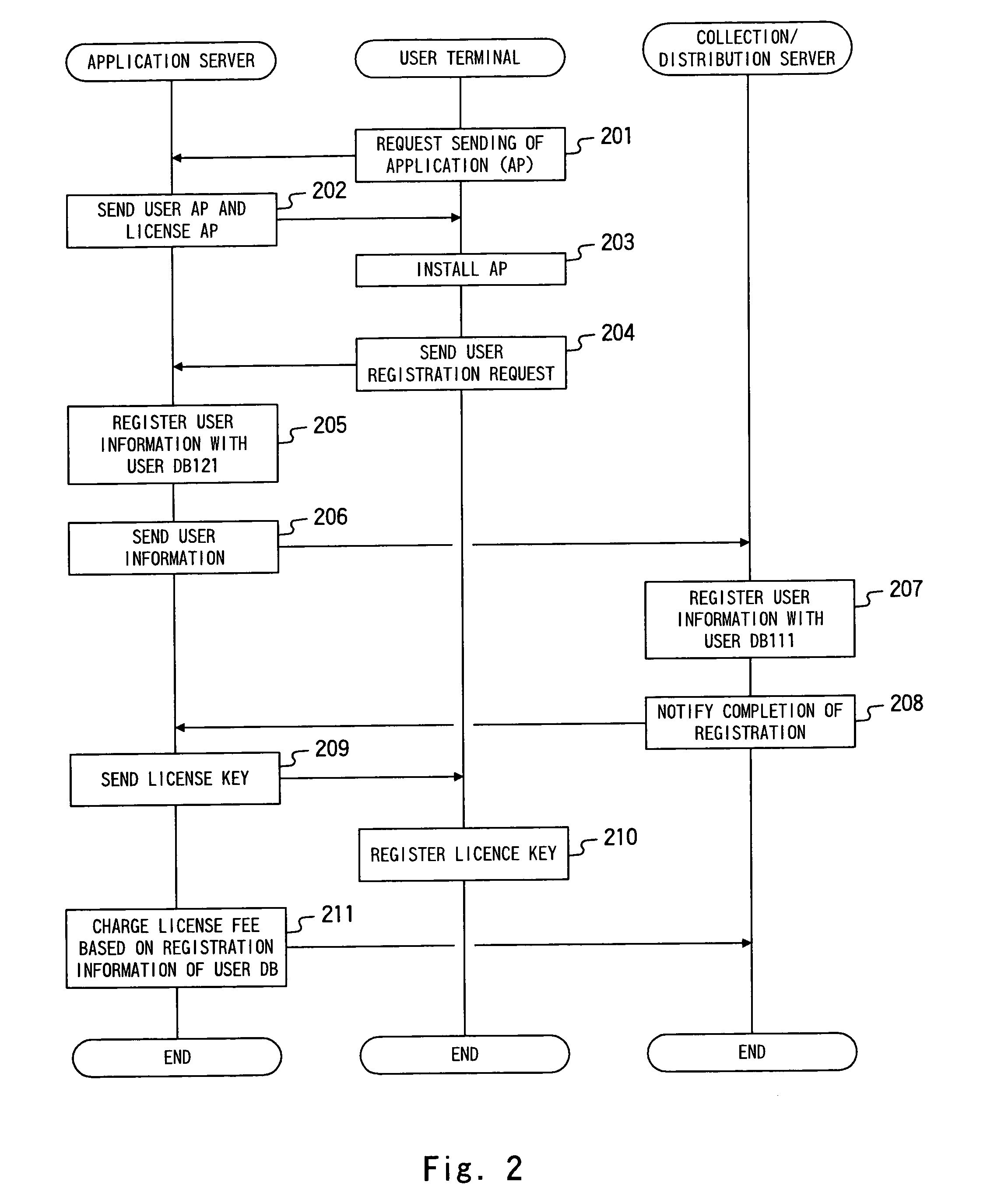

Distributed processing system, method of the same

Inactive | US7031944B2 | Increase value | Resource allocation | Multiple digital computer combinations | Application server | Computer terminal

Owner:NEC CORP

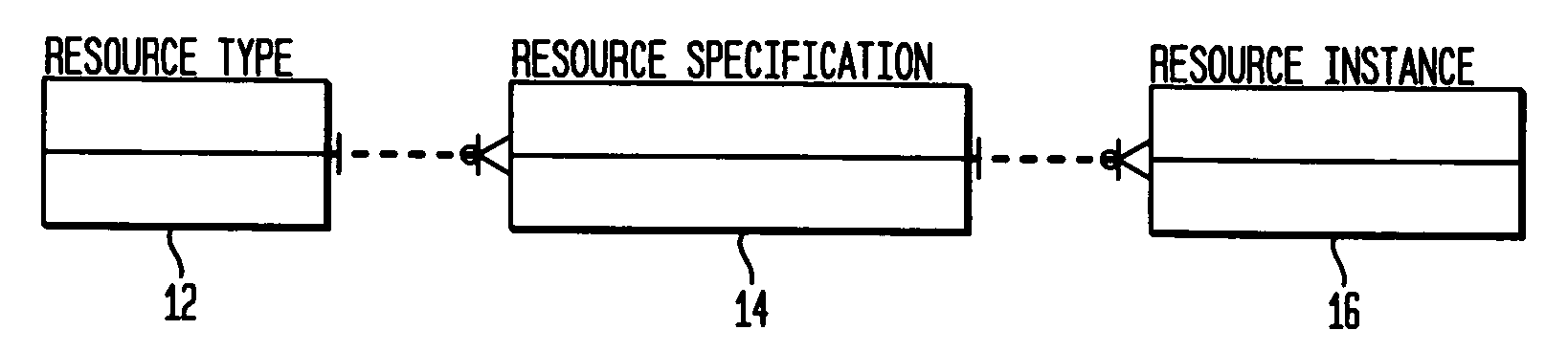

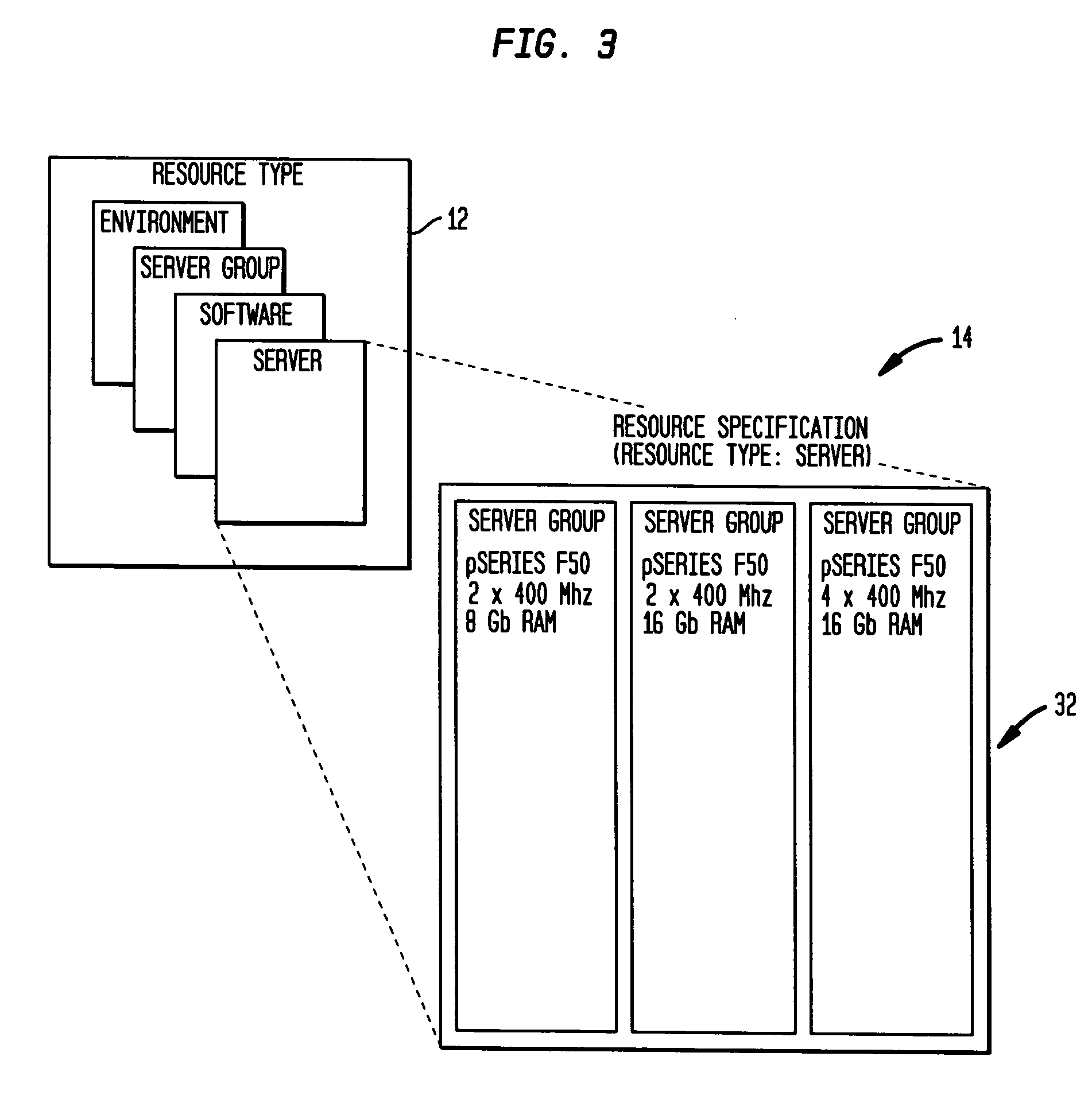

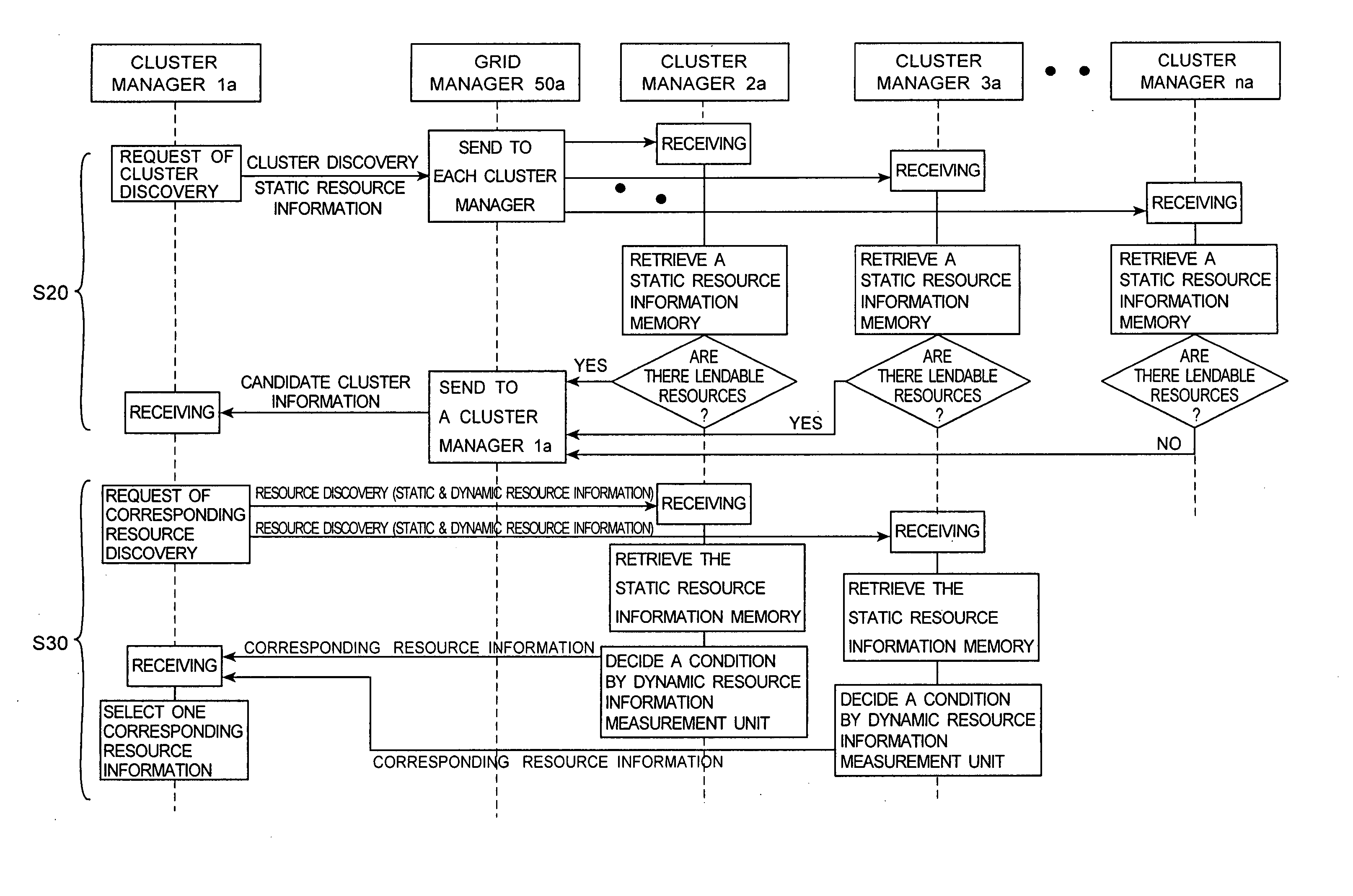

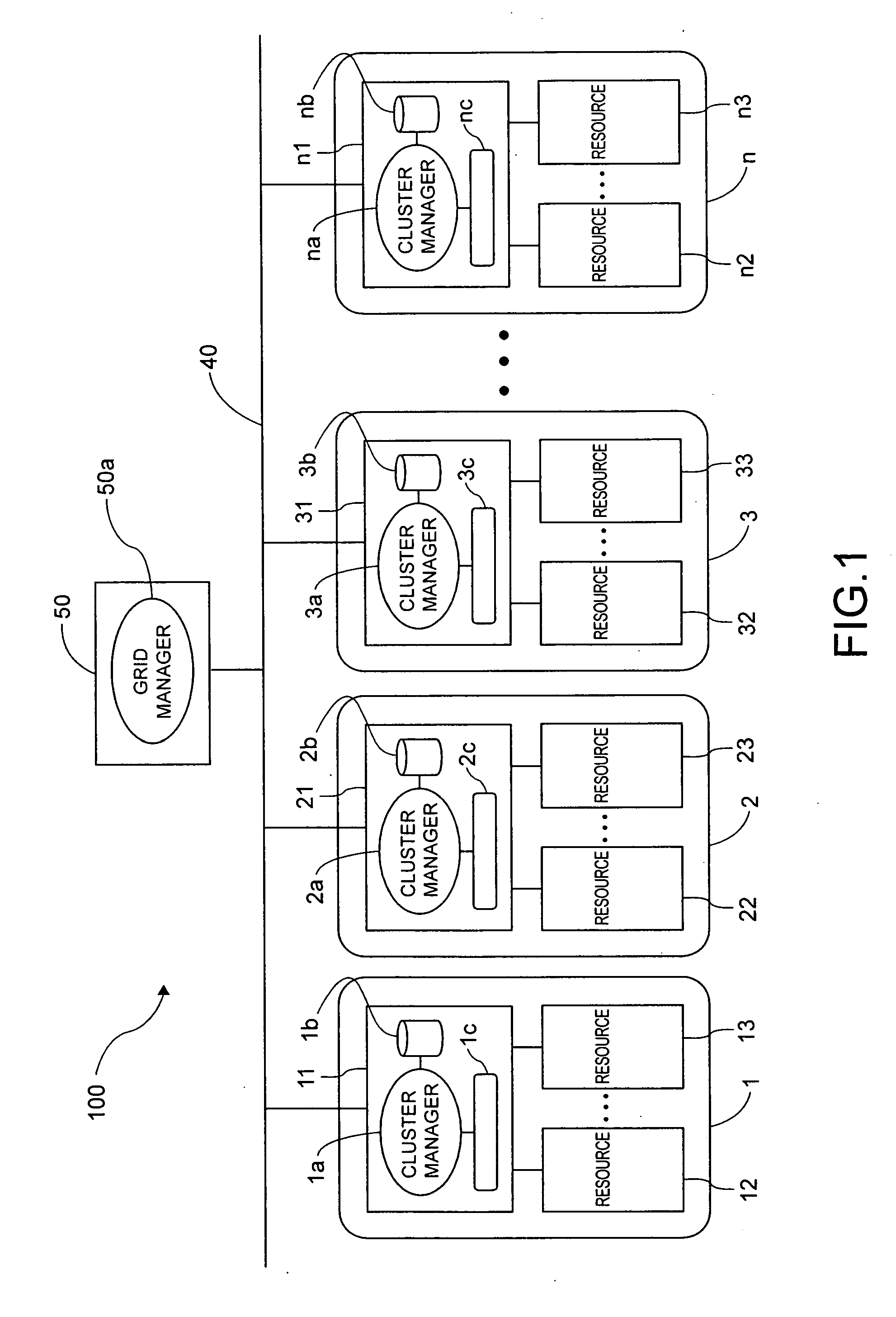

Resource discovery method and cluster manager apparatus

Inactive | US20050188191A1 | Reduce communication quantity | Reduce processing quantity | Resource allocation | Multiple digital computer combinations | Dynamic resource | Distributed computing

Owner:KK TOSHIBA

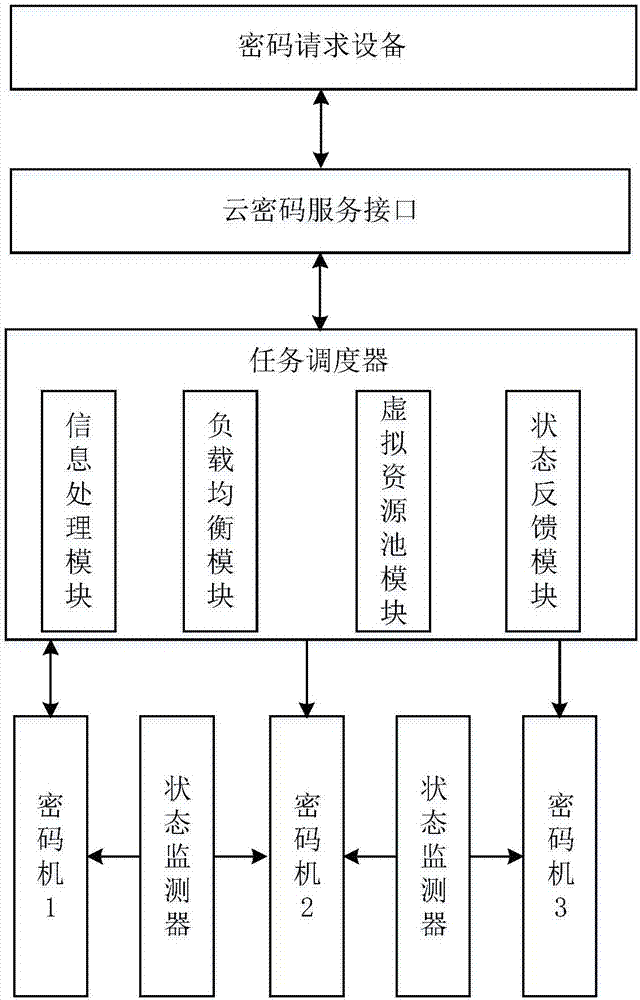

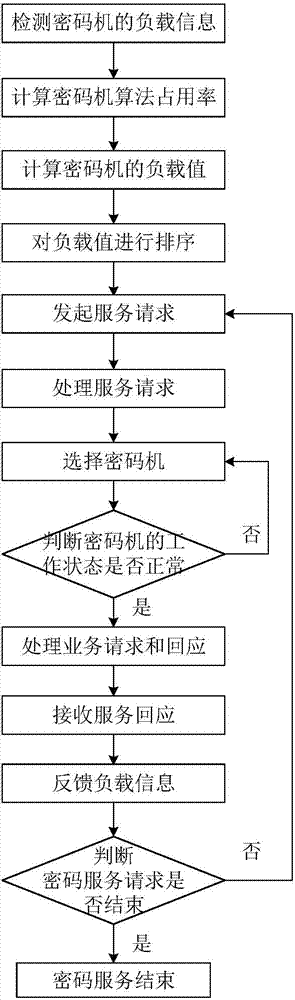

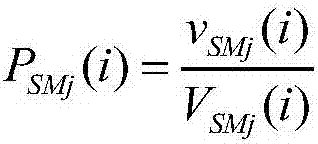

System and method for providing cryptographic service through virtual cryptographic equipment cluster

Active | CN107040589A | Realize dynamic management | Overcome underutilization | Resource allocation | Digital data protection | Occupancy rate | Virtualization

Owner:XIDIAN UNIV

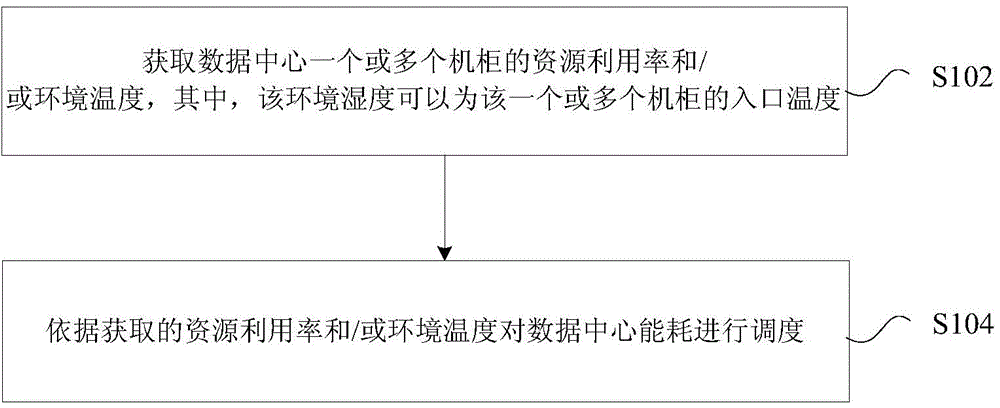

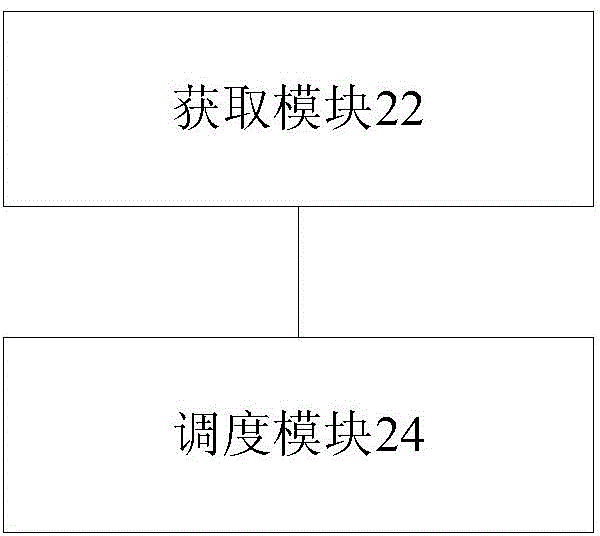

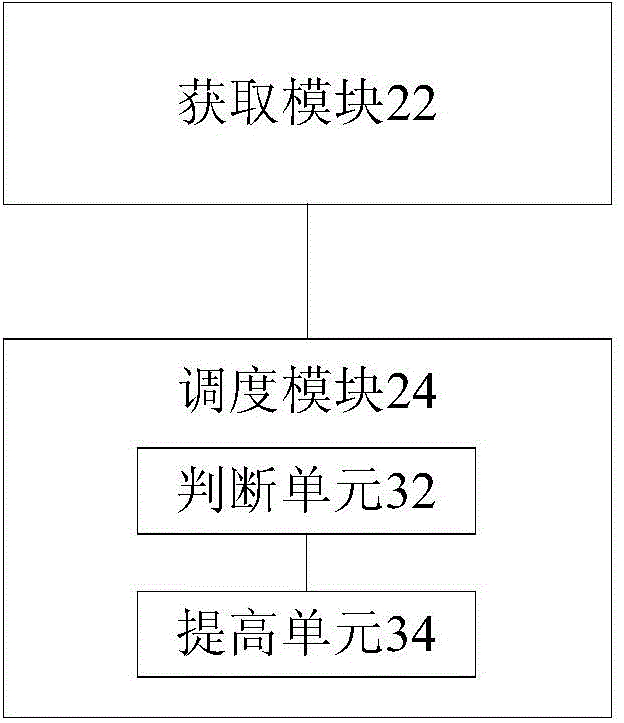

Data center energy consumption scheduling method and data center energy consumption scheduling device

Inactive | CN104423531A | Solve the problem of low energy utilization efficiency | Reduce energy consumption | Resource allocation | Power supply for data processing | Reduction treatment | Resource utilization

Owner:ZTE CORP

Allocating resources among tasks under uncertainty

Inactive | US20170277568A1 | Efficient budget | Efficient task | Resource allocation | Resources | Risk model | Operating system

A model is built of benefit of each of a plurality of computing tasks under uncertainty as a function of computing resources invested in each of the computing tasks, and a model of risk is built of each of the computing tasks under uncertainty as a function of the computing resources invested in each of the computing tasks. Risk of a task allocation is calculated with the risk model, and benefit of a task allocation is calculated with the benefit model. An allocation of the computing resources is found to increase the benefit and manage the risk. The allocation of computing resources is applied to the computing tasks.

Owner:INT BUSINESS MASCH CORP
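
One way to picture the benefit-and-risk allocation described in the abstract above is a greedy loop over discrete resource units. The abstract does not say how the allocation is found, so the greedy marginal-gain rule, the `risk_aversion` weight, and the function name `allocate` are all assumptions made for this sketch.

```python
from typing import Callable, Dict

Model = Callable[[int], float]  # maps invested resource units to benefit (or risk)


def allocate(units: int,
             benefit: Dict[str, Model],
             risk: Dict[str, Model],
             risk_aversion: float = 1.0) -> Dict[str, int]:
    """Hand out units one at a time to the task with the best risk-adjusted gain."""
    alloc = {task: 0 for task in benefit}

    def score(task: str, n: int) -> float:
        # Risk-adjusted value of investing n units in a task (assumed objective).
        return benefit[task](n) - risk_aversion * risk[task](n)

    def gain(task: str) -> float:
        return score(task, alloc[task] + 1) - score(task, alloc[task])

    for _ in range(units):
        best = max(alloc, key=gain)
        if gain(best) <= 0:   # no task improves any further; stop early
            break
        alloc[best] += 1
    return alloc
```

For example, with benefit models `10*sqrt(n)` and `4*sqrt(n)` for two tasks and a shared risk model `1/(1+n)`, four units are split three to one in favor of the higher-benefit task.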

Hierarchical weighting of donor and recipient pools for optimal reallocation in logically partitioned computer systems

Inactive | US7299469B2 | Evaluates the equivalency of donors | Resource allocation | Memory systems | Performance enhancement | Workload

A method and system for reallocating resources in a logically partitioned environment using hierarchical weighting comprising a Performance Enhancement Program (PEP), a Reallocation Program (RP), and a Hierarchical Weighting Program (HWP). The PEP allows an administrator to designate several performance parameters and rank the priority of the resources. The RP compiles the performance data for the resources and calculates a composite parameter, a recipient workload ratio, and a donor workload ratio. The RP determines the donors and recipients. RP allocates the resources from the donors to the recipients using the HWP. The HWP evaluates and ranks the equivalency of donors and recipients based on the noise factor. HWP then reallocates the resource in each class and subclass from the highest ranked donor to the highest ranked recipient. The RP continues to monitor and update the workload statistics based on either a moving window or a discrete window sampling system.

Owner:GOOGLE LLC
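
The donor and recipient selection in the abstract above can be sketched roughly as below. The abstract does not define the "noise factor" used for ranking, so this sketch ranks by a plain workload ratio (used over allocated) instead; the thresholds `donor_max` and `recipient_min` and all names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Partition:
    name: str
    allocated: int   # resource units currently assigned to the partition
    used: float      # average units in use over the sampling window

    @property
    def ratio(self) -> float:
        """Workload ratio: how much of the allocation is actually in use."""
        return self.used / self.allocated


def reallocate_once(parts: List[Partition],
                    donor_max: float = 0.4,
                    recipient_min: float = 0.9) -> Optional[Tuple[str, str]]:
    """Move one unit from the idlest donor to the busiest recipient, if both exist."""
    donors = [p for p in parts if p.ratio <= donor_max and p.allocated > 1]
    recipients = [p for p in parts if p.ratio >= recipient_min]
    if not donors or not recipients:
        return None
    donor = min(donors, key=lambda p: p.ratio)          # highest-ranked donor
    recipient = max(recipients, key=lambda p: p.ratio)  # highest-ranked recipient
    donor.allocated -= 1
    recipient.allocated += 1
    return donor.name, recipient.name
```

Calling `reallocate_once` repeatedly on fresh window statistics mirrors the monitoring loop in the abstract: each call re-derives the donor and recipient sets from the current ratios.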

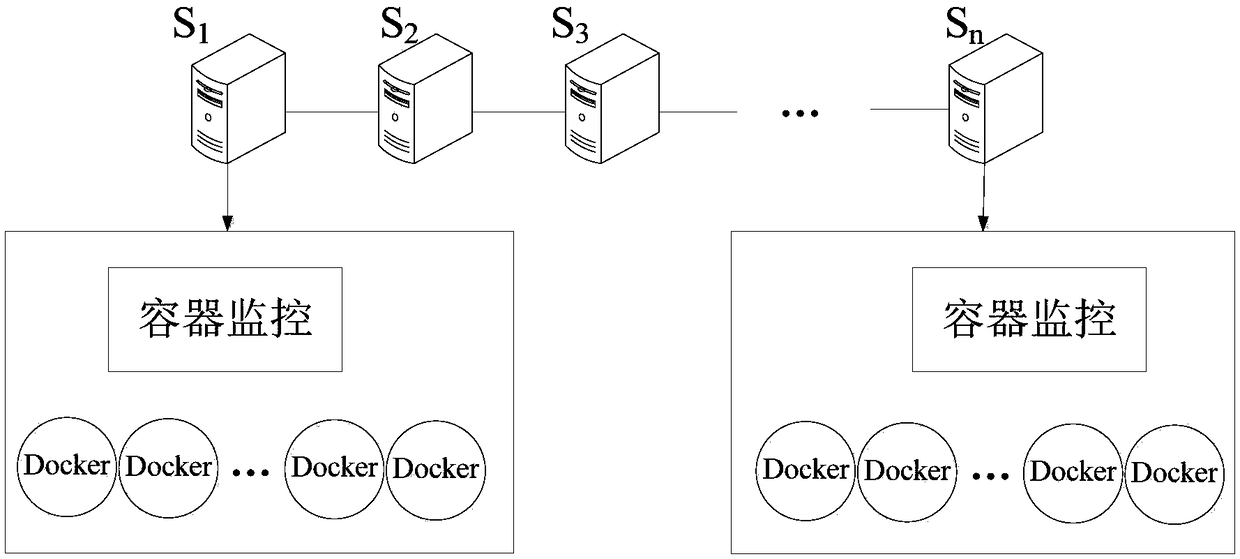

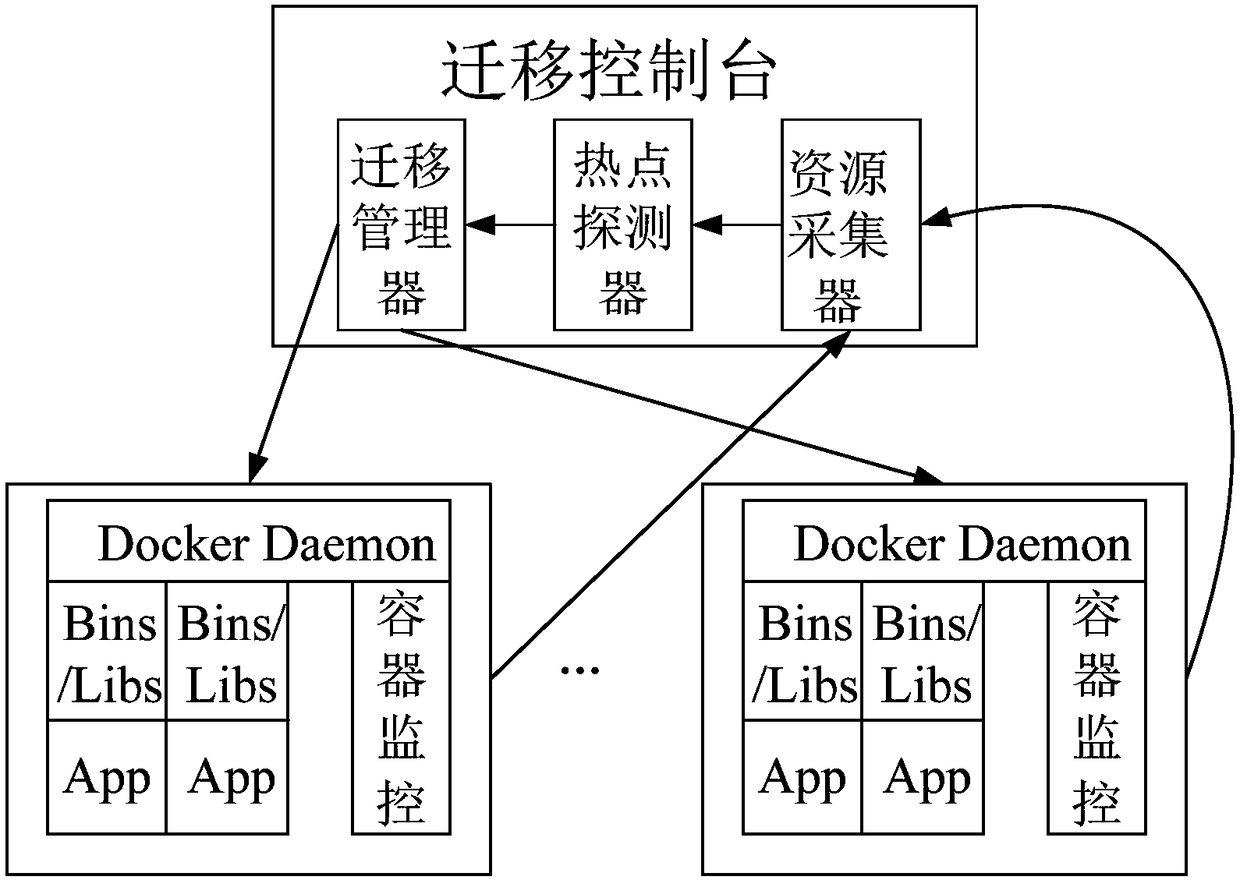

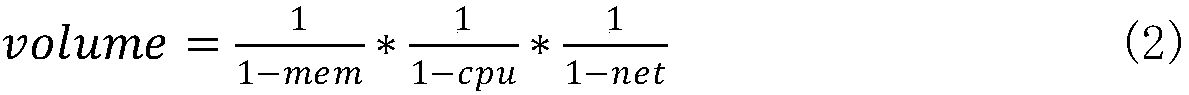

Local dynamic migration method and control system based on Docker container technology

Pending | CN108182105A | Resource allocation | Software simulation/interpretation/emulation | Control system | Resource utilization

Owner:SUZHOU UNIV

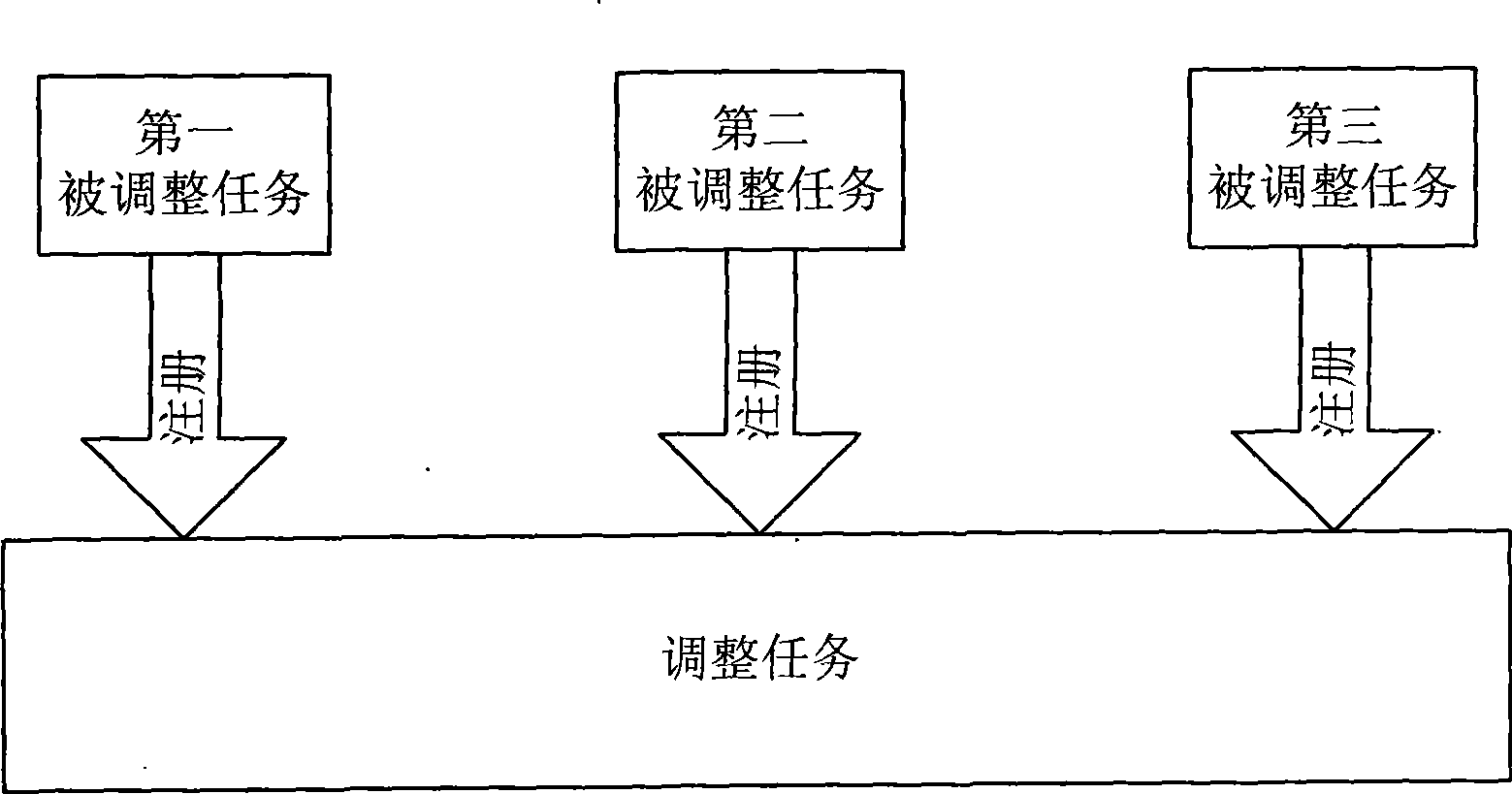

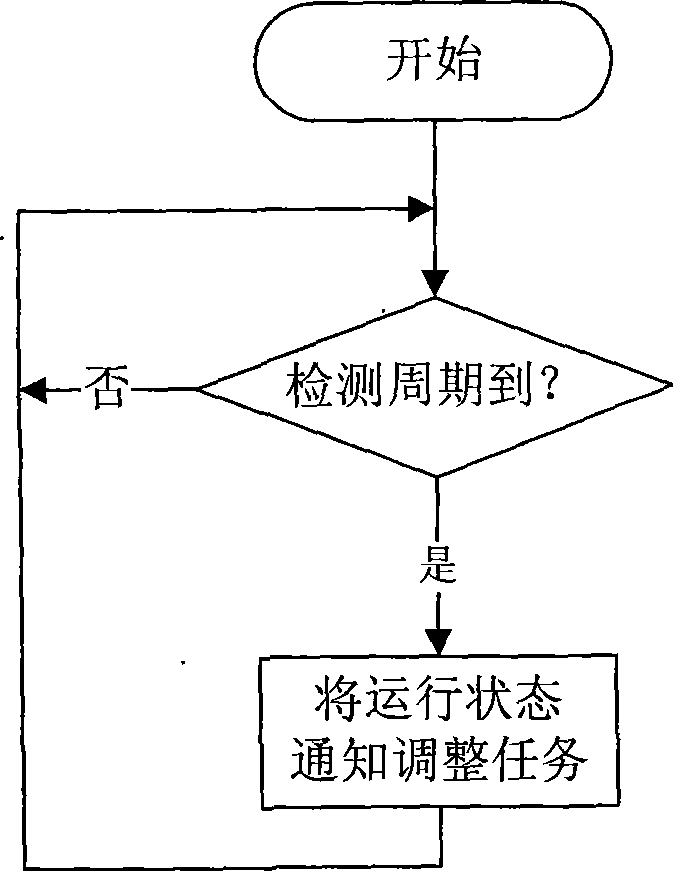

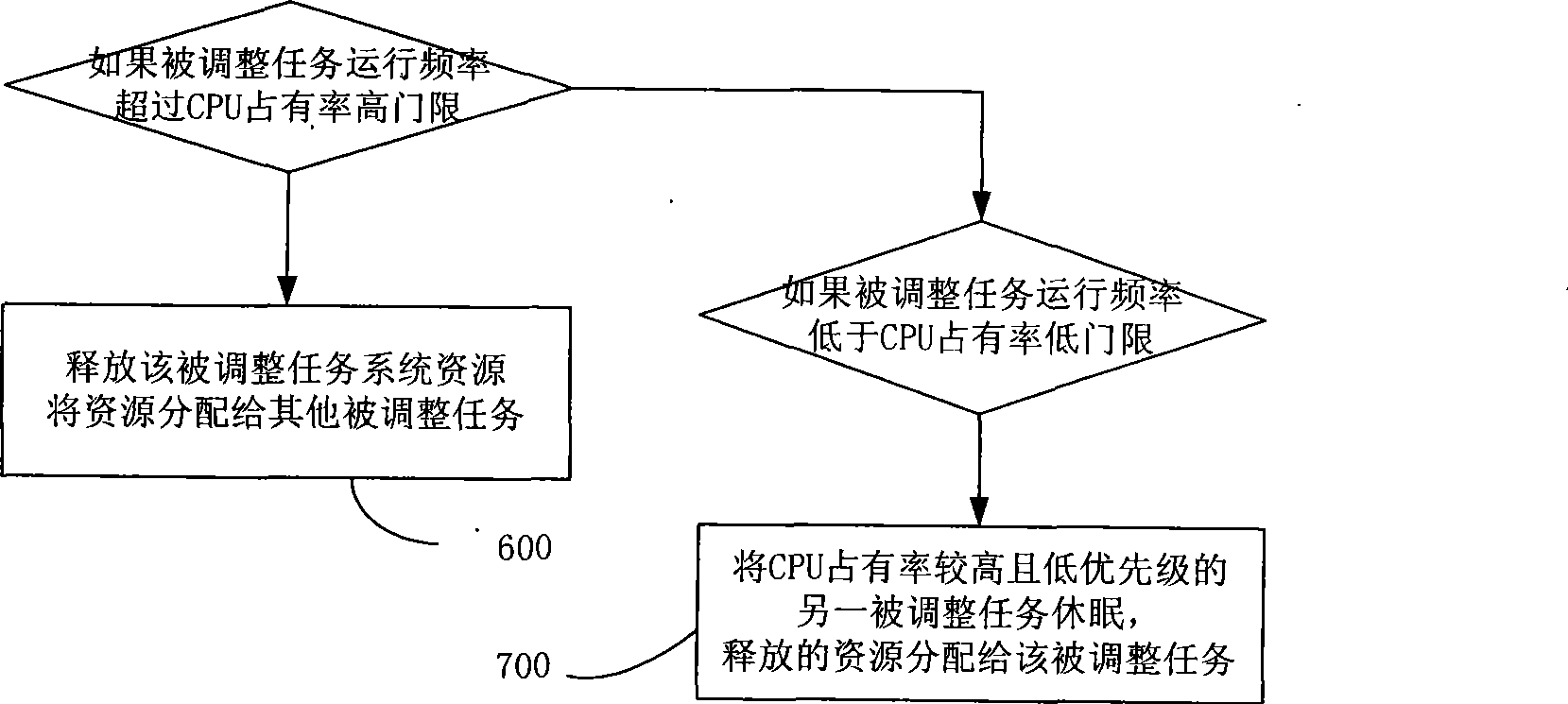

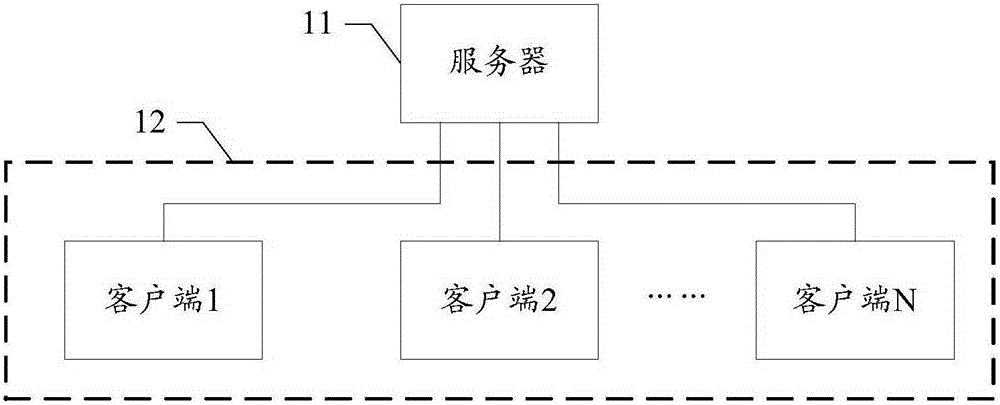

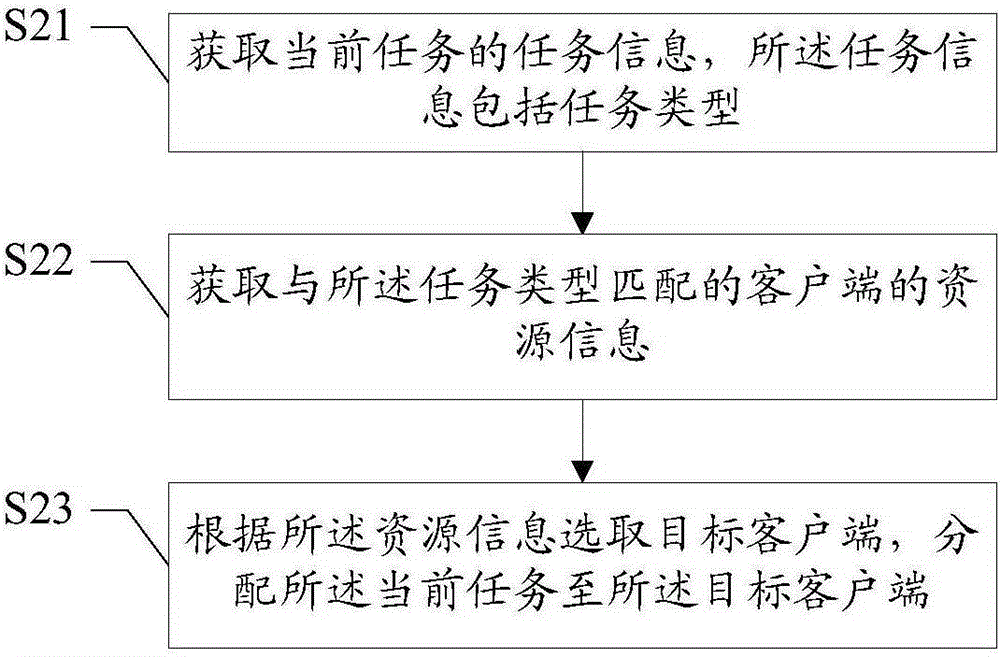

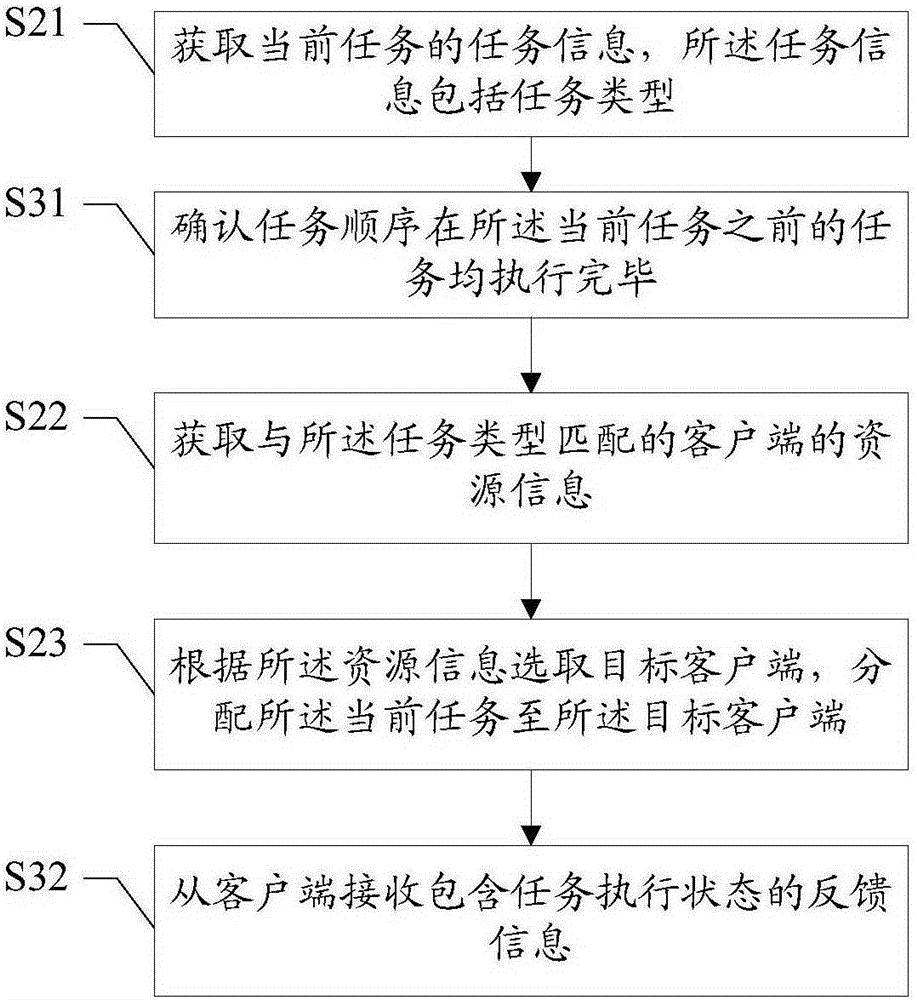

Task scheduling method and apparatus

Inactive | CN106557471A | Resource allocation | Special data processing applications | Operating system | Resource information

Owner:SAIC MOTOR

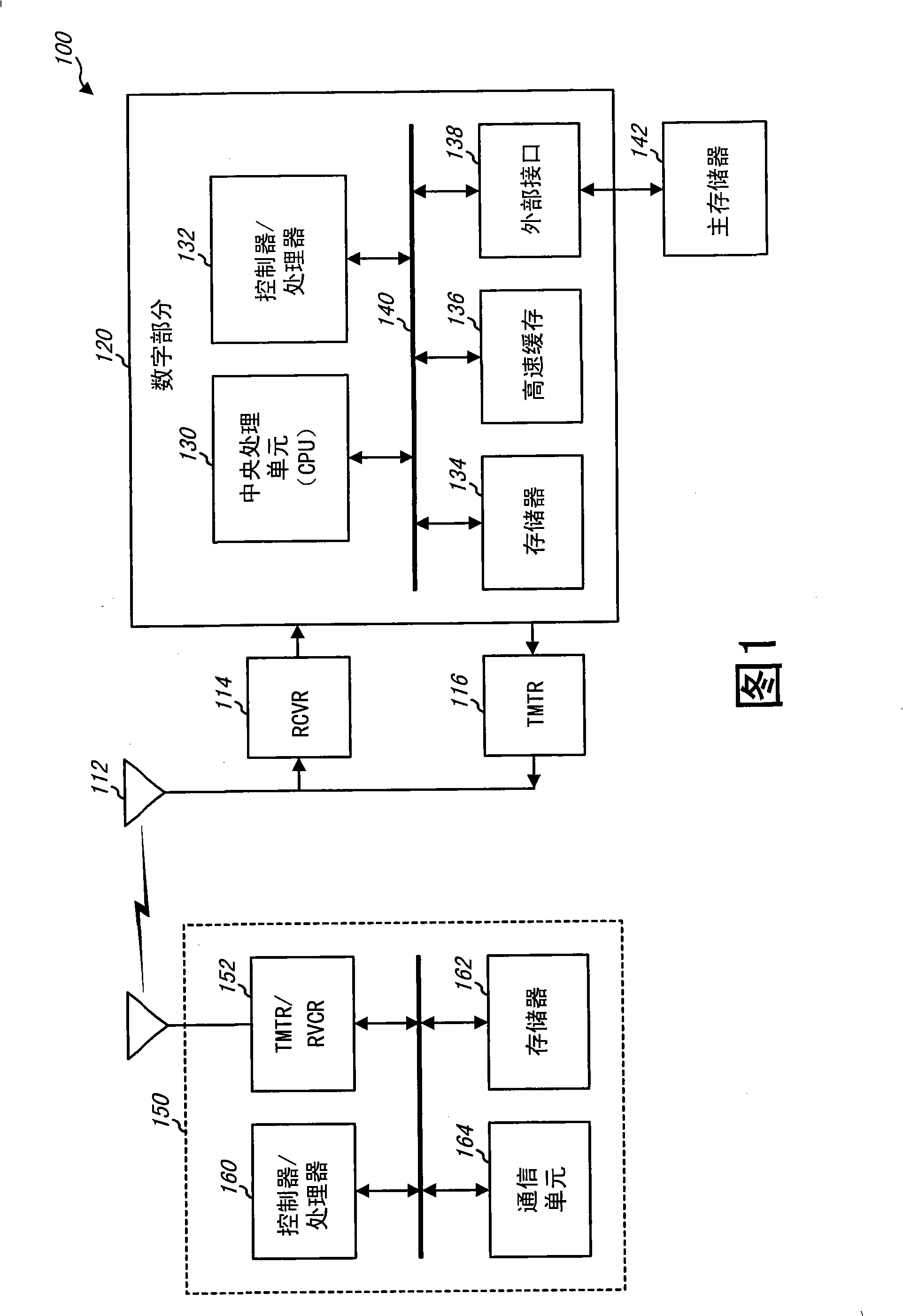

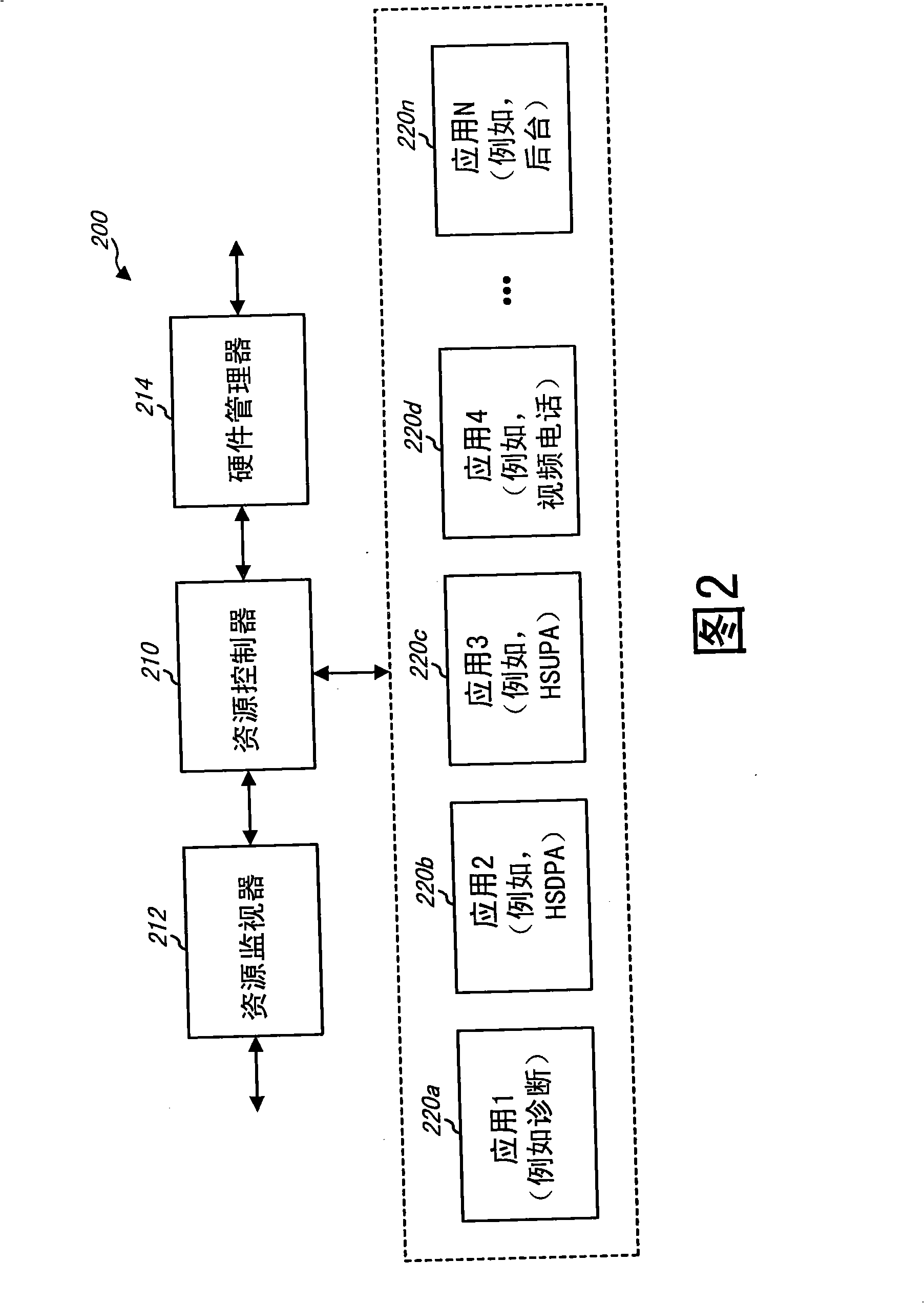

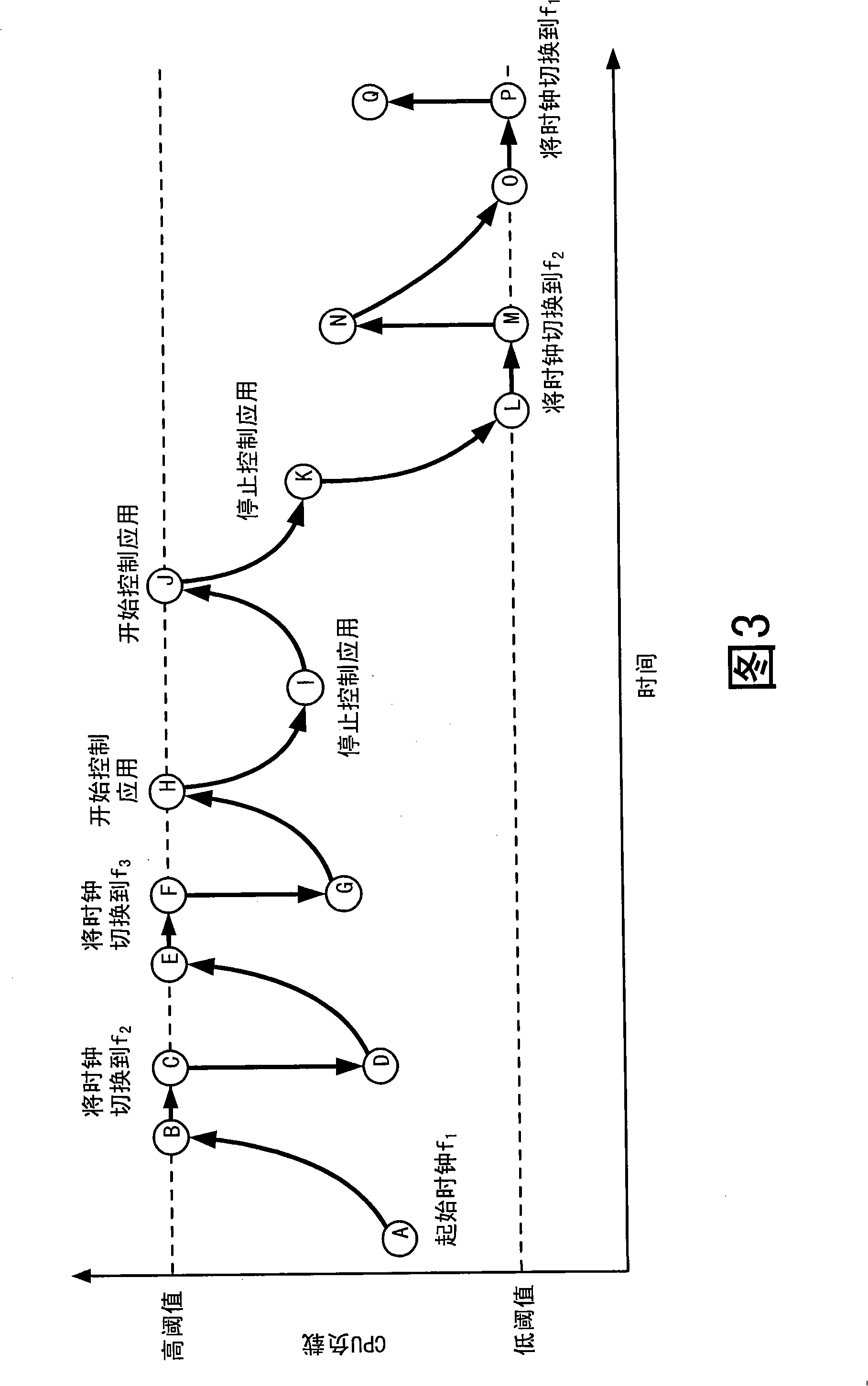

Method and apparatus for managing resources at a wireless device

Owner:QUALCOMM INC

Real-time task calculation control method, device, server and storage medium

Inactive | CN110377429A | Improve development efficiency | Fast and efficient real-time data processing | Program initiation/switching | Resource allocation | Real-time data | Data source

The invention discloses a real-time task calculation control method, a real-time task calculation control device, a server and a storage medium. The method comprises: obtaining the information of a data source, which is configured and input through a first page; obtaining calculation result storage information for the results computed from the data source, which is configured and input through a second page; obtaining calculation logic formed by a page-based SQL control chain, which is configured and input through a third page, where the SQL control chain comprises one or more page-based controllable SQL controls and the calculation logic is the execution logic for calculating the data source; and packaging the information of the data source, the calculation result storage information and the calculation logic of the same real-time task into an executable file, and sending the executable file to a cluster system for running. According to the technical scheme, real-time data processing is completed rapidly and efficiently through SQL control operations and SQL statement writing.

Owner:SHENZHEN LEXIN SOFTWARE TECH CO LTD
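
The packaging step in the abstract above bundles three page inputs into one artifact. A minimal sketch, assuming a JSON descriptor stands in for the "executable file" (the abstract does not specify its format); the class `RealTimeTask` and its field names are hypothetical.

```python
import json
from dataclasses import asdict, dataclass
from typing import Dict, List


@dataclass
class RealTimeTask:
    source: Dict[str, str]   # data-source information (first page)
    sink: Dict[str, str]     # calculation-result storage information (second page)
    sql_chain: List[str]     # ordered SQL controls forming the calculation logic (third page)

    def package(self) -> str:
        """Serialize the three parts of one task into a single descriptor."""
        if not self.sql_chain:
            raise ValueError("calculation logic needs at least one SQL control")
        return json.dumps(asdict(self), sort_keys=True)
```

Keeping source, sink, and logic in one descriptor means the cluster receives everything it needs for a task in a single submission.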

Apparatus for resource management in a real-time embedded system

Owner:TELOGY NETWORKS

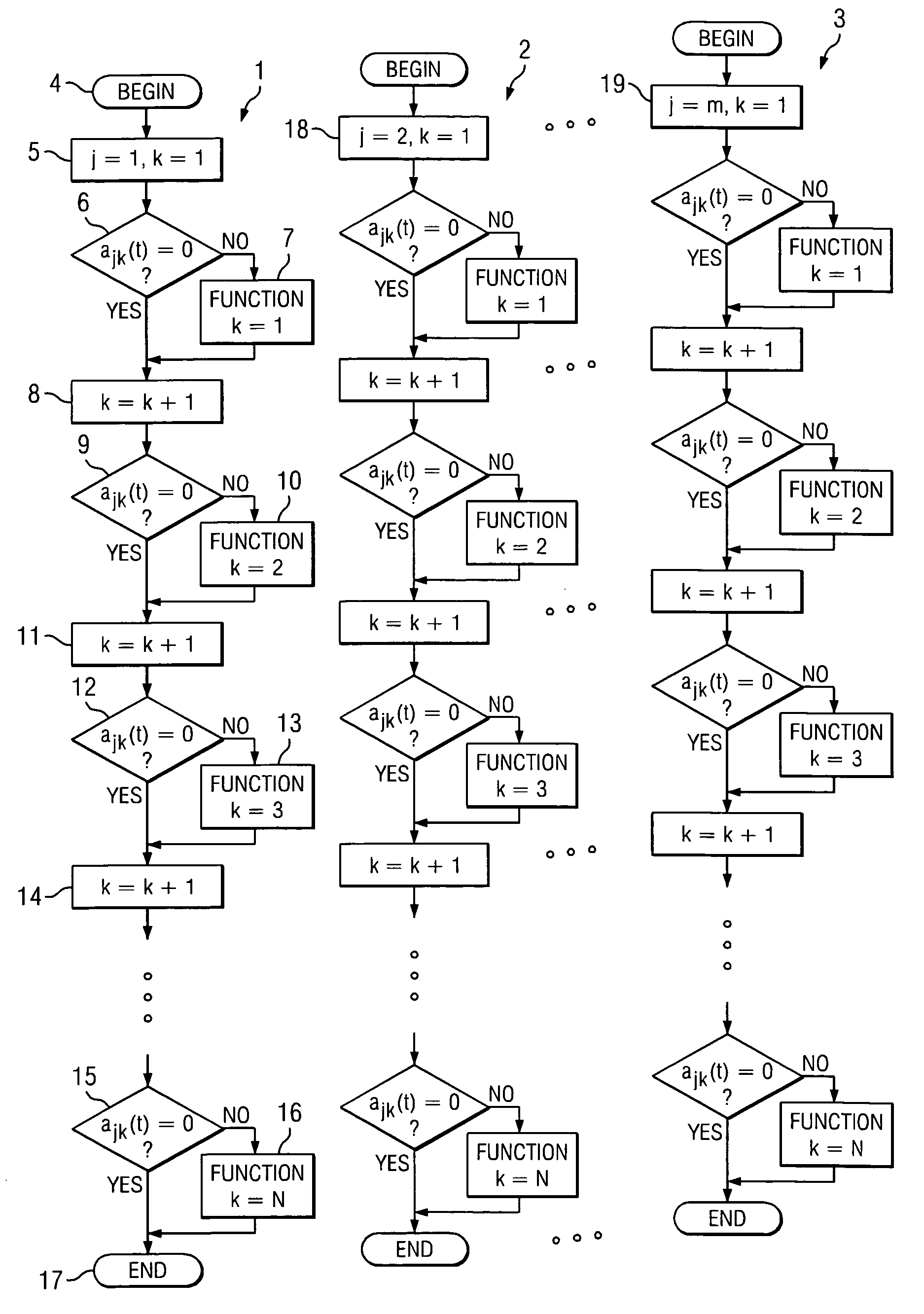

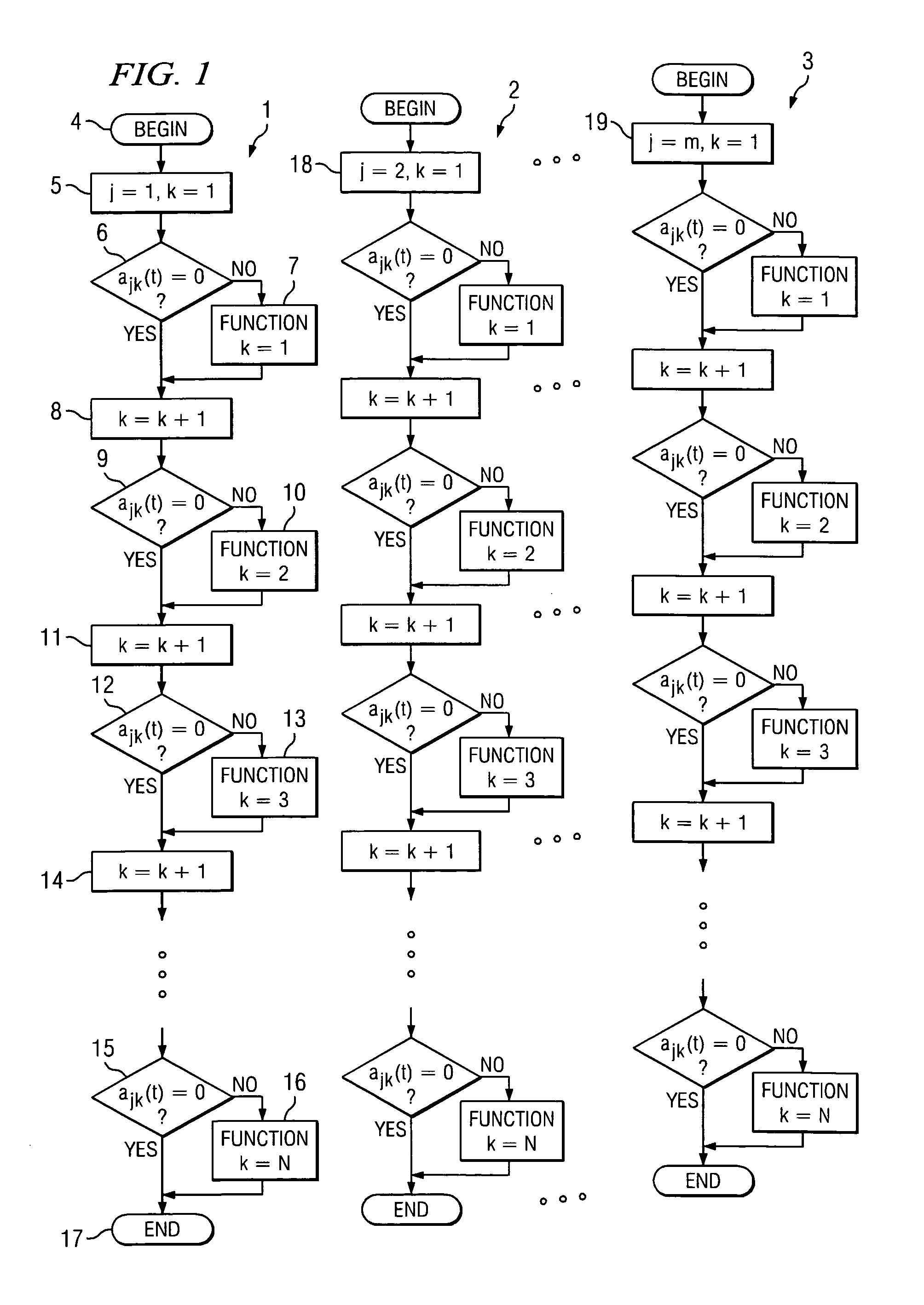

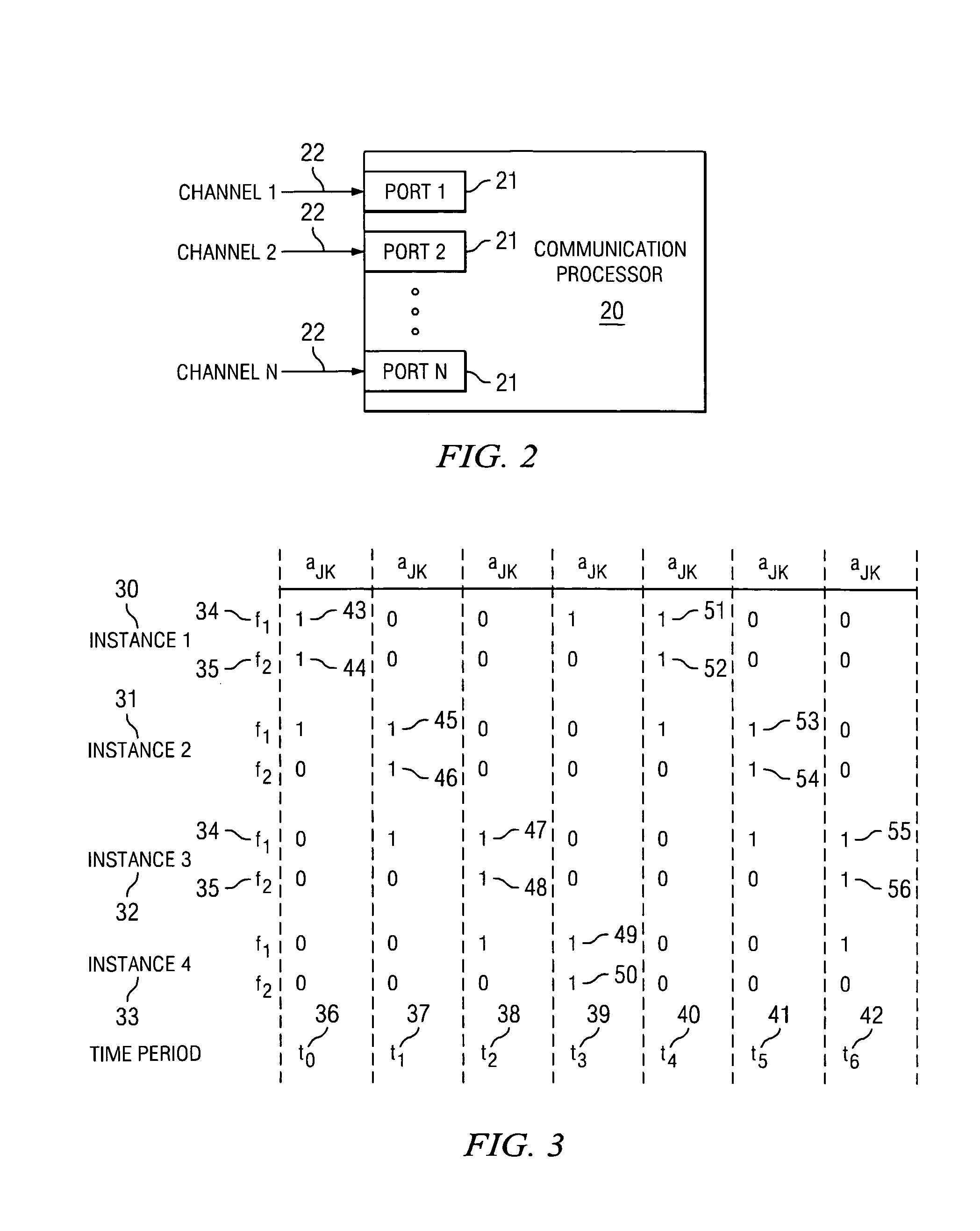

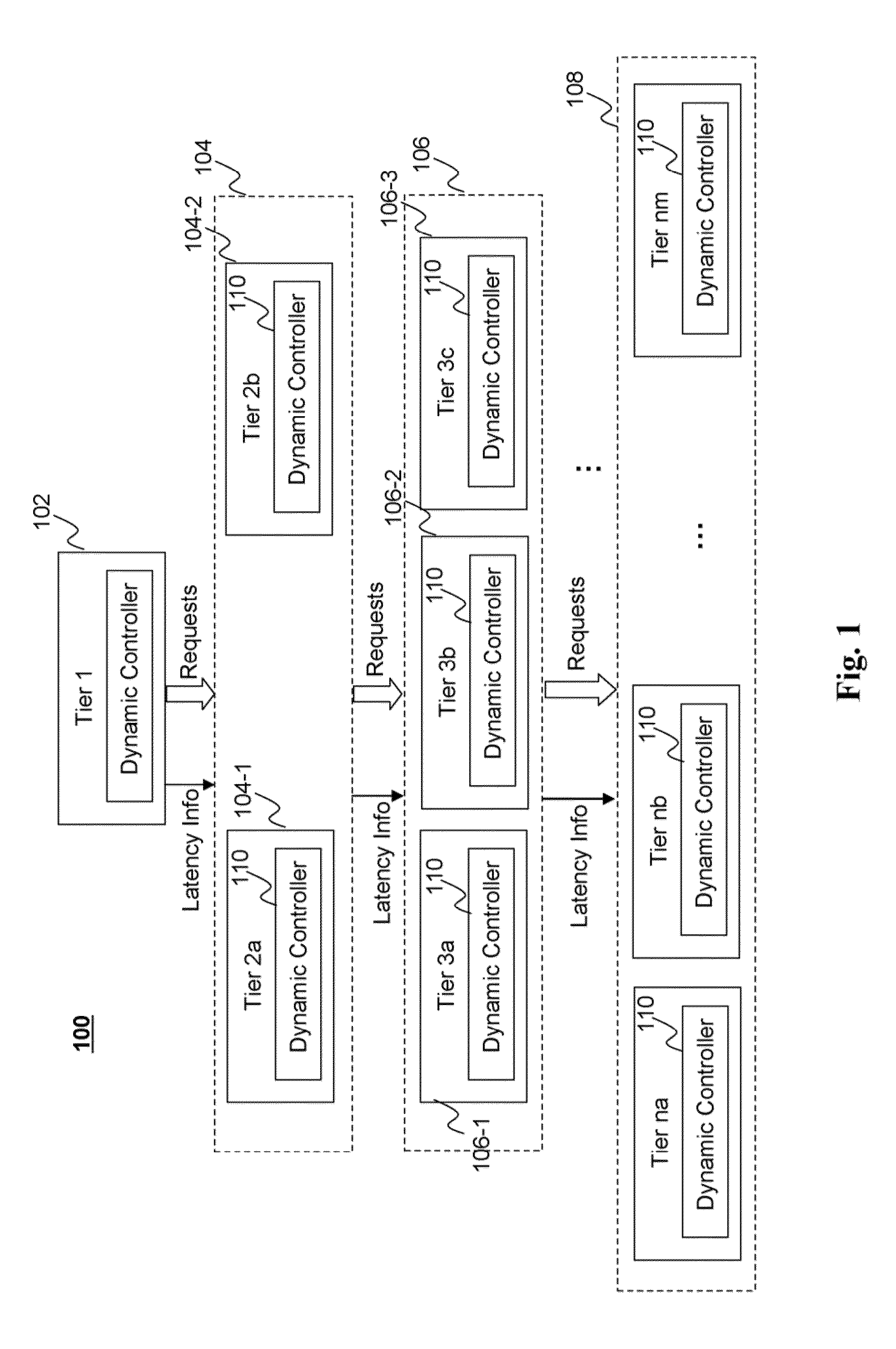

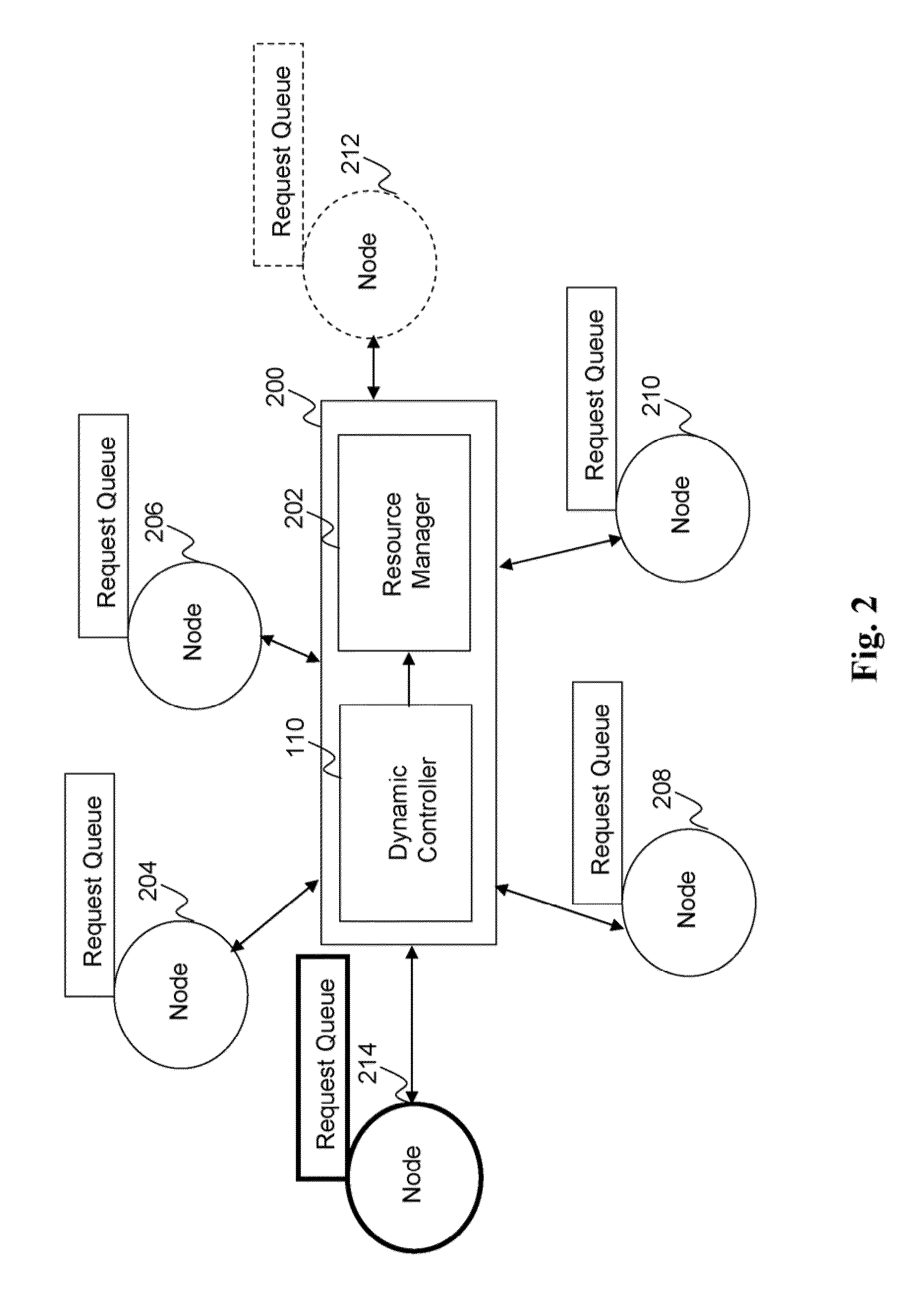

Method and system for dynamic control of a multi-tier processing system

Owner:R2 SOLUTIONS

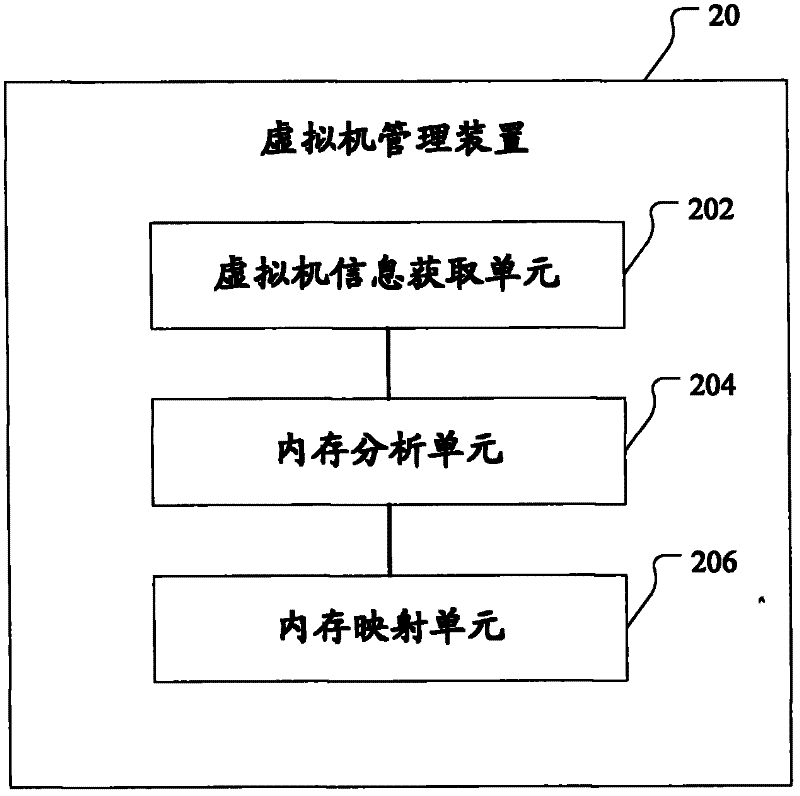

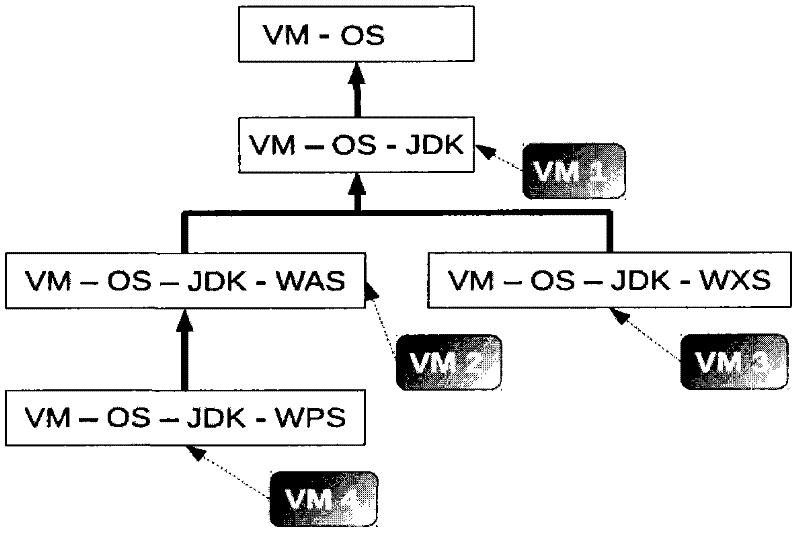

Management device and method for virtual machine

Active | CN102541619A | Remain relatively independent and isolated | Keep isolated | Resource allocation | Software simulation/interpretation/emulation | Virtual machine | Logical Memory I

The invention provides a management device and method for a virtual machine. The management device comprises a virtual machine information acquisition unit, a memory analyzing unit and a memory mapping unit, wherein the virtual machine information acquisition unit is configured to acquire software hierarchy information of the current virtual machine, the memory analyzing unit is configured to divide a logical memory distributed to the current virtual machine into a special part and a shared part with reference to existing software hierarchy information of at least one installed virtual machine and the software hierarchy information of the current virtual machine, the memory mapping unit is configured to map the shared part to a shared section of a physical memory, and the shared section is used by the at least one installed virtual machine. The invention also provides a management method corresponding to the management device. According to the management device and method provided by the invention, a plurality of virtual machines on the same physical platform can share part of a memory, so that relative independence and isolation of the virtual machines can be maintained while memory resources are effectively utilized.

Owner:IBM CORP
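
The memory-analysis step in the abstract above can be illustrated with a small sketch. It assumes that software hierarchy information is a mapping from layer name to a software identity string and that a layer is shareable when an installed virtual machine runs the identical software at that layer; both assumptions, and the name `split_memory`, are hypothetical. The "private" result corresponds to what the abstract calls the special part.

```python
from typing import Dict, List, Tuple

Stack = Dict[str, str]  # layer name -> software identity, e.g. {"os": "linux-5.4"}


def split_memory(current: Stack,
                 installed: List[Stack],
                 layer_pages: Dict[str, int]) -> Tuple[Dict[str, int], Dict[str, int]]:
    """Divide the current VM's logical memory into (shared, private) pages per layer."""
    shared: Dict[str, int] = {}
    private: Dict[str, int] = {}
    for layer, identity in current.items():
        # A layer can be shared if some installed VM runs the same software there.
        if any(vm.get(layer) == identity for vm in installed):
            shared[layer] = layer_pages[layer]
        else:
            private[layer] = layer_pages[layer]
    return shared, private
```

Only the shared dictionary would then be mapped onto the common section of physical memory; the private pages stay exclusive to the new virtual machine.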

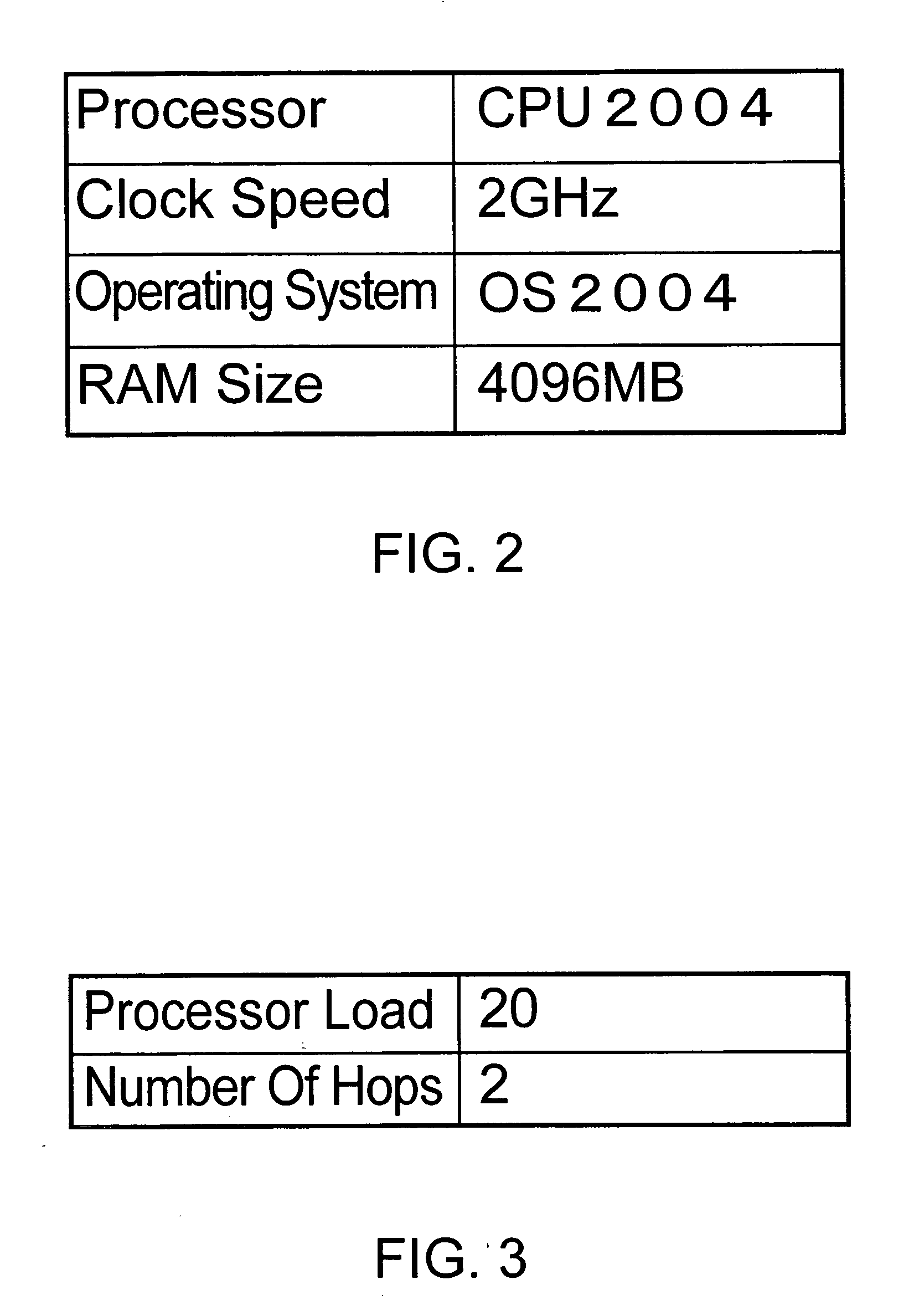

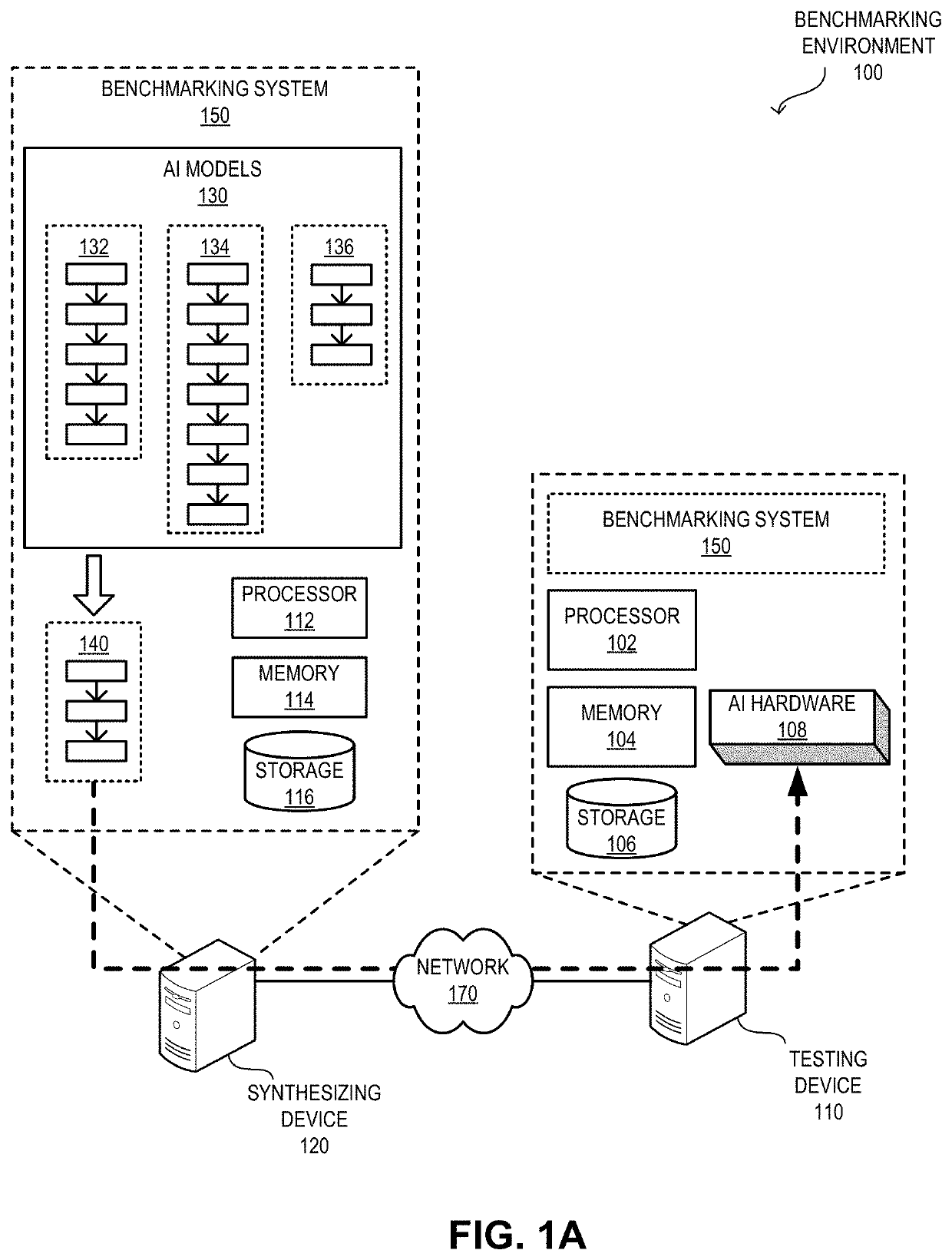

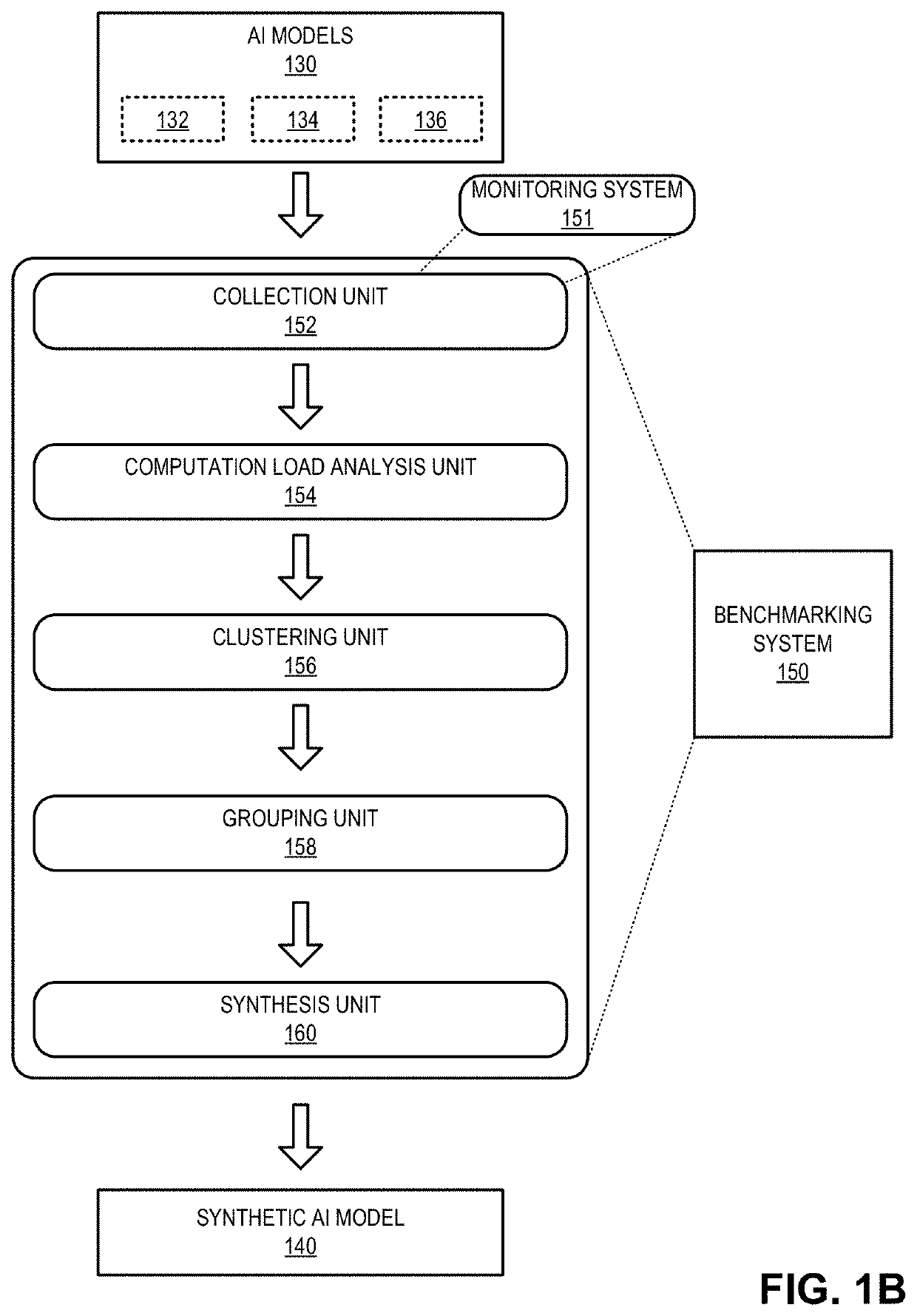

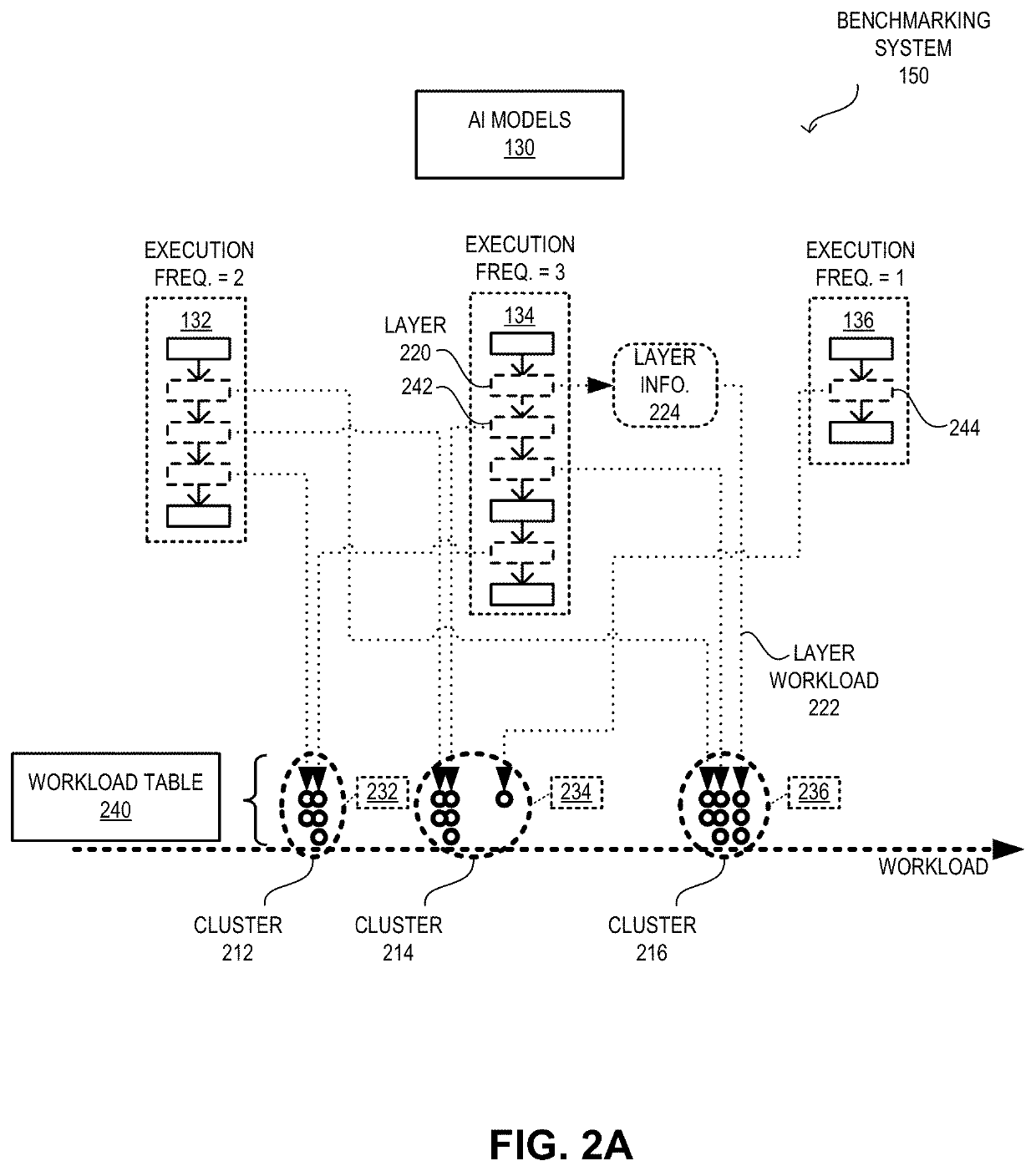

System and method for benchmarking ai hardware using synthetic ai model

Inactive | US20200042419A1 | Efficient benchmarking | Resource allocation | Hardware monitoring | Workload | Computer hardware

Owner:ALIBABA GRP HLDG LTD

Method and apparatus for allocating resources to virtual machines in cluster

Inactive | CN108241531A | Reduce waste | Reduce resource fragmentation | Resource allocation | Resource utilization | Utilization rate

The invention provides a method for allocating resources to virtual machines in a cluster. The method is characterized by comprising the steps of obtaining a request of allocating the resources to the virtual machines; according to virtual machine resource demand information carried by the request, determining to-be-allocated physical machines matched with virtual machine resource demands in the cluster; and creating virtual machines in the to-be-allocated physical machines. The physical machines matched with the virtual machine resource demands can be determined for the virtual machines as far as possible, so that the effects of remarkably reducing resource fragments and reducing cluster resource waste are achieved and the effects of increasing resource utilization rate and reducing cost are achieved.

Owner:ALIBABA GRP HLDG LTD
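
The matching step in the abstract above is not spelled out, so the following is only a sketch using a best-fit rule, a common way to limit fragmentation: among hosts that can satisfy the request, pick the one left with the smallest fractional leftover. The function `place_vm`, the `(cpu, memory)` tuples, and the leftover metric are assumptions, and demands are assumed to be positive.

```python
from typing import Dict, Optional, Tuple

Resources = Tuple[int, int]  # (CPU cores, memory in GiB)


def place_vm(demand: Resources, hosts: Dict[str, Resources]) -> Optional[str]:
    """Best-fit placement: choose the feasible host with the least leftover capacity."""
    cpu, mem = demand
    feasible = {name: free for name, free in hosts.items()
                if free[0] >= cpu and free[1] >= mem}
    if not feasible:
        return None

    def leftover(name: str) -> float:
        free_cpu, free_mem = feasible[name]
        # Sum of the fractions of free CPU and memory that would remain unused.
        return (free_cpu - cpu) / free_cpu + (free_mem - mem) / free_mem

    chosen = min(feasible, key=leftover)
    free_cpu, free_mem = hosts[chosen]
    hosts[chosen] = (free_cpu - cpu, free_mem - mem)  # record the new VM's usage
    return chosen
```

Filling nearly full hosts first keeps large hosts free for large requests, which is the fragmentation effect the abstract claims.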

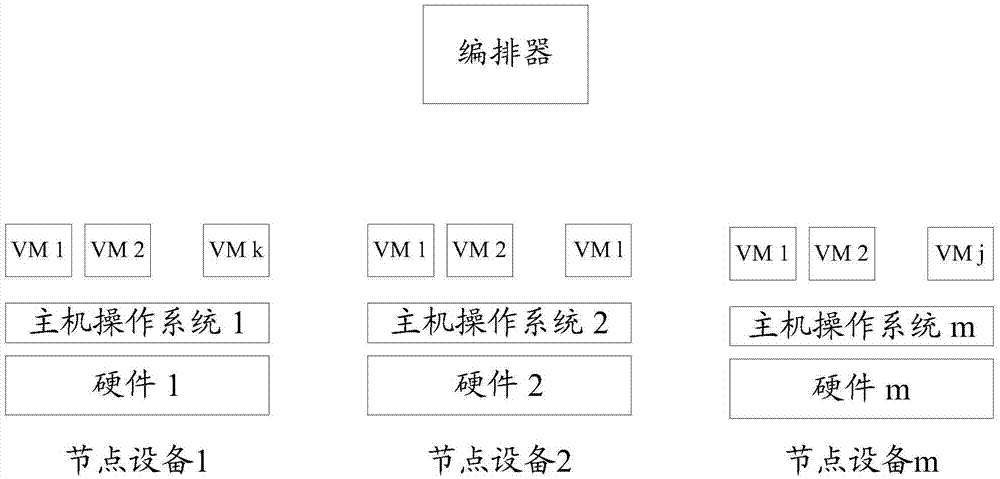

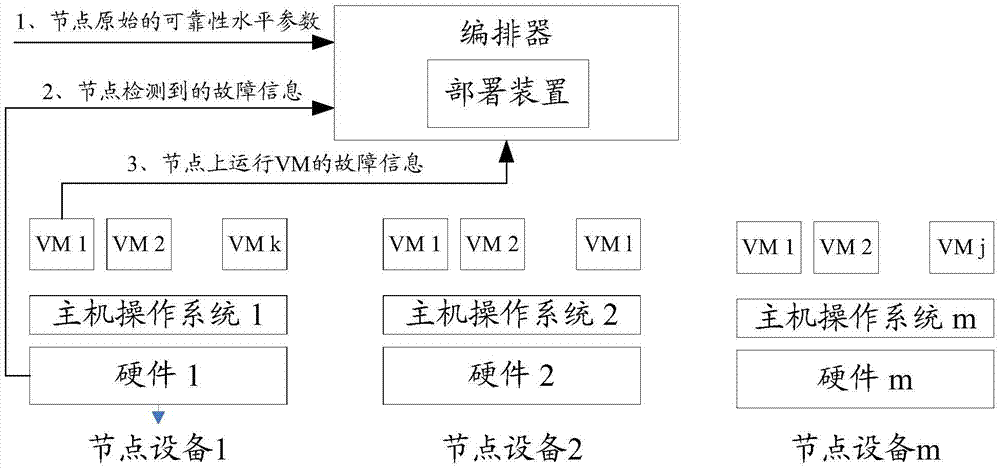

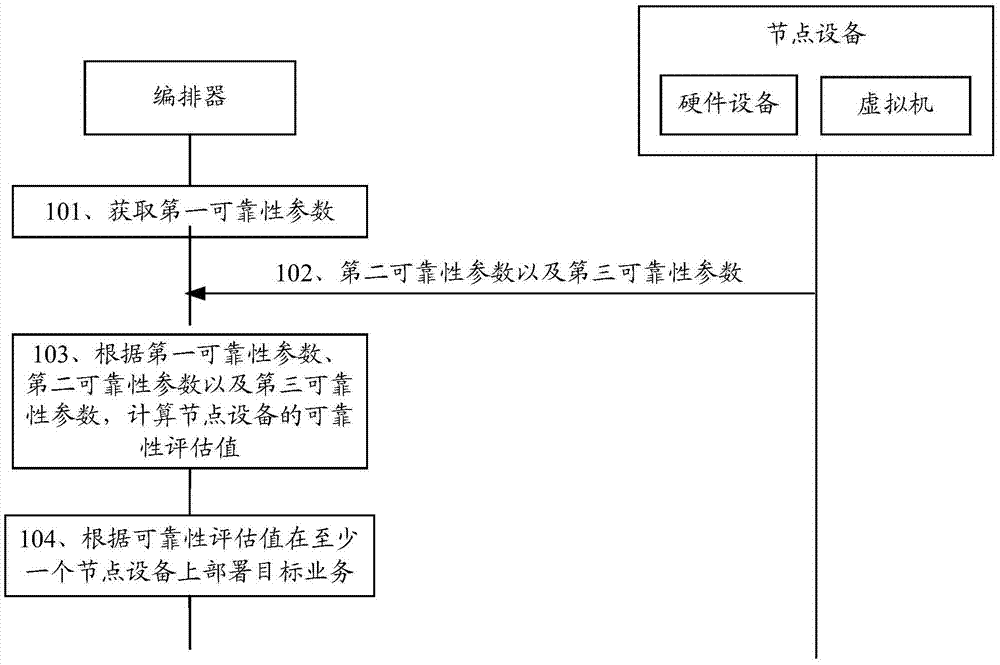

Business processing method, related device and system

Active | CN105446818A | Precise deployment | Improve efficiency | Resource allocation | Computer science | Virtual machine

Owner:HUAWEI TECH CO LTD

Multimodal transmission of packetized data

Inactive | CN108541312A | Resource allocation | Position fixation | Language processor | Application programming interface

The invention provides a system of multi-modal transmission of packetized data in a voice activated data packet based computer network environment. A natural language processor component can parse an input audio signal to identify a request and a trigger keyword. Based on the input audio signal, a direct action application programming interface can generate a first action data structure, and a content selector component can select a content item. An interface management component can identify first and second candidate interfaces, and respective resource utilization values. The interface management component can select, based on the resource utilization values, the first candidate interface to present the content item. The interface management component can provide the first action data structure to the client computing device for rendering as audio output, and can transmit the content item converted for a first modality to deliver the content item for rendering from the selected interface.

Owner:GOOGLE LLC

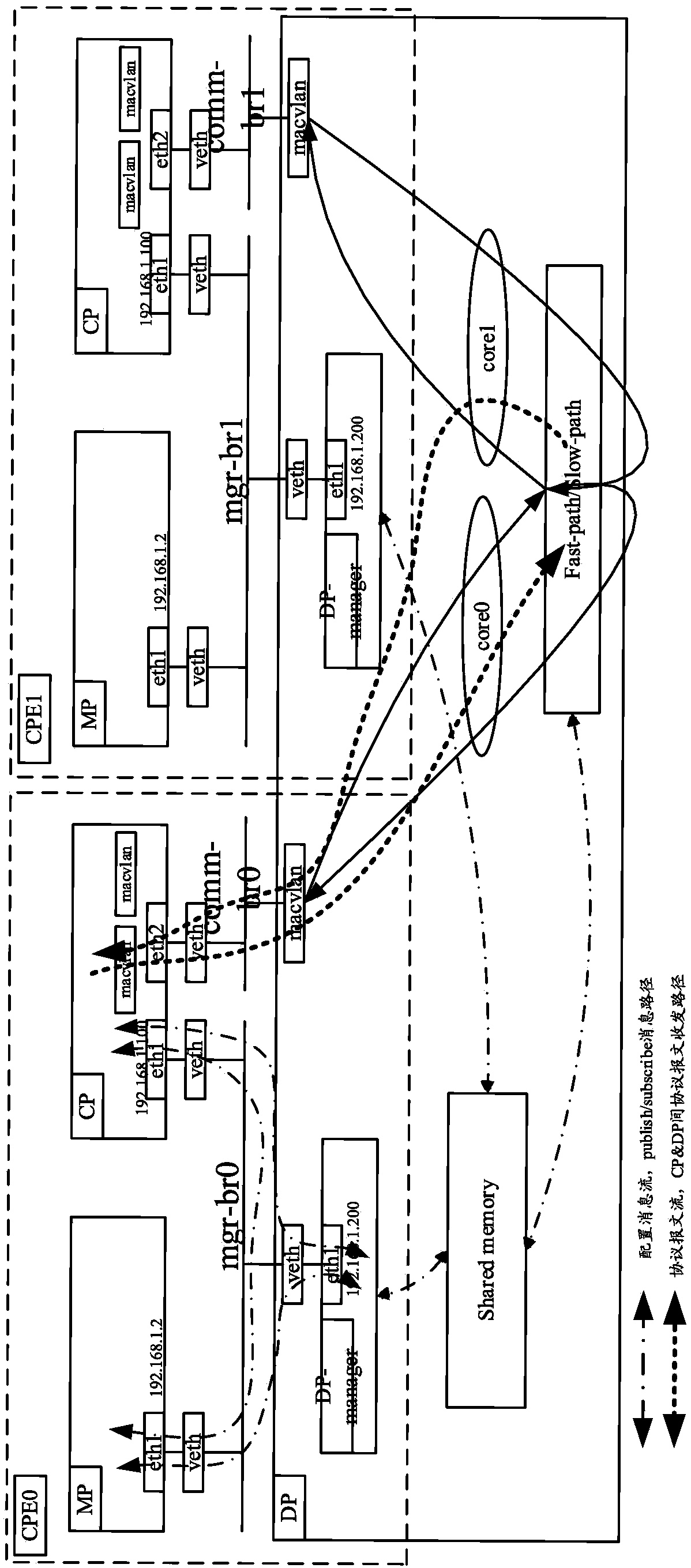

Virtualization management system for logic CPE equipment based on Docker technology and configuration method thereof

Active | CN108270856A | Solve resource problems | Solve efficiency problems | Resource allocation | Transmission | Virtualization | Resource consumption

Owner:CERTUS NETWORK TECHNANJING

Face snapshot gunlock load balancing method

Pending | CN111209119A | Increase workload | Reduce code rate | Resource allocation | Co-operative working arrangements | Face detection | Computer graphics (images)

The invention relates to the technical field of face recognition, in particular to a face snapshot gunlock load balancing method. Two paths of original images are acquired, and an acquisition module of the snapshot gunlock transmits the original images to an image encoding and decoding module. The image encoding and decoding module outputs one path to a CPU encoding module for encoding and the other path to the GPU module for face detection. After receiving the image, a CPU image application module stores the image to a local storage card and uploads it to a remote server through a network. A CPU image analysis module analyzes the locally stored images, counts the number N of face snapshots in a set time, and transmits a code rate value X needing to be adjusted; the CPU encoding module acquires X and sets its initial code rate value to the adjusted value X. The coding code rate is thus dynamically adjusted in real time: the code rate is reduced when the snapshot amount is large, which frees CPU resources for storing pictures locally and uploading them to the remote server; conversely, the code rate can be restored or even raised in periods with a small snapshot amount, achieving a load balancing strategy.

Owner:CHENGDU GUOYI ELECTRONICS TECH CO LTD
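
The code rate adjustment in the abstract above maps the snapshot count N to a new rate X. The abstract gives no formula, so the thresholds, the 80% step, and the clamping limits in this sketch are all assumed values chosen only to show the direction of the adjustment.

```python
def adjust_bitrate(snapshots: int,
                   current_kbps: int,
                   *,
                   high: int = 50,        # assumed: N at or above this counts as heavy load
                   low: int = 10,         # assumed: N at or below this counts as light load
                   step_pct: int = 80,    # assumed: scale factor in percent
                   min_kbps: int = 512,
                   max_kbps: int = 4096) -> int:
    """Return the adjusted code rate X for N snapshots counted in the last window."""
    if snapshots >= high:
        target = current_kbps * step_pct // 100   # many faces: lower the rate to free CPU
    elif snapshots <= low:
        target = current_kbps * 100 // step_pct   # few faces: restore or raise the rate
    else:
        target = current_kbps                     # moderate load: leave the rate alone
    return max(min_kbps, min(max_kbps, target))
```

Integer arithmetic keeps the result deterministic, and the clamp keeps repeated adjustments inside a usable range.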

© 2024 PatSnap. All rights reserved.