10 results about "Optical flow" patented technology

Optical flow or optic flow is the pattern of apparent motion of objects, surfaces, and edges in a visual scene caused by the relative motion between an observer and a scene. Optical flow can also be defined as the distribution of apparent velocities of movement of brightness patterns in an image. The concept of optical flow was introduced by the American psychologist James J. Gibson in the 1940s to describe the visual stimulus provided to animals moving through the world. Gibson stressed the importance of optic flow for affordance perception, the ability to discern possibilities for action within the environment. Followers of Gibson and his ecological approach to psychology have further demonstrated the role of the optical flow stimulus for the perception of movement by the observer in the world; perception of the shape, distance and movement of objects in the world; and the control of locomotion.
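The definition of optical flow as apparent velocities of brightness patterns can be made concrete with a small numerical sketch. The code below is not taken from any of the listed patents. It assumes the common brightness-constancy constraint `Ix*u + Iy*v + It = 0` and solves it by least squares over one window (a Lucas-Kanade style estimate). The synthetic `pattern` function, the window size and the shift values are all hypothetical choices for illustration.

```python
import math

def pattern(x, y):
    """Smooth synthetic brightness pattern (hypothetical test image)."""
    return math.sin(0.3 * x) + math.sin(0.25 * y)

def estimate_flow(frame1, frame2):
    """Least-squares (u, v) for one window from Ix*u + Iy*v + It = 0."""
    h, w = len(frame1), len(frame1[0])
    sxx = sxy = syy = sxt = syt = 0.0
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Central differences approximate the spatial gradients.
            ix = (frame1[y][x + 1] - frame1[y][x - 1]) / 2.0
            iy = (frame1[y + 1][x] - frame1[y - 1][x]) / 2.0
            # Frame difference approximates the temporal derivative.
            it = frame2[y][x] - frame1[y][x]
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    # Solve the 2x2 normal equations [[sxx, sxy], [sxy, syy]] [u, v] = -[sxt, syt].
    det = sxx * syy - sxy * sxy
    u = (-syy * sxt + sxy * syt) / det
    v = (sxy * sxt - sxx * syt) / det
    return u, v

# Assumed ground-truth motion: the pattern moves by (0.2, -0.1) pixels per frame.
TRUE_U, TRUE_V = 0.2, -0.1
SIZE = 32
frame1 = [[pattern(x, y) for x in range(SIZE)] for y in range(SIZE)]
frame2 = [[pattern(x - TRUE_U, y - TRUE_V) for x in range(SIZE)] for y in range(SIZE)]

u, v = estimate_flow(frame1, frame2)
print(f"estimated flow: u={u:.3f}, v={v:.3f}")
```

The estimate is only approximate because the constraint is a first-order linearization and the gradients are finite differences. Practical methods typically add image pyramids and iterate to handle larger motions.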

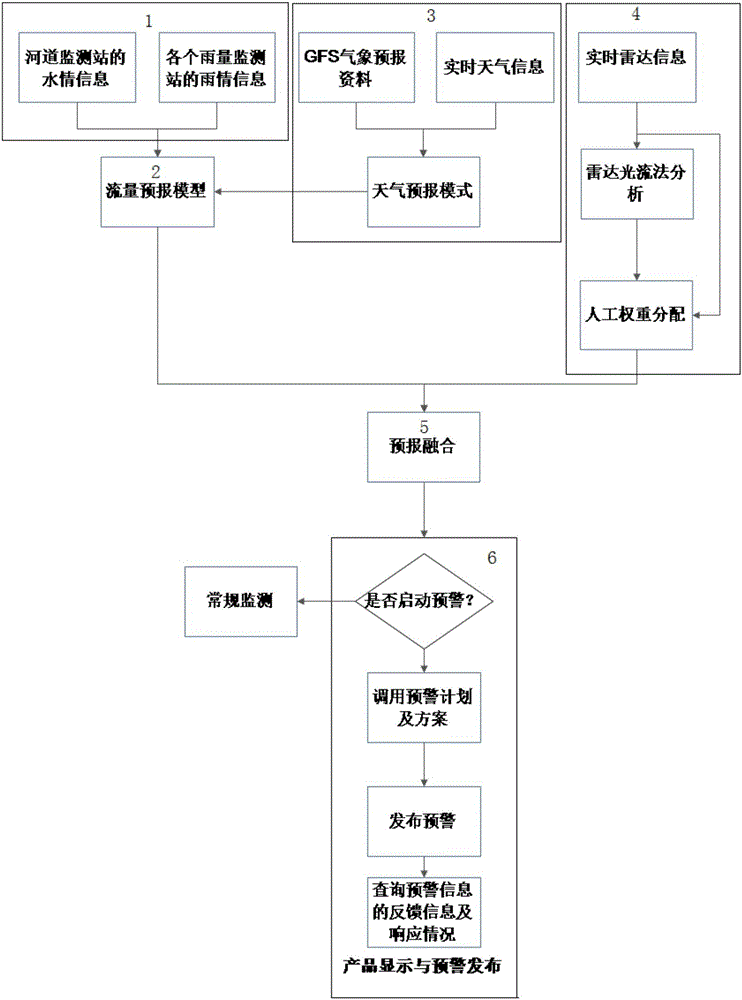

Device and method for monitoring and early warning of flood damages based on multi-resource integration

Active · CN105894741A · Improve accuracy · Exact approximation to the true solution · Human health protection · Alarms · Multi resource · Radar

Owner: NANJING UNIV OF INFORMATION SCI & TECH

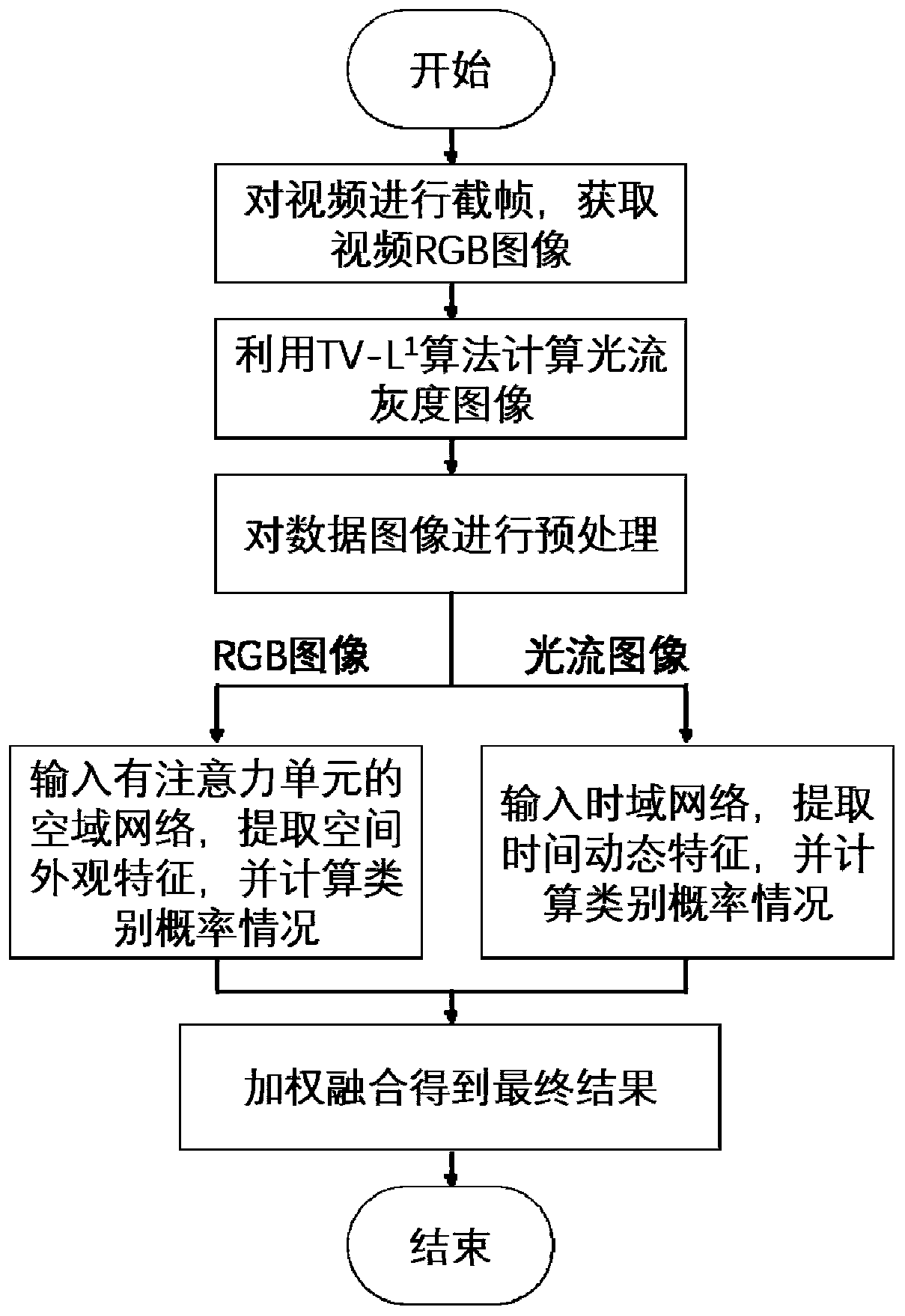

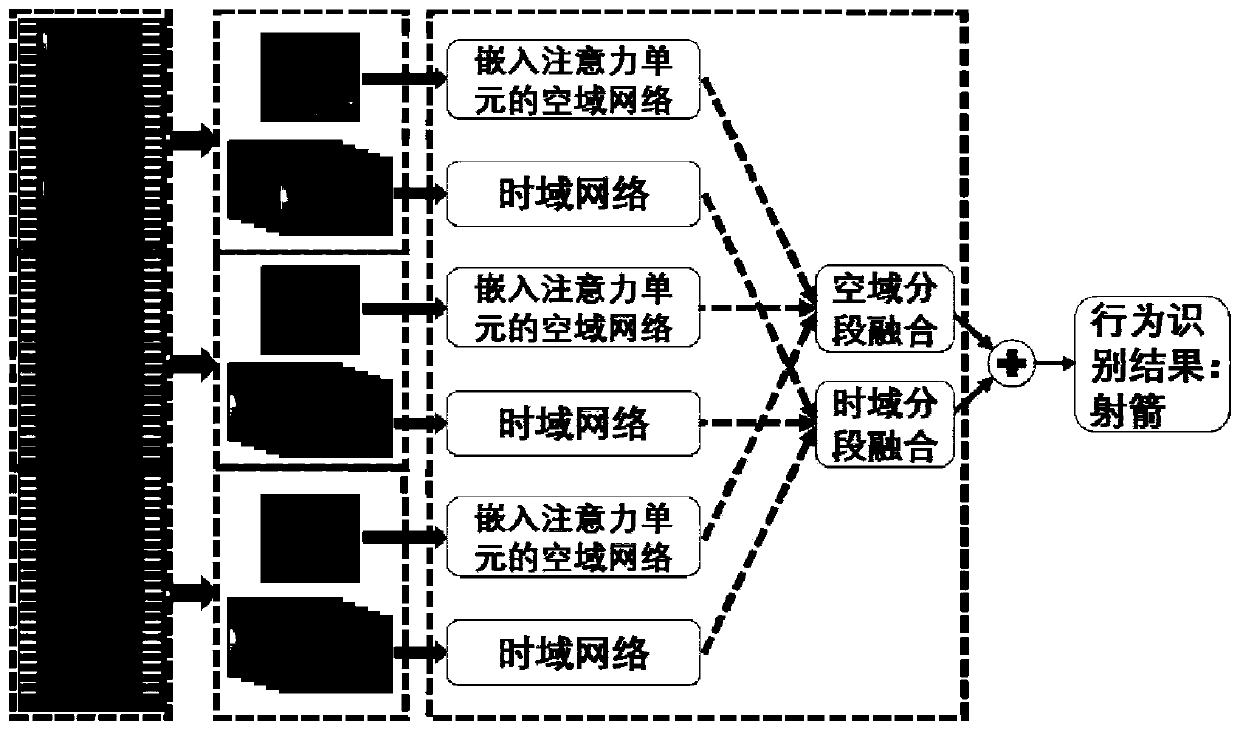

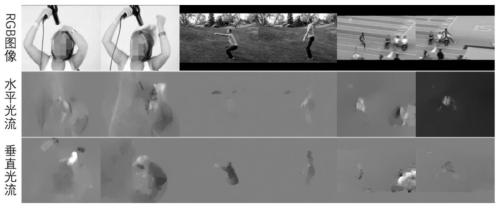

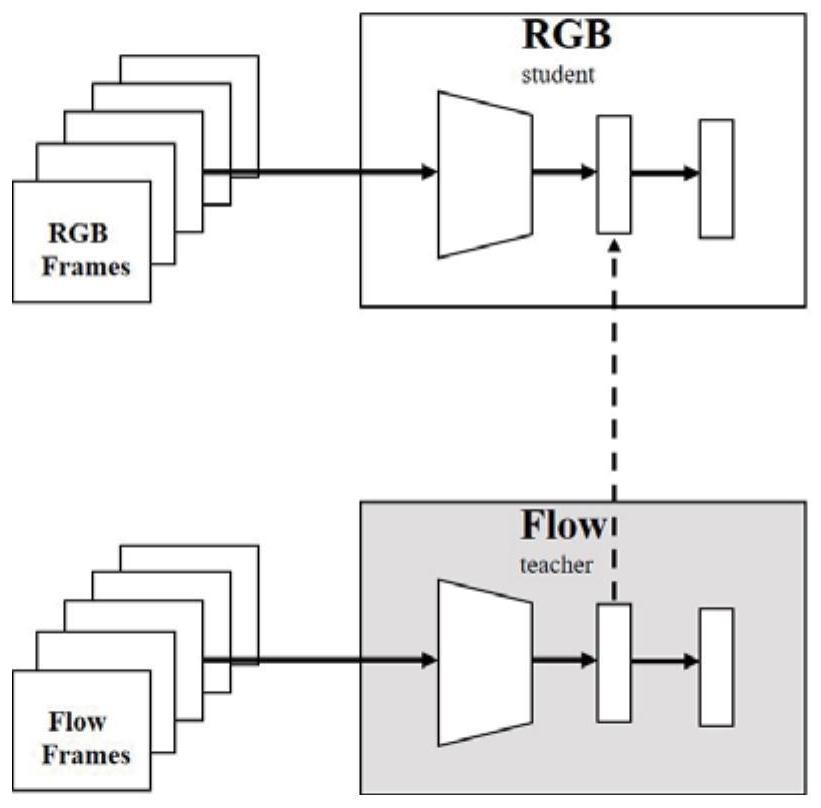

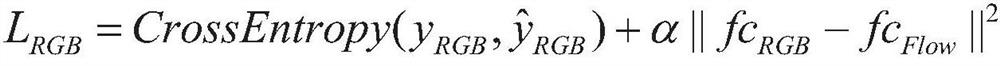

Behavior recognition method and system based on attention mechanism double-flow network

Inactive · CN111462183A · Take advantage of · Improve the accuracy of behavior recognition · Image enhancement · Image analysis · Time domain · RGB image

Owner: SHANDONG UNIV

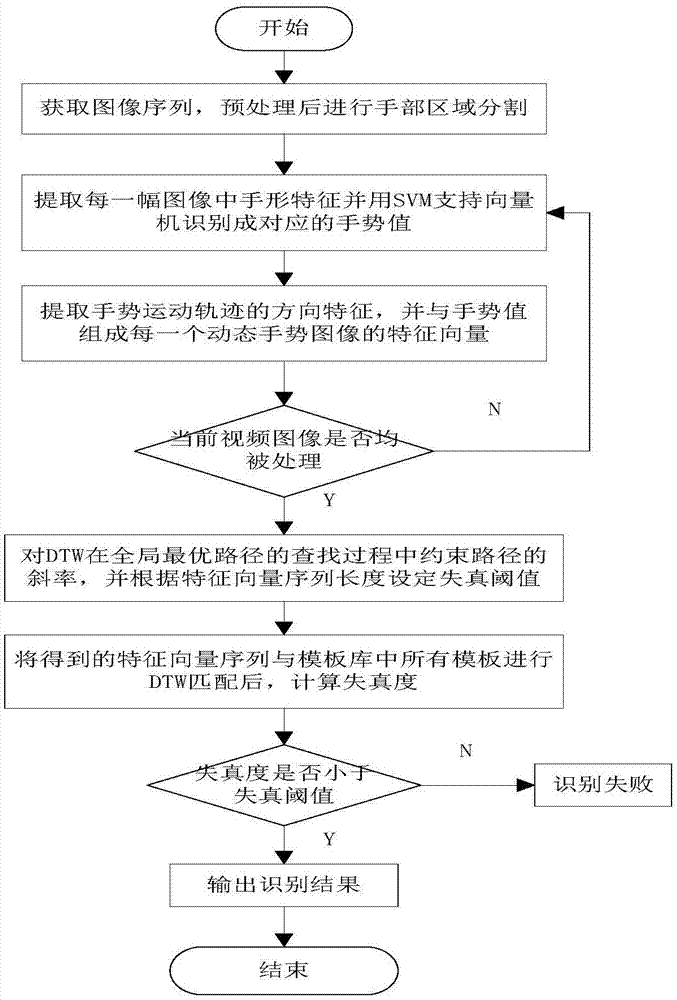

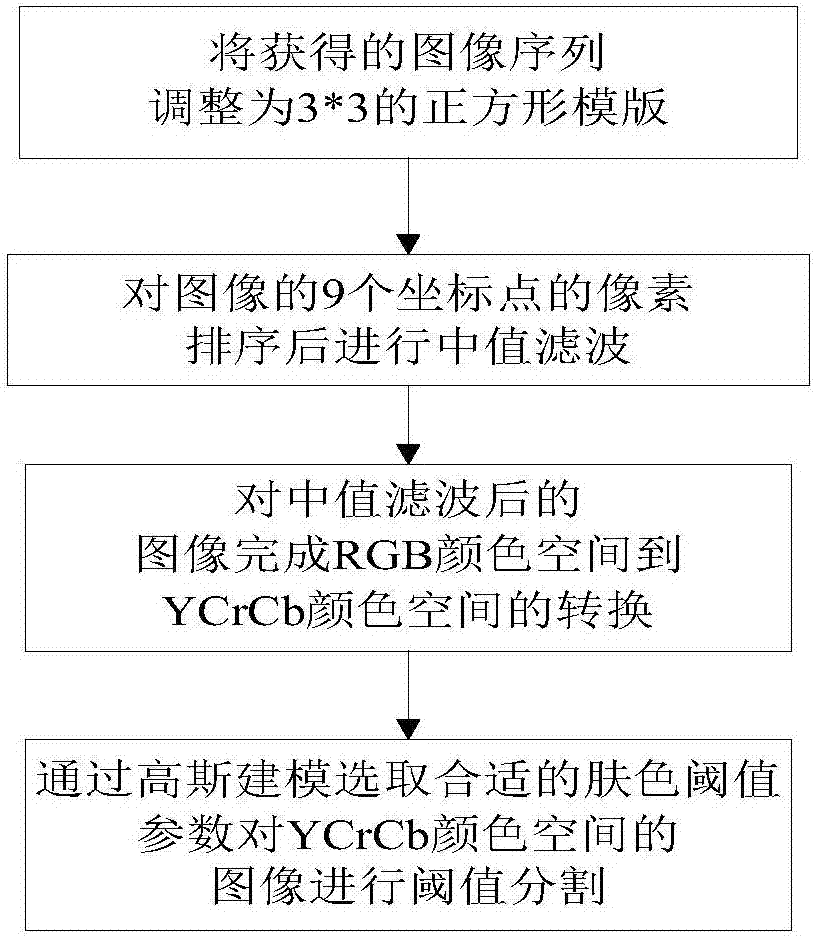

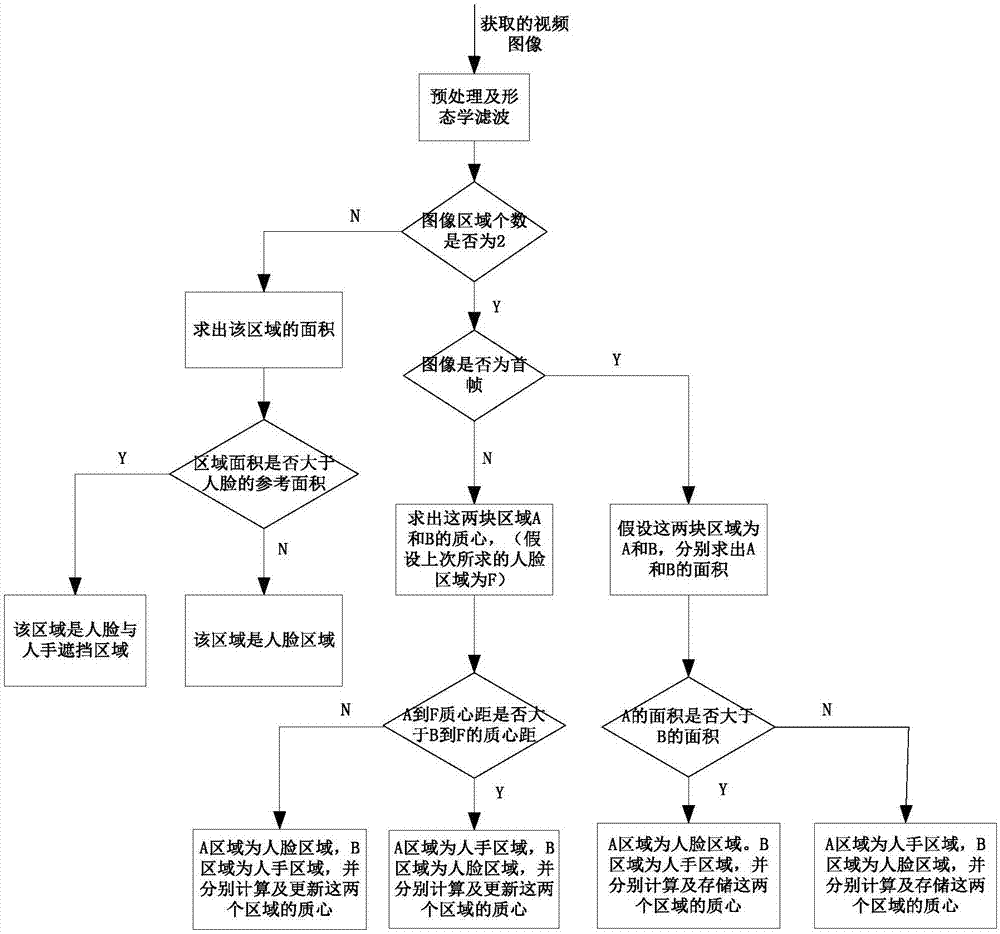

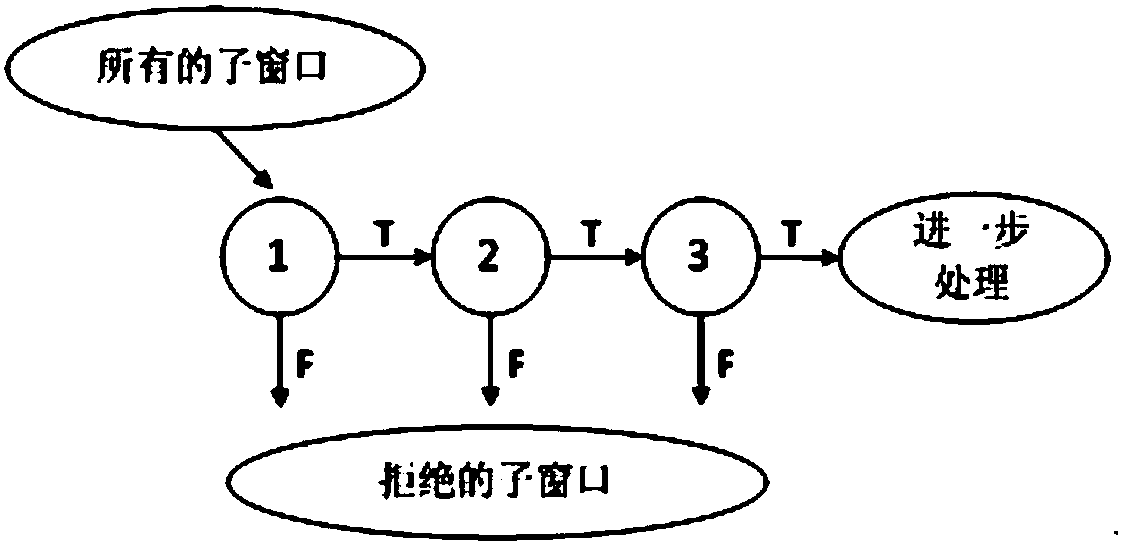

Real-time gesture recognition method

Inactive · CN107958218A · Improve dynamic gesture recognition rate · Improve recognition rate · Input/output for user-computer interaction · Character and pattern recognition · Support vector machine · Feature vector

Owner: NANJING UNIV OF POSTS & TELECOMM

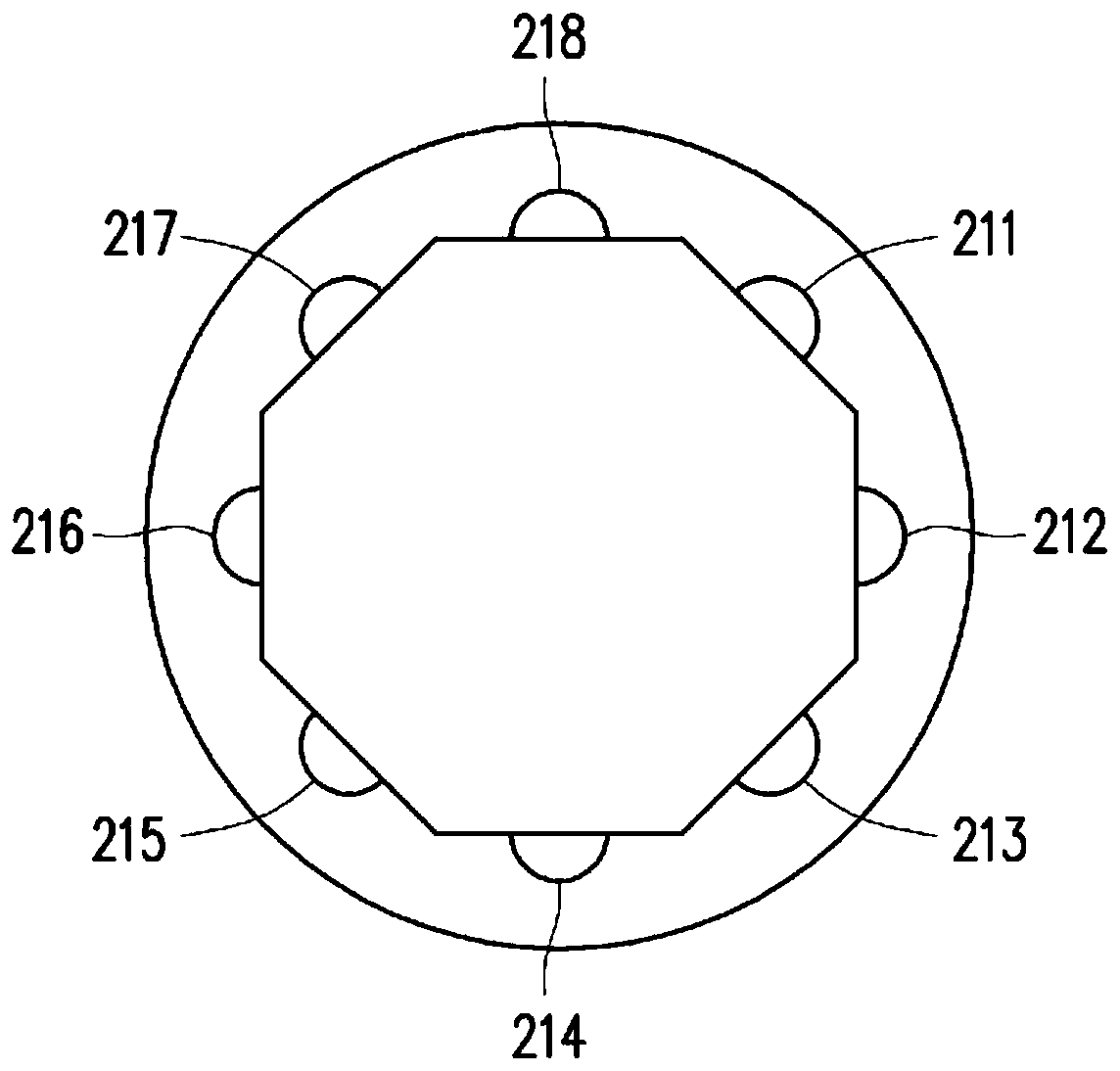

Feature point tracking method based on event camera

Active · CN110390685A · Guaranteed asynchronous tracking · High precision · Image enhancement · Image analysis · Pattern recognition · Data acquisition

The invention discloses a feature point tracking method based on an event camera that aims to improve feature point tracking precision. The tracking system is composed of a data acquisition module, an initialization module, an event set selection module, a matching module, a feature point updating module and a template edge updating module. The initialization module extracts feature points and an edge graph from the image frame. The event set selection module selects an event set S for each feature point from the event stream around that feature point. The matching module matches S with the template edges around the feature points to solve an optical flow set Gk of the n feature points at time tk. The feature point updating module calculates a position set FDk+1 of the n feature points at time tk+1 according to Gk, and the template edge updating module updates the positions in PBDk by using IMU data to obtain a position set PBDk+1 of the template edges corresponding to the n feature points at time tk+1. With this method, the precision of tracking feature points on the event stream can be improved, and the average tracking time of the feature points can be prolonged.

Owner: NAT UNIV OF DEFENSE TECH
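The feature point updating step in the abstract above can be illustrated with a minimal sketch. The abstract does not state how FDk+1 is computed from Gk, so this example assumes each flow vector is a constant velocity in pixels per second over the interval from tk to tk+1. The function name `update_positions` and all sample values are hypothetical.

```python
def update_positions(points, flows, dt):
    """Move each feature point along its flow vector for dt seconds.

    Assumption (not from the patent): flow is a constant velocity in
    pixels per second between two consecutive timestamps.
    """
    return [(x + u * dt, y + v * dt) for (x, y), (u, v) in zip(points, flows)]

# Hypothetical data: positions FD_k and optical flow set G_k for n = 2 points.
fd_k = [(10.0, 20.0), (5.0, 5.0)]
g_k = [(2.0, -1.0), (0.0, 4.0)]

fd_k1 = update_positions(fd_k, g_k, dt=0.5)
print(fd_k1)
```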

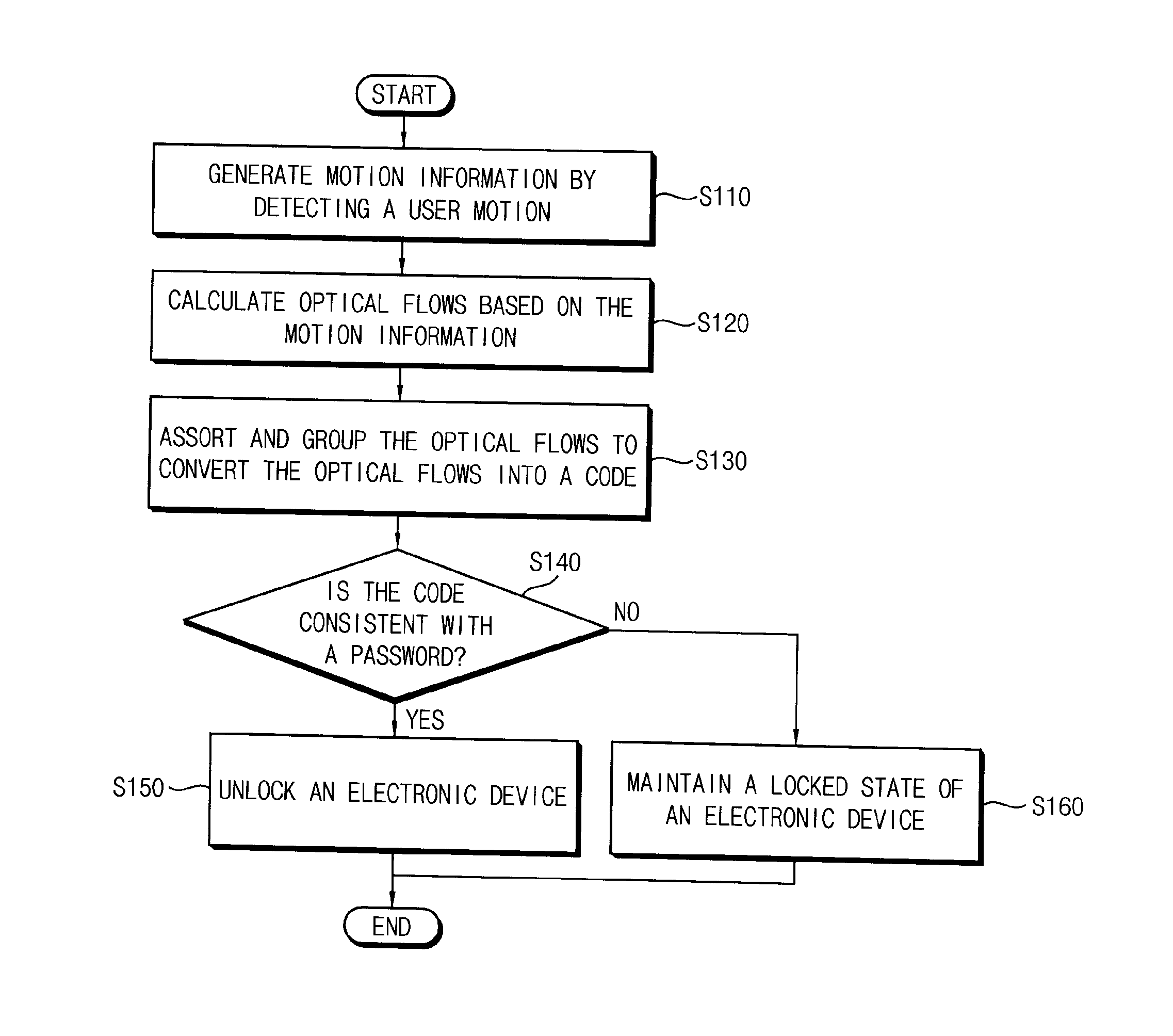

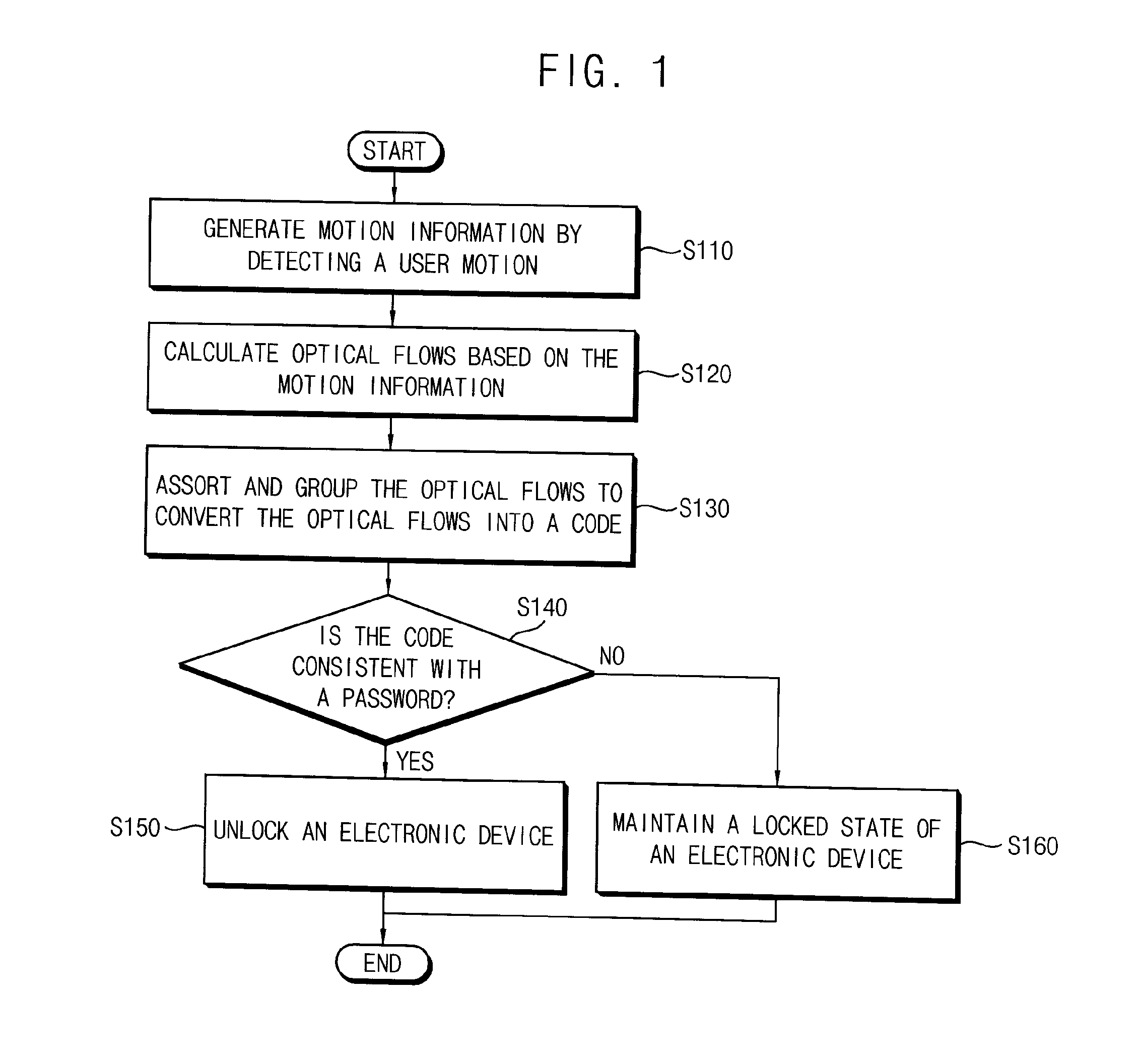

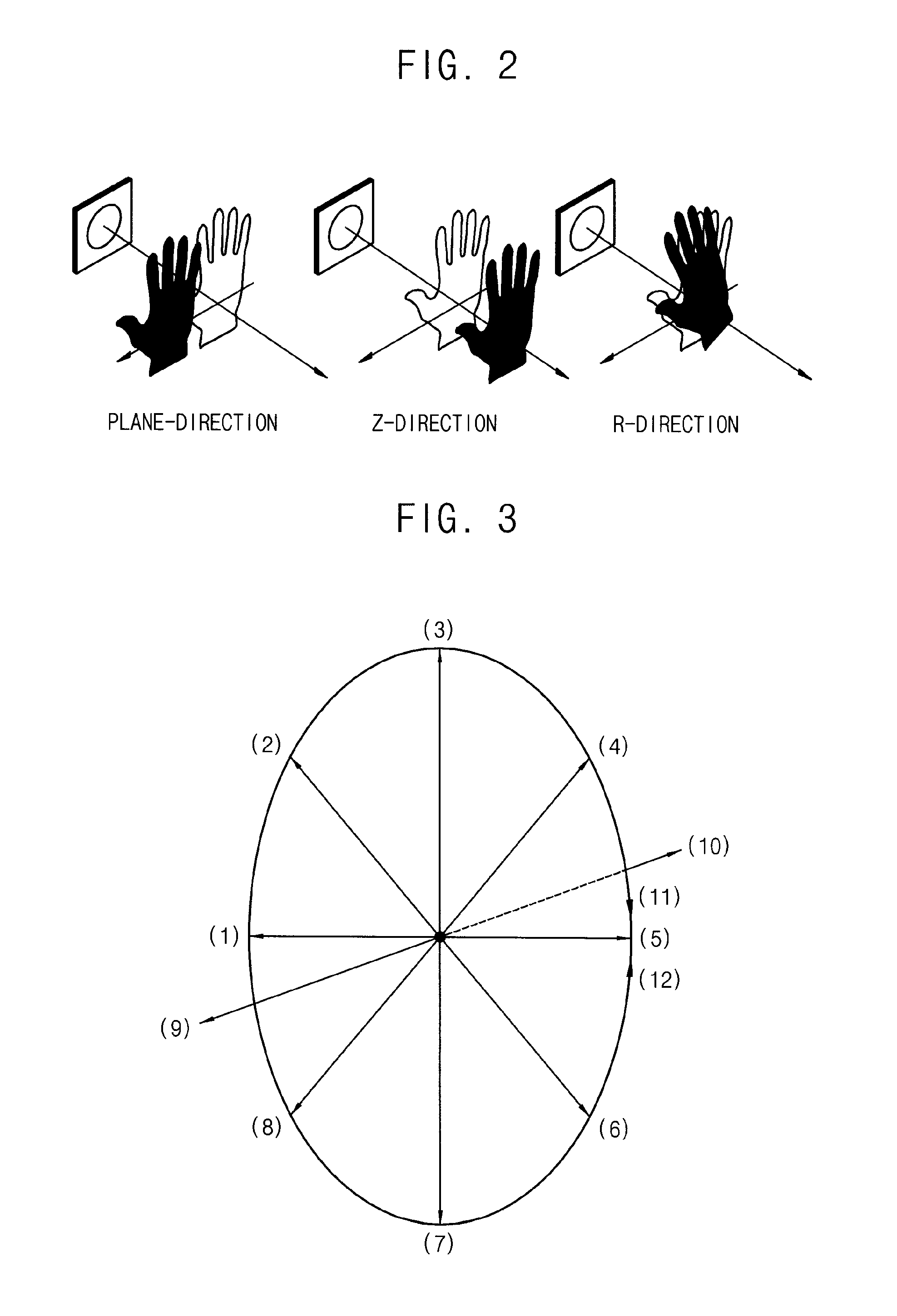

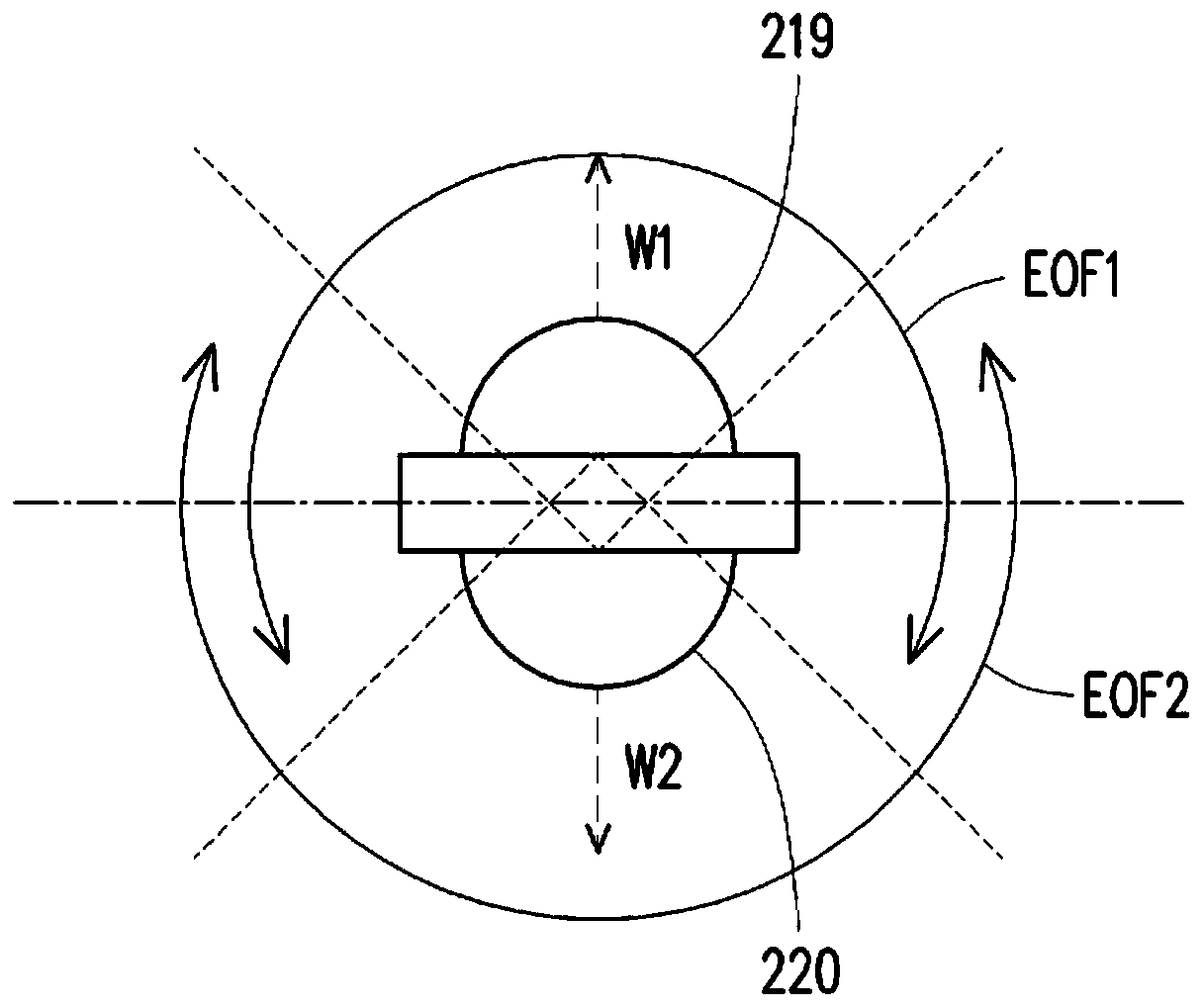

Method of unlocking an electronic device based on motion recognitions, motion recognition unlocking system, and electronic device including the same

Inactive · US20150248551A1 · Digital data processing details · Analogue secrecy/subscription systems · Password · Simulation

Owner: SAMSUNG ELECTRONICS CO LTD

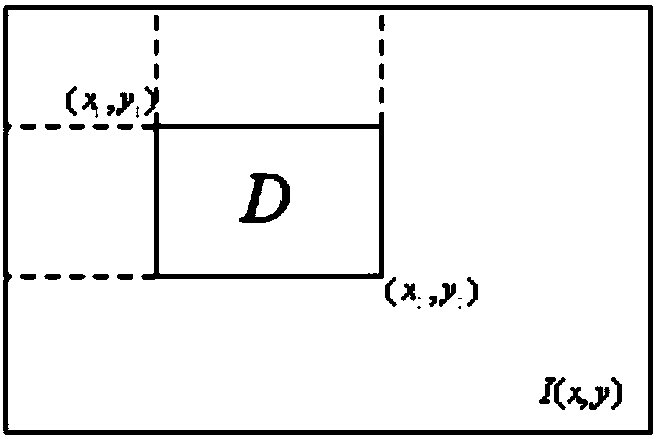

Multi-area real-time motion detection method based on monitoring video

Active · CN108764148A · Realize space-time position detection · Real-time processing · Image analysis · Character and pattern recognition · Optical flow · Test phase

The invention discloses a multi-area real-time motion detection method based on a monitoring video. The method comprises a model training phase and a testing phase. The model training phase comprises a step of acquiring training data and marking a database of specific actions; a step of calculating a dense optical flow of each video sequence in the training data, obtaining the corresponding optical flow sequence, and marking the optical flow images in that sequence; and a step of training the target detection model YOLO v3 on the video sequences and the optical flow sequences to obtain an RGB YOLO v3 model and an optical flow YOLO v3 model. The method can detect the spatiotemporal position of the specific actions in the monitoring video and can process the monitoring video in real time.

Owner: NORTHEASTERN UNIV
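The abstract above feeds optical flow images to a detector, which requires turning a dense flow field into image data. The patent text does not describe its encoding, so the sketch below shows one common convention as an assumption: clip each flow component to a fixed bound and rescale it linearly to an 8-bit gray value. The names `flow_to_gray` and `encode_flow_field` and the bound of 20 pixels are hypothetical.

```python
def flow_to_gray(value, bound=20.0):
    """Map one flow component from [-bound, bound] to an integer in 0..255."""
    clipped = max(-bound, min(bound, value))
    # Adding 0.5 before truncation rounds to the nearest integer.
    return int((clipped + bound) * 255.0 / (2.0 * bound) + 0.5)

def encode_flow_field(flow, bound=20.0):
    """Split a dense flow field into two gray images (horizontal, vertical)."""
    u_img = [[flow_to_gray(u, bound) for (u, _v) in row] for row in flow]
    v_img = [[flow_to_gray(v, bound) for (_u, v) in row] for row in flow]
    return u_img, v_img

# Hypothetical 1x2 flow field: each entry is (u, v) in pixels per frame.
flow = [[(0.0, 20.0), (-20.0, 100.0)]]
u_img, v_img = encode_flow_field(flow)
print(u_img, v_img)
```

Zero motion maps to mid-gray, so static background looks uniform while moving regions stand out. Values beyond the bound saturate.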

Image processing device and image processing method

Active · CN110264406A · Reduce operational complexity · High image quality · Image analysis · Geometric image transformation · Imaging processing · Imaging quality

Owner: VIA Technologies (Shenzhen) Co., Ltd. (威盛电子(深圳)有限公司)

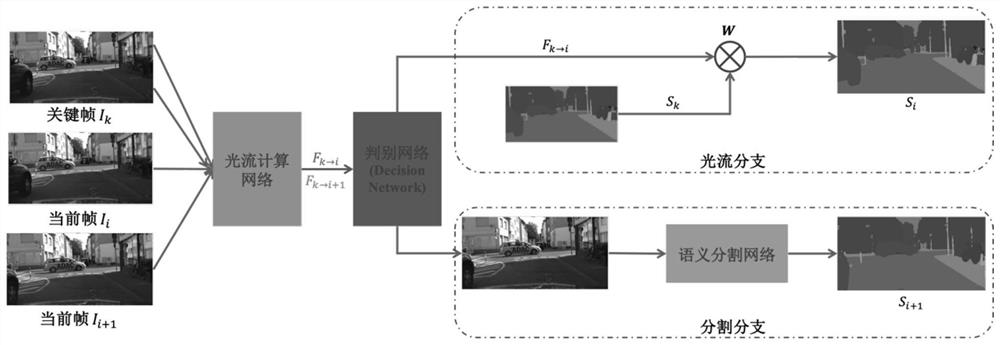

Unmanned aerial vehicle visual angle video semantic segmentation method based on deep learning

Pending · CN113269133A · Easy to use and flexible · Reduction procedure · Character and pattern recognition · Neural architectures · Image segmentation · Optical flow

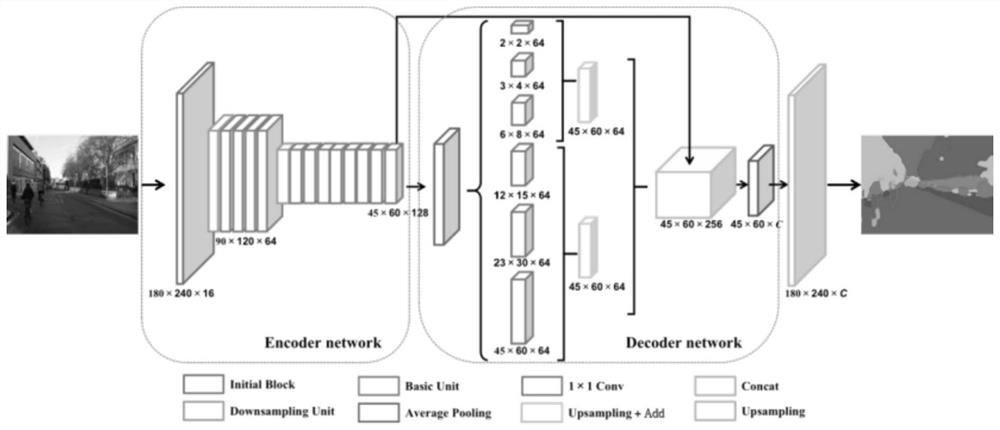

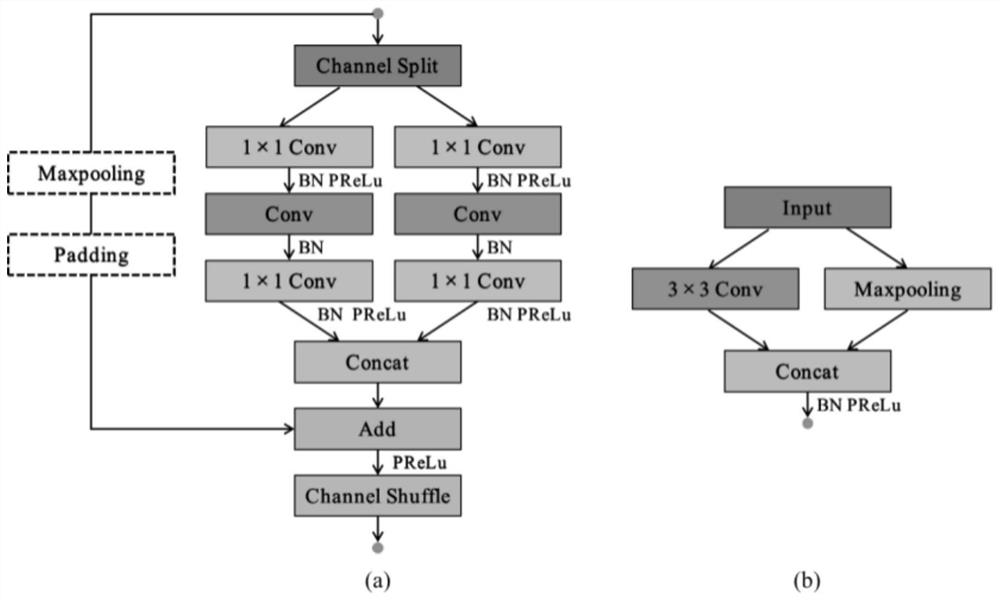

The invention belongs to the field of unmanned aerial vehicle vision and relates to an unmanned aerial vehicle view angle video semantic segmentation method based on deep learning. To solve the image semantic segmentation problem, an asymmetric encoder-decoder network structure is designed. In the encoder stage, channel split and channel recombination are fused to improve a Bottleneck structure that carries out down-sampling and feature extraction. In the decoder stage, rich features are extracted and fused by a spatial pyramid multi-feature fusion module. Finally, up-sampling is performed to obtain a segmentation result. For the video semantic segmentation problem, the image segmentation model designed by the invention is used as the segmentation module, the key frame selection strategy is improved, and feature transfer is performed in combination with an optical flow method, which reduces redundancy and accelerates video segmentation.

Owner: DALIAN UNIV OF TECH
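The combination of key frame selection and optical-flow feature transfer in the abstract above can be sketched as follows. The patent does not give its selection rule or warping scheme, so this example makes two assumptions: a frame becomes a new key frame when the mean flow magnitude exceeds a threshold, and otherwise the key frame's features are carried over by nearest-neighbor backward warping. The names `mean_flow_magnitude`, `is_key_frame`, `warp_features` and the threshold value are hypothetical.

```python
import math

def mean_flow_magnitude(flow):
    """Average length of the flow vectors in a dense flow field."""
    vectors = [vec for row in flow for vec in row]
    return sum(math.hypot(dx, dy) for dx, dy in vectors) / len(vectors)

def is_key_frame(flow, threshold=2.0):
    """Assumed rule: large motion since the last key frame forces re-segmentation."""
    return mean_flow_magnitude(flow) > threshold

def warp_features(key_feat, flow):
    """Backward warp: each current pixel samples the key frame at (x+dx, y+dy)."""
    h, w = len(key_feat), len(key_feat[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx, dy = flow[y][x]
            # Nearest-neighbor sampling, clamped to the image border.
            sx = min(max(int(round(x + dx)), 0), w - 1)
            sy = min(max(int(round(y + dy)), 0), h - 1)
            out[y][x] = key_feat[sy][sx]
    return out

# Hypothetical 2x3 label map from the key frame, and a flow field that
# points one pixel to the left for every pixel of the current frame.
key_feat = [[1, 2, 3], [4, 5, 6]]
flow = [[(-1.0, 0.0)] * 3 for _ in range(2)]

if is_key_frame(flow):
    current = key_feat  # placeholder: a real system would run the segmentation model
else:
    current = warp_features(key_feat, flow)
print(current)
```

Warping is much cheaper than running a full segmentation network, which is why skipping non-key frames can speed up video segmentation. The trade-off is that warping errors accumulate until the next key frame.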

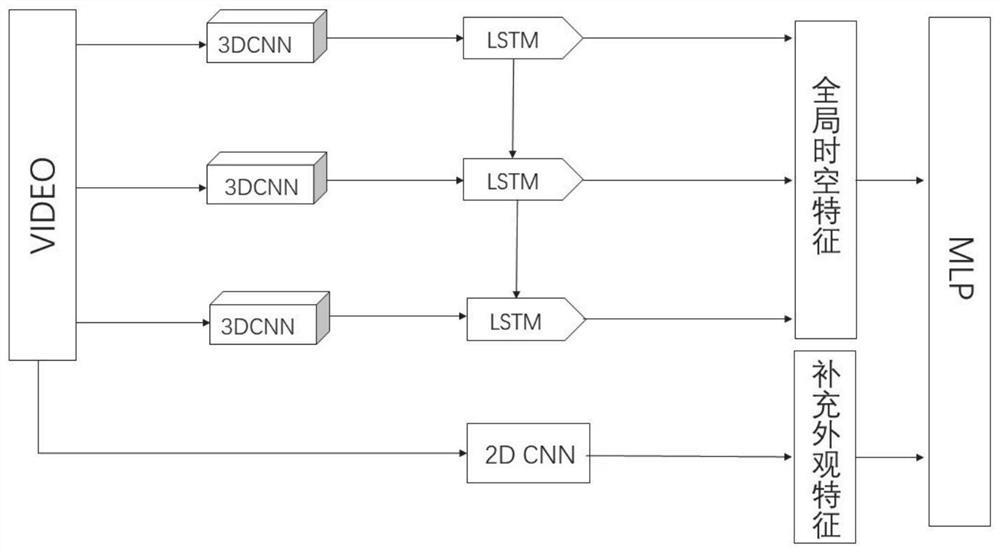

Zinc flotation working condition identification method based on long-time-history depth features

Pending · CN113591654A · Prevent extraction · Resolution time · Character and pattern recognition · Neural architectures · Feature extraction · Engineering

Owner: CENT SOUTH UNIV

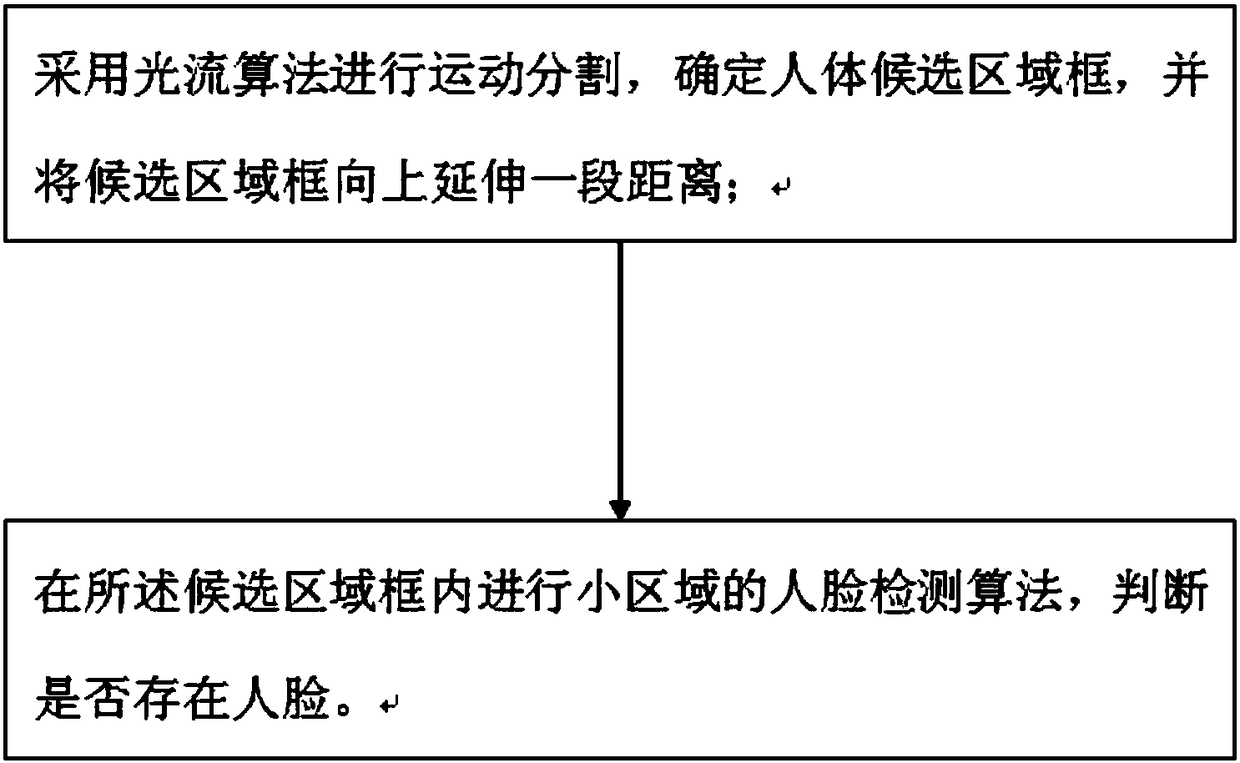

Fast human body searching method and system based on motion segmentation

Inactive · CN109215052A · Reduced area · Improve classification accuracy · Image analysis · Character and pattern recognition · Human body · Face detection

Owner: SHENYANG SIASUN ROBOT & AUTOMATION

© 2024 PatSnap. All rights reserved.